Practice Free DP-600 Exam Online Questions

You have a custom Direct Lake semantic model named Model1 that has one billion rows of data.

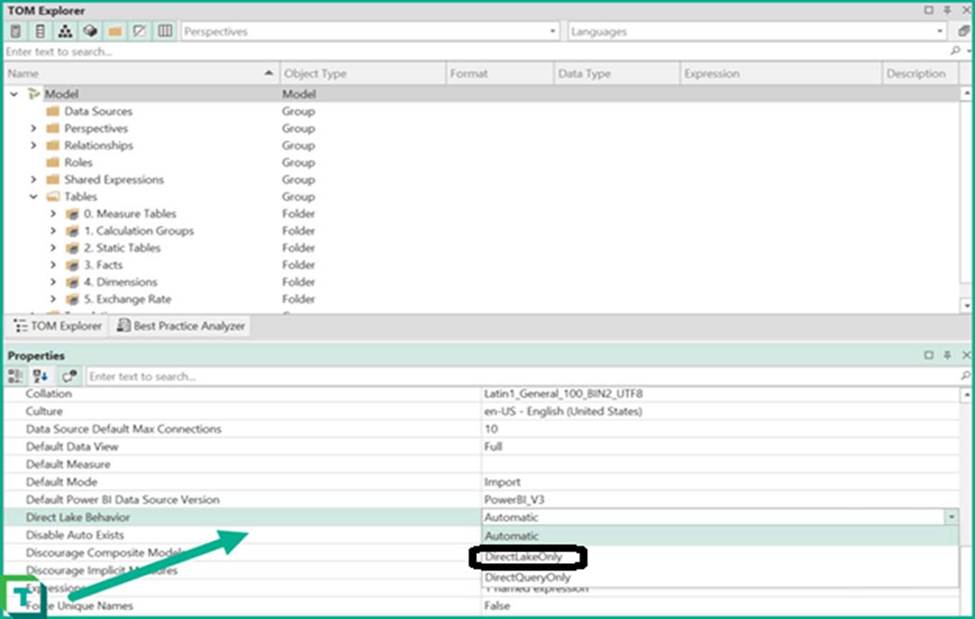

You use Tabular Editor to connect to Model1 by using the XMLA endpoint.

You need to ensure that when users interact with reports based on Model1, their queries always use Direct Lake mode.

What should you do?

- A . From Model, configure the Default Mode option.

- B . From Partitions, configure the Mode option.

- C . From Model, configure the Storage Location option.

- D . From Model, configure the Direct Lake Behavior option.

D

Explanation:

New Behavior Property

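With the update to the latest TOM library, a new Fabric-only property is available in the model properties. Direct Lake Behavior controls whether the model can fall back to DirectQuery. Setting it to DirectLakeOnly disables fallback, so a query that cannot be answered in Direct Lake mode returns an error instead of silently switching to DirectQuery.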

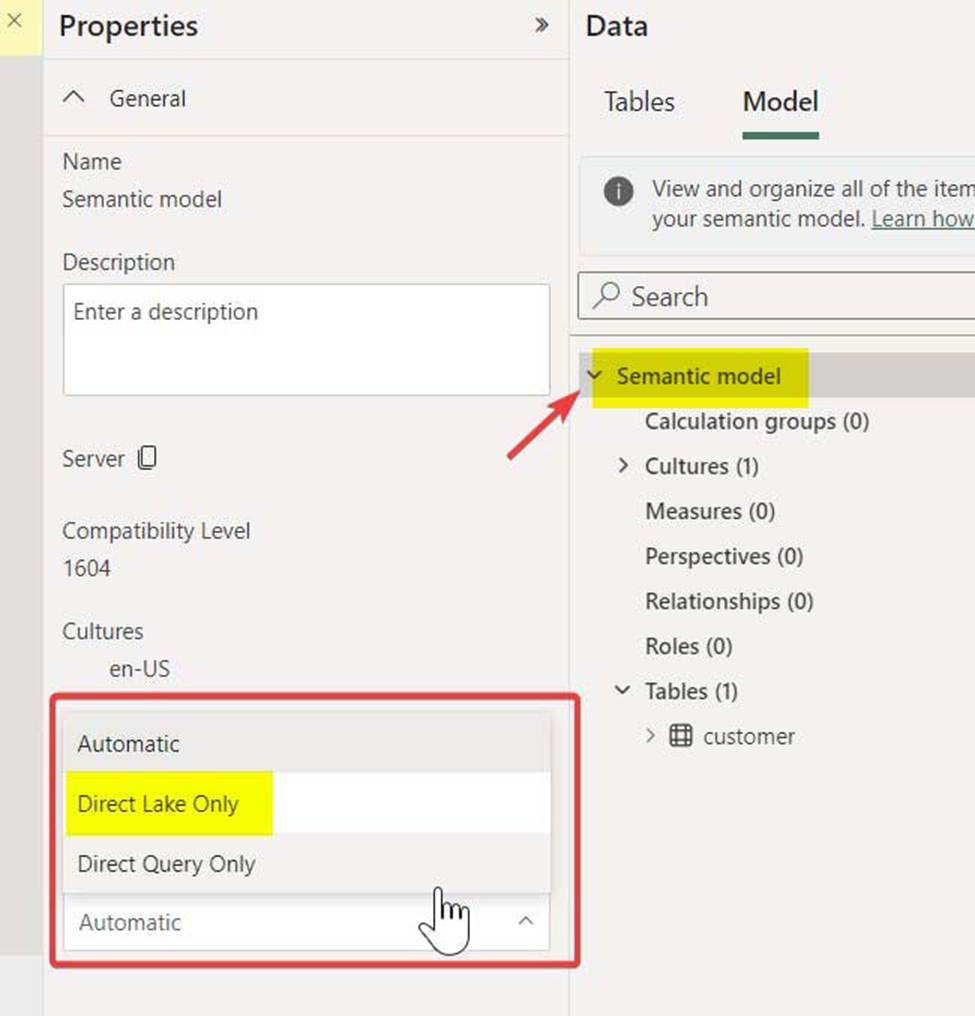
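To make the settings concrete, here is a small Python model of the decision each one implies. It is a teaching sketch, not the TOM API: the enum only mirrors the option names, the third option (DirectQueryOnly) is included for completeness, and `direct_lake_possible` is a hypothetical flag standing in for the engine's own judgment about whether a query can be served in Direct Lake mode.

```python
from enum import Enum


class DirectLakeBehavior(Enum):
    # Illustrative mirror of the option names; not the real TOM enum type.
    AUTOMATIC = "Automatic"
    DIRECT_LAKE_ONLY = "DirectLakeOnly"
    DIRECT_QUERY_ONLY = "DirectQueryOnly"


def resolve_query_mode(behavior: DirectLakeBehavior, direct_lake_possible: bool) -> str:
    """Return the mode a query runs in, or raise if it cannot run at all.

    direct_lake_possible is a hypothetical stand-in for the engine's decision
    about whether this query can be answered in Direct Lake mode.
    """
    if behavior is DirectLakeBehavior.DIRECT_QUERY_ONLY:
        # Every query goes through DirectQuery.
        return "DirectQuery"
    if direct_lake_possible:
        return "DirectLake"
    if behavior is DirectLakeBehavior.AUTOMATIC:
        # Automatic: fall back to DirectQuery without telling the user.
        return "DirectQuery"
    # DirectLakeOnly: fallback is disabled, so the query fails instead.
    raise RuntimeError("Query cannot run in Direct Lake mode and fallback is disabled")


print(resolve_query_mode(DirectLakeBehavior.AUTOMATIC, False))
print(resolve_query_mode(DirectLakeBehavior.DIRECT_LAKE_ONLY, True))
```

Only DirectLakeOnly guarantees that a query never runs in DirectQuery mode, which is why option D answers the question; the trade-off is that such queries fail rather than degrade.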

Note: Power BI Service:

As of February 21, 2024, the web modeling experience in the Power BI service includes a UI option to change the Direct Lake fallback behavior. The default is Automatic.

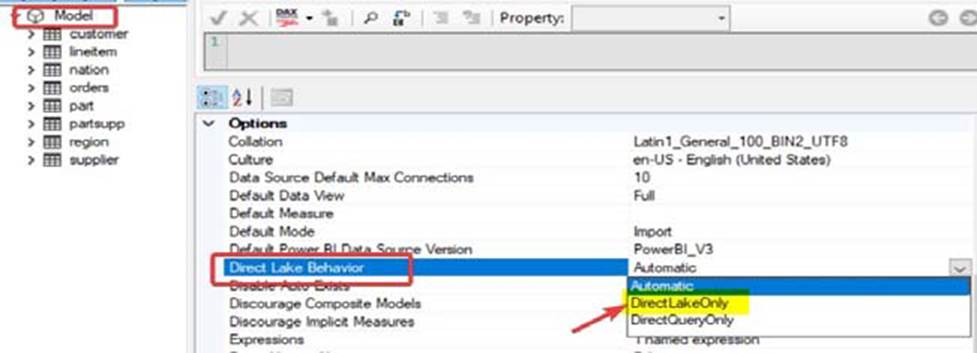

Tabular Editor:

Upgrade to the latest version of Tabular Editor 2 (v2.21.1 at the time of writing), which includes the latest AMO/TOM properties.

Connect to the Direct Lake semantic model by using the XMLA endpoint.

Select Model > Options > Direct Lake Behavior, and change the value from Automatic to DirectLakeOnly.

Save the model.

Reference:

https://blog.tabulareditor.com/2023/11/27/tabular-editor-3-november-2023-release/

https://fabric.guru/controlling-direct-lake-fallback-behavior

You have a Fabric tenant that contains a complex semantic model. The model is based on a star schema and contains many tables, including a fact table named Sales.

You need to visualize a diagram of the model. The diagram must contain only the Sales table and related tables.

What should you use from Microsoft Power BI Desktop?

- A . data categories

- B . Data view

- C . Model view

- D . DAX query view

C

Explanation:

The Model view in Microsoft Power BI Desktop provides a visual representation of the tables in your semantic model and the relationships between them, so you can see the structure of the star schema, including the Sales fact table and its related dimension tables. To limit a diagram to Sales and its related tables, create a new layout in Model view, add the Sales table to it, and then right-click the table and select Add related tables.

You have a Fabric tenant that contains a warehouse named DW1 and a lakehouse named LH1. DW1 contains a table named Sales.Product. LH1 contains a table named Sales.Orders.

You plan to schedule an automated process that will create a new point-in-time (PIT) table named Sales.ProductOrder in DW1. Sales.ProductOrder will be built by using the results of a query that will join Sales.Product and Sales.Orders.

You need to ensure that the types of columns in Sales.ProductOrder match the column types in the source tables. The solution must minimize the number of operations required to create the new table.

Which operation should you use?

- A . INSERT INTO

- B . CREATE TABLE AS SELECT (CTAS)

- C . CREATE TABLE AS CLONE OF

- D . CREATE MATERIALIZED VIEW AS SELECT

B

Explanation:

The CREATE TABLE AS SELECT (CTAS) statement allows you to create a new table based on the result of a SELECT query. This method automatically defines the new table’s columns with the same names and data types as those in the result set of the query, ensuring consistency with the source tables.

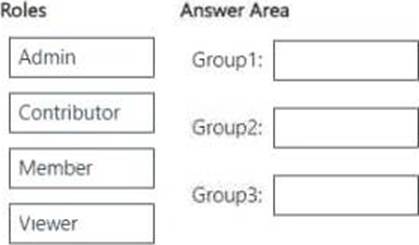

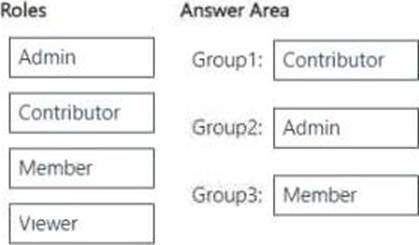

DRAG DROP

You have a Fabric workspace named Workspace1.

You have three groups named Group1, Group2, and Group3.

You need to assign a workspace role to each group.

The solution must follow the principle of least privilege and meet the following requirements:

– Group1 must be able to write data to Workspace1, but be unable to add members to Workspace1.

– Group2 must be able to configure and maintain the settings of Workspace1.

– Group3 must be able to write data and add members to Workspace1, but be unable to delete Workspace1.

Which workspace role should you assign to each group? To answer, drag the appropriate roles to the correct groups. Each role may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point.

Explanation:

Group1 (Can write data but cannot add members) → Contributor

Contributors can write, edit, and manage data, but they cannot manage workspace settings or add or remove users.

Group2 (Can configure and maintain workspace settings) → Admin

Admins have full control over the workspace, including configuring settings, managing permissions, and maintaining security policies.

Group3 (Can write data and add members but cannot delete the workspace) → Member

Members can add other users to the workspace and write data, but they cannot delete the workspace or update workspace settings, which requires the Admin role.

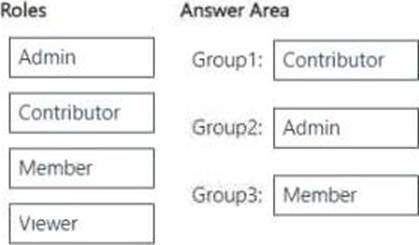
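The least-privilege reasoning above can be expressed as a search: walk the roles from least to most privileged and stop at the first one that has every required capability and none of the forbidden ones. The capability matrix and names such as `write_data` and `configure_settings` are a deliberate simplification for illustration, not the full Fabric permission table.

```python
from collections.abc import Set as AbstractSet

# Simplified, hypothetical capability matrix for the four workspace roles.
ROLE_CAPABILITIES = {
    "Viewer": {"view"},
    "Contributor": {"view", "write_data"},
    "Member": {"view", "write_data", "add_members"},
    "Admin": {"view", "write_data", "add_members", "configure_settings", "delete_workspace"},
}

# Roles ordered from least to most privileged.
ROLE_ORDER = ["Viewer", "Contributor", "Member", "Admin"]


def least_privileged_role(required: AbstractSet[str], forbidden: AbstractSet[str] = frozenset()) -> str:
    """Return the lowest role with every required capability and no forbidden one."""
    for role in ROLE_ORDER:
        caps = ROLE_CAPABILITIES[role]
        if required <= caps and not (forbidden & caps):
            return role
    raise ValueError("No single workspace role satisfies these requirements")


groups = {
    "Group1": least_privileged_role({"write_data"}, {"add_members"}),
    "Group2": least_privileged_role({"configure_settings"}),
    "Group3": least_privileged_role({"write_data", "add_members"}, {"delete_workspace"}),
}
print(groups)
```

Group3 shows why ordering matters: Admin also satisfies "write data and add members", but it is excluded because it can delete the workspace, and Member is reached first anyway.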

Prepare data

Question Set 3

You have a Fabric tenant that contains a machine learning model registered in a Fabric workspace.

You need to use the model to generate predictions by using the PREDICT function in a Fabric notebook.

Which two languages can you use to perform model scoring? Each correct answer presents a complete solution. NOTE: Each correct answer is worth one point.

- A . T-SQL

- B . DAX

- C . Spark SQL

- D . PySpark

CD

Explanation:

Machine learning model scoring with PREDICT in Microsoft Fabric

To invoke the PREDICT function, you can use the Transformer API, the Spark SQL API, or a PySpark user-defined function (UDF).

Note: Microsoft Fabric allows users to operationalize machine learning models with a scalable function called PREDICT, which supports batch scoring in any compute engine. Users can generate batch predictions directly from a Microsoft Fabric notebook or from a given ML model’s item page.

Reference: https://learn.microsoft.com/en-us/fabric/data-science/model-scoring-predict

You have a Fabric workspace named Workspace1.

Workspace1 contains multiple semantic models, including a model named Model1. Model1 is updated by using an XMLA endpoint.

You need to increase the speed of the write operations of the XMLA endpoint.

What should you do?

- A . Delete any unused semantic models from Workspace1.

- B . Select Large semantic model storage format for Workspace1.

- C . Configure Model 1 to use the Direct Lake storage format.

- D . Delete any unused columns from Model1.

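B

Explanation:

Microsoft recommends enabling the large semantic model storage format whenever you plan to use XMLA endpoint-based tools for semantic model write operations, even for models that are not especially large, because the format can improve the performance of XMLA write operations. Selecting Large semantic model storage format for Workspace1 makes it the default storage format for semantic models in the workspace. Configuring Model1 to use Direct Lake (option C) changes how the model reads data from OneLake at query time; it is not a documented way to speed up XMLA write operations.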

You have a query in Microsoft Power BI Desktop that contains two columns named Order_Date and Shipping_Date.

You need to create a column that will calculate the number of days between Order_Date and Shipping_Date for each row.

Which Power Query function should you use?

- A . DateTime.LocalNow

- B . Duration.Days

- C . Duration.From

- D . Date.AddDays

B

Explanation:

Power Query M, Duration.Days

Syntax

Duration.Days(duration as nullable duration) as nullable number

About

Returns the days portion of duration.

Example 1

Extract the number of days between two dates.

Usage

Duration.Days(#date(2022, 3, 4) - #date(2022, 2, 25))

Output

7

Reference: https://learn.microsoft.com/en-us/powerquery-m/duration-days
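For comparison, here is the same per-row calculation sketched in Python: subtracting two dates produces a `timedelta`, whose `.days` attribute plays the role of `Duration.Days`. The sample rows and the `Days_To_Ship` column name are hypothetical. In Power Query itself, the equivalent would typically be a custom column whose formula is `Duration.Days([Shipping_Date] - [Order_Date])`.

```python
from datetime import date


def days_between(order_date: date, shipping_date: date) -> int:
    # date - date yields a timedelta; .days is its whole-day component,
    # analogous to Duration.Days applied to the M duration.
    return (shipping_date - order_date).days


# Hypothetical rows standing in for the Order_Date / Shipping_Date columns.
rows = [
    {"Order_Date": date(2022, 2, 25), "Shipping_Date": date(2022, 3, 4)},
    {"Order_Date": date(2024, 1, 10), "Shipping_Date": date(2024, 1, 12)},
]

# Add the computed column to every row, like a Power Query custom column.
for row in rows:
    row["Days_To_Ship"] = days_between(row["Order_Date"], row["Shipping_Date"])

print([row["Days_To_Ship"] for row in rows])  # [7, 2]
```

The first row reproduces the documented example: February 2022 has 28 days, so February 25 to March 4 is 7 days.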

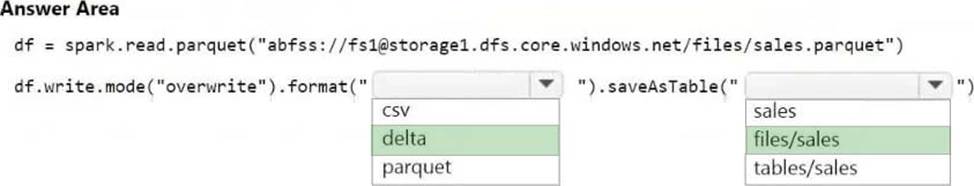

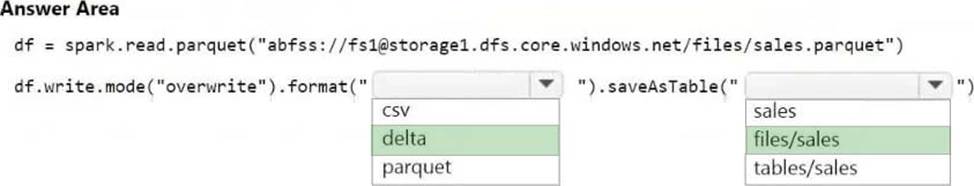

HOTSPOT

You have an Azure Data Lake Storage Gen2 account named storage1 that contains a Parquet file named sales.parquet.

You have a Fabric tenant that contains a workspace named Workspace1.

Using a notebook in Workspace1, you need to load the content of the file to the default lakehouse. The solution must ensure that the content will display automatically as a table named Sales in Lakehouse explorer.

How should you complete the code? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: delta

Use a notebook to load data into your Lakehouse

When you save data in the Lakehouse by using capabilities such as Load to Tables, or any of the methods described in Options to get data into the Fabric Lakehouse, all data is saved in Delta format.

# Keep this line to save the DataFrame as a Delta Lake (Parquet-based) table in the Tables section of the default lakehouse

df.write.mode("overwrite").format("delta").saveAsTable(delta_table_name)

# Keep this line instead to append the data to an existing Delta table

df.write.mode("append").format("delta").saveAsTable(delta_table_name)

Saving the data in Delta format meets the requirement that the content display automatically as a table named Sales in Lakehouse explorer.

Box 2: files/sales

Reference:

https://learn.microsoft.com/en-us/fabric/data-engineering/lakehouse-notebook-load-data

https://learn.microsoft.com/en-us/fabric/data-engineering/lakehouse-and-delta-tables
