Practice Free DVA-C02 Exam Online Questions

A developer is writing unit tests for a new application that will be deployed on AWS. The developer wants to validate all pull requests with unit tests and merge the code with the main branch only when all tests pass.

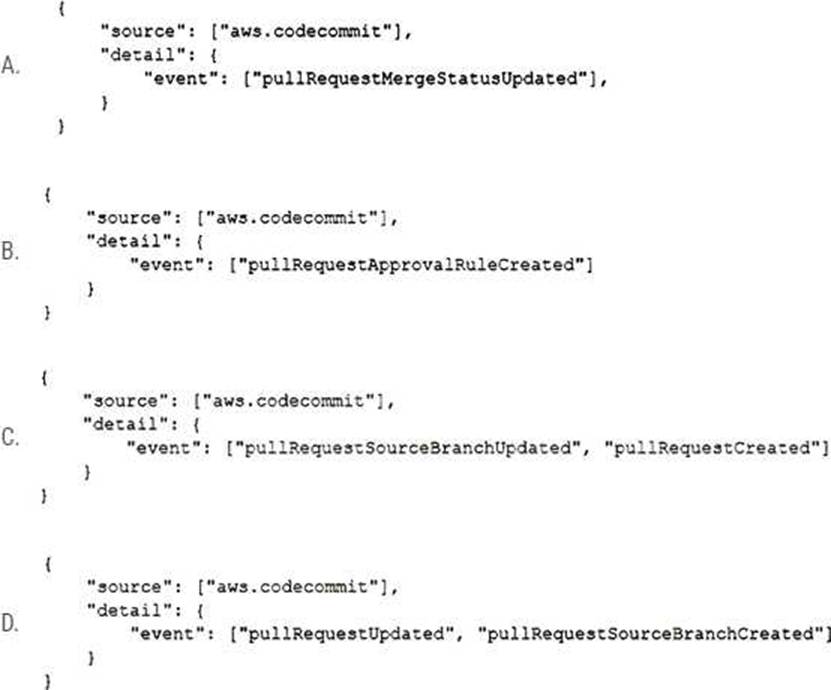

The developer stores the code in AWS CodeCommit and sets up AWS CodeBuild to run the unit tests. The developer creates an AWS Lambda function to start the CodeBuild task. The developer needs to identify the CodeCommit events in an Amazon EventBridge event that can invoke the Lambda function when a pull request is created or updated.

Which CodeCommit event will meet these requirements?

- A . Option A

- B . Option B

- C . Option C

- D . Option D

C

Explanation:

https://aws.amazon.com/blogs/devops/automated-code-review-on-pull-requests-using-aws-codecommit-and-aws-codebuild/
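The option image is not reproduced here, so as a rough illustration only: an EventBridge rule for this scenario would typically filter CodeCommit pull request events on the `pullRequestCreated` and `pullRequestSourceBranchUpdated` event names. The sketch below expresses such a pattern as a Python dictionary and checks sample events against it with a deliberately simplified local matcher. The `matches` helper and the sample events are hypothetical; they are not EventBridge's real matching engine.

```python
# Event pattern sketch for an EventBridge rule (assumed setup, not taken from the question image).
PULL_REQUEST_PATTERN = {
    "source": ["aws.codecommit"],
    "detail-type": ["CodeCommit Pull Request State Change"],
    "detail": {"event": ["pullRequestCreated", "pullRequestSourceBranchUpdated"]},
}


def matches(pattern, event):
    """Simplified matcher: lists match any listed value, dicts recurse."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True


# Hypothetical sample events, trimmed to the fields the pattern inspects.
created_event = {
    "source": "aws.codecommit",
    "detail-type": "CodeCommit Pull Request State Change",
    "detail": {"event": "pullRequestCreated", "pullRequestId": "1"},
}
merge_status_event = {
    "source": "aws.codecommit",
    "detail-type": "CodeCommit Pull Request State Change",
    "detail": {"event": "pullRequestMergeStatusUpdated", "pullRequestId": "1"},
}

print(matches(PULL_REQUEST_PATTERN, created_event))
print(matches(PULL_REQUEST_PATTERN, merge_status_event))
```

Filtering on the event name keeps the Lambda function from starting a build for pull request events that do not change the code under review, such as merge status updates.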

A developer has a legacy application that is hosted on-premises. Other applications hosted on AWS depend on the on-premises application for proper functioning. In case of any application errors, the developer wants to be able to use Amazon CloudWatch to monitor and troubleshoot all applications from one place.

How can the developer accomplish this?

- A . Install an AWS SDK on the on-premises server to automatically send logs to CloudWatch.

- B . Download the CloudWatch agent to the on-premises server. Configure the agent to use IAM user credentials with permissions for CloudWatch.

- C . Upload log files from the on-premises server to Amazon S3 and have CloudWatch read the files.

- D . Upload log files from the on-premises server to an Amazon EC2 instance and have the instance forward the logs to CloudWatch.

B

Explanation:

Amazon CloudWatch is a service that monitors AWS resources and applications. The developer can use CloudWatch to monitor and troubleshoot all applications from one place. To do so, the developer needs to download the CloudWatch agent to the on-premises server and configure the agent to use IAM user credentials with permissions for CloudWatch. The agent will collect logs and metrics from the on-premises server and send them to CloudWatch.

Reference: [What Is Amazon CloudWatch? – Amazon CloudWatch]

[Installing and Configuring the CloudWatch Agent – Amazon CloudWatch]

A developer has written a distributed application that uses microservices. The microservices are running on Amazon EC2 instances. Because of message volume, the developer is unable to match log output from each microservice to a specific transaction. The developer needs to analyze the message flow to debug the application.

Which combination of steps should the developer take to meet this requirement? (Select TWO.)

- A . Download the AWS X-Ray daemon. Install the daemon on an EC2 instance. Ensure that the EC2 instance allows UDP traffic on port 2000.

- B . Configure an interface VPC endpoint to allow traffic to reach the global AWS X-Ray daemon on TCP port 2000.

- C . Enable AWS X-Ray. Configure Amazon CloudWatch to push logs to X-Ray.

- D . Add the AWS X-Ray software development kit (SDK) to the microservices. Use X-Ray to trace requests that each microservice makes.

- E . Set up Amazon CloudWatch metric streams to collect streaming data from the microservices.

A developer is working on an ecommerce application that stores data in an Amazon RDS for MySQL cluster. The developer needs to implement a caching layer for the application to retrieve information about the most viewed products.

Which solution will meet these requirements?

- A . Edit the RDS for MySQL cluster by adding a cache node. Configure the cache endpoint instead of the cluster endpoint in the application.

- B . Create an Amazon ElastiCache (Redis OSS) cluster. Update the application code to use the ElastiCache (Redis OSS) cluster endpoint.

- C . Create an Amazon DynamoDB Accelerator (DAX) cluster in front of the RDS for MySQL cluster. Configure the application to connect to the DAX endpoint instead of the RDS endpoint.

- D . Configure the RDS for MySQL cluster to add a standby instance in a different Availability Zone. Configure the application to read the data from the standby instance.

B

Explanation:

To add a caching layer for frequently requested data (such as “most viewed products”), the AWS-native choice is Amazon ElastiCache, commonly using Redis for low-latency key/value caching, counters, sorted sets, and leaderboard-like patterns. Redis is particularly well-suited for ecommerce “top N” or popularity queries because it supports atomic increments and sorted sets, enabling efficient ranking updates.

Option B correctly introduces an ElastiCache (Redis OSS) cluster and updates the application to read from Redis first (cache-aside or write-through patterns) instead of hitting the RDS MySQL cluster for every request. This offloads read pressure from the database, reduces latency, and improves overall throughput.

Option A is not a valid RDS feature: you do not add a “cache node” to an RDS MySQL cluster as part of RDS itself.

Option C is incorrect because DAX is a caching layer specifically for DynamoDB, not for RDS MySQL.

Option D improves availability via Multi-AZ standby, but the standby is not for read scaling in standard RDS Multi-AZ (it’s primarily for failover). It does not provide a cache and does not solve the performance needs for hot reads.
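The cache-aside read path described for Option B can be sketched roughly as follows. This is a minimal illustration under stated assumptions: `FakeCache` is a hypothetical in-memory stand-in that mimics the `get` and `setex` calls of a Redis client, `load_from_db` stands in for the MySQL query, and the key name and TTL are made up for the example.

```python
import json


class FakeCache:
    """Hypothetical in-memory stand-in for a Redis client (TTL is ignored)."""

    def __init__(self):
        self._data = {}

    def get(self, key):
        return self._data.get(key)

    def setex(self, key, ttl_seconds, value):
        self._data[key] = value


def get_most_viewed(cache, load_from_db, limit=10, ttl_seconds=60):
    """Cache-aside read: try the cache first, fall back to the database."""
    key = f"most_viewed:{limit}"
    cached = cache.get(key)
    if cached is not None:
        return json.loads(cached)
    products = load_from_db(limit)  # cache miss: query the database
    cache.setex(key, ttl_seconds, json.dumps(products))
    return products


db_calls = []


def load_from_db(limit):
    """Stand-in for the RDS for MySQL query; records each call."""
    db_calls.append(limit)
    return [{"id": 1, "name": "widget", "views": 900}][:limit]


cache = FakeCache()
first = get_most_viewed(cache, load_from_db)
second = get_most_viewed(cache, load_from_db)
print(first == second, len(db_calls))
```

Only the first call reaches the database stand-in; the second is served from the cache, which is the read offloading the explanation refers to. A short TTL is one simple way to keep a "most viewed" list reasonably fresh without explicit invalidation.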

A developer wrote an application that uses an AWS Lambda function to asynchronously generate short videos based on requests from customers. This video generation can take up to 10 minutes. After the video is generated, a URL to download the video is pushed to the customer’s web browser. The customer should be able to access these videos for at least 3 hours after generation.

Which solution will meet these requirements?

- A . Store the video in the /tmp folder within the Lambda execution environment. Push a Lambda function URL to the customer.

- B . Store the video in an Amazon EFS file system attached to the function. Generate a presigned URL for the video object and push the URL to the customer.

- C . Store the video in Amazon S3. Generate a presigned URL for the video object and push the URL to the customer.

- D . Store the video in an Amazon CloudFront distribution. Generate a presigned URL for the video object and push the URL to the customer.

C

Explanation:

The customer must be able to download the generated video for at least 3 hours, which requires durable storage that persists beyond the Lambda execution environment. The best fit is Amazon S3 with a presigned URL.

Lambda’s /tmp storage (Option A) is ephemeral and scoped to the execution environment. It is not intended for durable customer downloads and can be deleted when the execution environment is recycled. A Lambda function URL also does not solve durable file hosting and would require the function to serve the content itself.

Option C uses S3 to store the video object durably and then issues a presigned URL that grants time-limited access to that specific object. Presigned URLs are designed for exactly this scenario: allow unauthenticated users temporary access to a private S3 object without making the bucket public. The developer can set the URL expiration to 3 hours (or longer), meeting the access requirement cleanly.

Option B is incorrect because S3 presigned URLs are for S3 objects, not EFS files. EFS does not natively use S3-style presigned URLs for external downloads.

Option D is not the right model: CloudFront can distribute S3 content and supports signed URLs/cookies, but CloudFront is a distribution layer, not an origin storage solution by itself. You would still need to store the video in S3 (or another origin). For the stated requirement, S3 + presigned URL is simpler and has less overhead.

Therefore, store videos in Amazon S3 and provide presigned URLs with a 3-hour expiration.
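As a rough sketch of the presigned URL step, the helper below calls `generate_presigned_url` with a 3-hour expiration. It assumes the caller passes in an S3 client (in a real function this would usually be `boto3.client("s3")`); the `StubS3Client` class, bucket name, and object key are hypothetical and exist only so the example runs without AWS access.

```python
THREE_HOURS_IN_SECONDS = 3 * 60 * 60


def build_download_url(s3_client, bucket, key, expires_in=THREE_HOURS_IN_SECONDS):
    """Return a time-limited GET URL for a private S3 object."""
    return s3_client.generate_presigned_url(
        "get_object",
        Params={"Bucket": bucket, "Key": key},
        ExpiresIn=expires_in,
    )


class StubS3Client:
    """Hypothetical stand-in that mimics the boto3 method's parameters."""

    def generate_presigned_url(self, ClientMethod, Params=None, ExpiresIn=3600, HttpMethod=None):
        # A real client signs the request; this stub only echoes the inputs.
        return (
            f"https://{Params['Bucket']}.s3.amazonaws.com/{Params['Key']}"
            f"?X-Amz-Expires={ExpiresIn}"
        )


url = build_download_url(StubS3Client(), "example-video-bucket", "videos/abc123.mp4")
print(url)
```

One point worth checking in a real deployment: a URL signed with temporary credentials stops working when those credentials expire, even if `ExpiresIn` is longer, so the identity that signs the URL should be chosen with the 3-hour requirement in mind.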

A developer is building an application that includes an AWS Lambda function that is written in .NET Core. The Lambda function’s code needs to interact with Amazon DynamoDB tables and Amazon S3 buckets. The developer must minimize the Lambda function’s deployment time and invocation duration.

Which solution will meet these requirements?

- A . Increase the Lambda function’s memory.

- B . Include the entire AWS SDK for .NET in the Lambda function’s deployment package.

- C . Include only the AWS SDK for .NET modules for DynamoDB and Amazon S3 in the Lambda function’s deployment package.

- D . Configure the Lambda function to download the AWS SDK for .NET from an S3 bucket at runtime.

A social media application is experiencing high volumes of new user requests after a recent marketing campaign. The application is served by an Amazon RDS for MySQL instance. A solutions architect examines the database performance and notices high CPU usage and many "too many connections" errors that lead to failed requests on the database. The solutions architect needs to address the failed requests.

Which solution will meet this requirement?

- A . Deploy an Amazon DynamoDB Accelerator (DAX) cluster. Configure the application to use the DAX cluster.

- B . Deploy an RDS Proxy. Configure the application to use the RDS Proxy.

- C . Migrate the database to an Amazon RDS for PostgreSQL instance.

- D . Deploy an Amazon ElastiCache (Redis OSS) cluster. Configure the application to use the ElastiCache cluster.

B

Explanation:

Why Option B is correct: RDS Proxy pools and shares database connections, which reduces connection overhead on the RDS instance and mitigates "too many connections" errors.

Why the other options are incorrect:

Option A: DAX is for DynamoDB, not RDS.

Option C: Migration to PostgreSQL does not address the current issue.

Option D: ElastiCache is useful for caching but does not solve connection pool issues.

Reference: Amazon RDS Proxy (AWS Documentation)

A company notices that credentials that the company uses to connect to an external software as a service (SaaS) vendor are stored in a configuration file as plaintext. The developer needs to secure the API credentials and enforce automatic credentials rotation on a quarterly basis.

Which solution will meet these requirements MOST securely?

- A . Use AWS Key Management Service (AWS KMS) to encrypt the configuration file. Decrypt the configuration file when users make API calls to the SaaS vendor. Enable rotation.

- B . Retrieve temporary credentials from AWS Security Token Service (AWS STS) every 15 minutes. Use the temporary credentials when users make API calls to the SaaS vendor.

- C . Store the credentials in AWS Secrets Manager and enable rotation. Configure the API to have Secrets Manager access.

- D . Store the credentials in AWS Systems Manager Parameter Store and enable rotation. Retrieve the credentials when users make API calls to the SaaS vendor.

C

Explanation:

Option C is correct. Storing the credentials in AWS Secrets Manager, enabling rotation, and granting the API access to Secrets Manager meets the requirements most securely because Secrets Manager is designed to store and manage secrets such as API credentials. It helps protect access to applications, services, and IT resources by letting you rotate, manage, and retrieve secrets throughout their lifecycle. Secrets such as passwords, database connection strings, API keys, and license codes are stored as encrypted values, and automatic rotation can be configured on a schedule that you specify. The application retrieves the secret through the AWS SDK or CLI when it needs it, which avoids storing credentials in plaintext files or hardcoding them in code.
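Retrieving the credentials at call time might look roughly like the sketch below. It assumes the caller supplies a Secrets Manager client (typically `boto3.client("secretsmanager")`) and that the secret is stored as a JSON string. The secret name, the `api_key` field, and the `StubSecretsClient` class are hypothetical and are only there to make the example runnable offline.

```python
import json


def load_saas_credentials(secrets_client, secret_id):
    """Fetch the current secret version and parse it as JSON."""
    response = secrets_client.get_secret_value(SecretId=secret_id)
    return json.loads(response["SecretString"])


class StubSecretsClient:
    """Hypothetical stand-in that mimics the shape of the boto3 response."""

    def __init__(self, secrets):
        self._secrets = secrets

    def get_secret_value(self, SecretId):
        return {"Name": SecretId, "SecretString": json.dumps(self._secrets[SecretId])}


stub = StubSecretsClient({"prod/saas-vendor/api": {"api_key": "example-key"}})
credentials = load_saas_credentials(stub, "prod/saas-vendor/api")
print(sorted(credentials.keys()))
```

Reading the secret on demand, rather than copying it into a configuration file, means the application picks up the new value after each rotation without a redeploy.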

A developer has created an AWS Lambda function that is written in Python. The Lambda function reads data from objects in Amazon S3 and writes data to an Amazon DynamoDB table. The function is successfully invoked from an S3 event notification when an object is created. However, the function fails when it attempts to write to the DynamoDB table.

What is the MOST likely cause of this issue?

- A . The Lambda function’s concurrency limit has been exceeded.

- B . The DynamoDB table requires a global secondary index (GSI) to support writes.

- C . The Lambda function does not have IAM permissions to write to DynamoDB.

- D . The DynamoDB table is not running in the same Availability Zone as the Lambda function.

C

Explanation:

Because the Lambda function is successfully triggered by the S3 event notification, the invocation path (S3 → Lambda) is working correctly. The failure occurs specifically when the function tries to write to DynamoDB, which strongly indicates an authorization problem rather than an invocation, scaling, or infrastructure issue.

In AWS, a Lambda function interacts with other services by using its execution role (an IAM role). AWS documentation explains that a Lambda function must have explicit IAM permissions to call downstream services such as DynamoDB. To write items, the role typically needs actions like dynamodb:PutItem (and sometimes dynamodb:UpdateItem or dynamodb:BatchWriteItem, depending on code behavior) on the target table resource ARN. If these permissions are missing or scoped incorrectly, DynamoDB returns an AccessDeniedException (or similar) and the function fails at the write step.

Option A is unlikely because exceeding concurrency would typically prevent invocation or cause throttling at the Lambda service level, not selectively fail only at DynamoDB write time after the function begins executing.

Option B is incorrect: DynamoDB does not require a GSI to support writes. GSIs are for alternate query access patterns, not mandatory for write operations.

Option D is incorrect because DynamoDB is a regional service, not tied to a single Availability Zone, and Lambda does not need to be “in the same AZ” to access it.

Therefore, the most likely cause is that the Lambda execution role lacks the necessary IAM permissions to perform DynamoDB write operations.
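For illustration, a policy statement granting the write actions named above could be built like this. It is a sketch only: the account ID, Region, and table name in the ARN are placeholders, and the exact set of actions depends on which DynamoDB calls the function code makes.

```python
import json


def dynamodb_write_policy(table_arn):
    """Build an IAM policy document allowing writes to a single table."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "dynamodb:PutItem",
                    "dynamodb:UpdateItem",
                    "dynamodb:BatchWriteItem",
                ],
                "Resource": table_arn,
            }
        ],
    }


# Placeholder ARN; substitute the real account, Region, and table name.
policy = dynamodb_write_policy(
    "arn:aws:dynamodb:us-east-1:123456789012:table/ExampleTable"
)
print(json.dumps(policy, indent=2))
```

Scoping `Resource` to the one table ARN, instead of `*`, keeps the execution role limited to what the function actually needs.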

A developer is creating an application that includes an Amazon API Gateway REST API in the us-east-2 Region. The developer wants to use Amazon CloudFront and a custom domain name for the API. The developer has acquired an SSL/TLS certificate for the domain from a third-party provider.

How should the developer configure the custom domain for the application?

- A . Import the SSL/TLS certificate into AWS Certificate Manager (ACM) in the same Region as the API. Create a DNS A record for the custom domain.

- B . Import the SSL/TLS certificate into CloudFront. Create a DNS CNAME record for the custom domain.

- C . Import the SSL/TLS certificate into AWS Certificate Manager (ACM) in the same Region as the API. Create a DNS CNAME record for the custom domain.

- D . Import the SSL/TLS certificate into AWS Certificate Manager (ACM) in the us-east-1 Region. Create a DNS CNAME record for the custom domain.

D

Explanation:

Amazon API Gateway is a service that enables developers to create, publish, maintain, monitor, and secure APIs at any scale. Amazon CloudFront is a content delivery network (CDN) service that can improve the performance and security of web applications. The developer can use CloudFront and a custom domain name for the API Gateway REST API. To do so, the developer needs to import the SSL/TLS certificate into AWS Certificate Manager (ACM) in the us-east-1 Region. This is because CloudFront requires certificates from ACM to be in this Region. The developer also needs to create a DNS CNAME record for the custom domain that points to the CloudFront distribution.

Reference: [What Is Amazon API Gateway? – Amazon API Gateway]

[What Is Amazon CloudFront? – Amazon CloudFront]

[Custom Domain Names for APIs – Amazon API Gateway]