Practice Free DVA-C02 Exam Online Questions

A developer wants to deploy a new version of an AWS Elastic Beanstalk application. During deployment the application must maintain full capacity and avoid service interruption. Additionally, the developer must minimize the cost of additional resources that support the deployment.

Which deployment method should the developer use to meet these requirements?

- A . All at once

- B . Rolling with additional batch

- C . Blue/green

- D . Immutable

B

Explanation:

This solution will meet the requirements by using a rolling with additional batch deployment method. Elastic Beanstalk first launches one extra batch of instances, then deploys the new version to the existing instances one batch at a time, and terminates the extra batch when the deployment is complete. Because the extra batch stands in for each batch that is taken out of service, the application maintains full capacity and avoids service interruption. Because only one additional batch is launched (rather than a full duplicate fleet), the cost of additional resources that support the deployment stays low.
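The capacity argument can be checked with a simplified model. The sketch below assumes a fleet of identical instances that launch and pass health checks instantly, and uses a hypothetical `simulate_rolling_with_additional_batch` helper (not an Elastic Beanstalk API) to track how many instances are in service and how many are billed at each step. In a real environment, the policy is selected with the `DeploymentPolicy` option in the `aws:elasticbeanstalk:command` namespace.

```python
from typing import List, Tuple

Step = Tuple[str, int, int]  # (description, instances in service, instances billed)


def simulate_rolling_with_additional_batch(fleet: int, batch: int) -> List[Step]:
    """Simplified model of a rolling-with-additional-batch deployment.

    Assumes instances launch and pass health checks instantly; ignores
    load balancer draining and instance launch time.
    """
    old, new = fleet, 0
    steps: List[Step] = []

    # 1. Launch one extra batch running the new version before touching the fleet.
    new += batch
    steps.append(("launch extra batch", old + new, old + new))

    # 2. Update the original instances one batch at a time. The extra batch
    #    covers for the instances that are out of service during each update.
    while old > 0:
        n = min(batch, old)
        steps.append((f"update {n} instance(s)", old - n + new, old + new))
        old -= n
        new += n

    # 3. Terminate the extra batch, returning to the original fleet size.
    new -= batch
    steps.append(("terminate extra batch", new, new))
    return steps


if __name__ == "__main__":
    for description, in_service, billed in simulate_rolling_with_additional_batch(4, 2):
        print(f"{description:<22} in service={in_service} billed={billed}")
```

In this model, a fleet of 4 with a batch size of 2 never drops below 4 instances in service and peaks at 6 billed instances, whereas an immutable deployment would briefly run a second full set of 4.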

Option A is not optimal because it will use an all at once deployment method, which deploys the new version of the application to all instances simultaneously, which may cause service interruption or downtime during deployment.

Option C is not optimal because it will use a blue/green deployment method, which deploys the new version of the application to a separate environment and then swaps URLs with the original environment, which may incur more costs for additional resources that support the deployment.

Option D is not optimal because it will use an immutable deployment method, which launches a full set of new instances running the new version in a temporary Auto Scaling group alongside the existing instances. This doubles the number of instances during the deployment, so it incurs more cost for additional resources than launching a single extra batch.

Reference: AWS Elastic Beanstalk Deployment Policies

A company wants to use AWS AppConfig to gradually deploy a new feature to 15% of users to test the feature before a full deployment.

Which solution will meet this requirement with the LEAST operational overhead?

- A . Set up a custom script within the application to randomly select 15% of users. Assign a flag for the new feature to the selected users.

- B . Create separate AWS AppConfig feature flags for both groups of users. Configure the flags to target 15% of users.

- C . Create an AWS AppConfig feature flag. Define a variant for the new feature, and create a rule to target 15% of users.

- D . Use AWS AppConfig to create a feature flag without variants. Implement a custom traffic-splitting mechanism in the application code.

C

Explanation:

AWS AppConfig Feature Flags are designed to release features safely with minimal code changes. The lowest operational overhead approach is to use a single feature flag and configure it to deliver the feature to a percentage of users through built-in targeting rules and variants.

With option C, the developer creates one AppConfig feature flag and defines a variant that represents the new feature behavior (for example, enabled=true or a “newExperience” variant). Then the developer creates a rule in AppConfig to target 15% of users. AppConfig feature flags support rules that can evaluate attributes (such as user IDs or other contextual attributes supplied by the application) and apply percentage-based rollout. This avoids building and maintaining custom rollout logic in the application.
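The targeting rule lives in AppConfig, so the application does not need rollout code. To show the kind of logic that options A and D would force the team to write and maintain by hand, the sketch below illustrates deterministic percentage bucketing in general: hash a stable attribute such as a user ID into one of 100 buckets and enable the variant for buckets below the threshold. The hashing scheme and the `in_rollout` helper are assumptions for illustration, not AppConfig's internal algorithm.

```python
import hashlib


def bucket_for(user_id: str, flag_name: str) -> int:
    """Map a user to a stable bucket in [0, 100) for a given flag."""
    # Including the flag name gives different flags independent slices of users.
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def in_rollout(user_id: str, flag_name: str, percent: int) -> bool:
    """True if the user falls inside the first `percent` buckets."""
    return bucket_for(user_id, flag_name) < percent


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    enabled = sum(in_rollout(u, "newExperience", 15) for u in users)
    print(f"{enabled} of {len(users)} users see the new feature")
```

Because the bucket is derived from the user ID, the same user gets the same answer on every request, which a purely random selection (option A) does not guarantee.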

Options A and D both require implementing and maintaining custom traffic splitting in code, which increases complexity and operational burden, and risks inconsistent behavior across clients and services.

Option B adds extra configuration overhead and complexity by managing multiple feature flags for “groups” instead of using a single flag with a variant and rule. A single flag with variants is the clean, scalable pattern.

Therefore, create one AppConfig feature flag, define a variant, and configure a rule to target 15% of users.

A developer is building a microservice that uses AWS Lambda to process messages from an Amazon Simple Queue Service (Amazon SQS) standard queue. The Lambda function calls external APIs to enrich the SQS message data before loading the data into an Amazon Redshift data warehouse. The SQS queue must handle a maximum of 1,000 messages per second.

During initial testing, the Lambda function repeatedly inserted duplicate data into the Amazon Redshift table. The duplicate data led to a problem with data analysis. All duplicate messages were submitted to the queue within 1 minute of each other.

How should the developer resolve this issue?

- A . Create an SQS FIFO queue. Enable message deduplication on the SQS FIFO queue.

- B . Reduce the maximum Lambda concurrency that the SQS queue can invoke.

- C . Use Lambda’s temporary storage to keep track of processed message identifiers.

- D . Configure a message group ID for every sent message. Enable message deduplication on the SQS standard queue.

A developer is building an application that processes a stream of user-supplied data. The data stream must be consumed by multiple Amazon EC2-based processing applications in parallel and in real time. Each processor must be able to resume without losing data if there is a service interruption. The application architect plans to add other processors in the near future and wants to minimize the amount of data duplication involved.

Which solution will satisfy these requirements?

- A . Publish the data to Amazon Simple Queue Service (Amazon SQS).

- B . Publish the data to Amazon Data Firehose.

- C . Publish the data to Amazon EventBridge.

- D . Publish the data to Amazon Kinesis Data Streams.

A company uses more than 100 AWS Lambda functions to handle application services. One Lambda function is critical and must always run successfully. The company notices that occasionally, the critical Lambda function does not initiate. The company investigates the issue and discovers instances of the Lambda TooManyRequestsException: Rate Exceeded error in Amazon CloudWatch logs. Upon further review of the logs, the company notices that some of the non-critical functions run properly while the critical function fails. A developer must resolve the errors and ensure that the critical Lambda function runs successfully.

Which solution will meet these requirements with the LEAST operational overhead?

- A . Configure reserved concurrency for the critical Lambda function. Set reserved concurrent executions to the appropriate level.

- B . Configure provisioned concurrency for the critical Lambda function. Set provisioned concurrent executions to the appropriate level.

- C . Configure CloudWatch alarms for TooManyRequestsException errors. Add the critical Lambda function as an alarm state change action to invoke the critical function again after a failure.

- D . Configure CloudWatch alarms for TooManyRequestsException errors. Add Amazon EventBridge as an action for the alarm state change. Use EventBridge to invoke the critical function again after a failure.

A

Explanation:

Reserved concurrency guarantees a specific number of concurrent executions for a critical Lambda function. This ensures that the critical function always has sufficient resources to execute, even if other functions are consuming concurrency.
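The effect of a reservation can be illustrated with a toy model of the Regional concurrency pool. The sketch assumes the default quota of 1,000 concurrent executions and serves requests first come, first served in dictionary order; the `allocate` helper and the function names are hypothetical. It also enforces Lambda's rule that at least 100 units of concurrency must remain unreserved.

```python
from typing import Dict, Tuple

MIN_UNRESERVED = 100  # Lambda keeps at least 100 units for functions without a reservation


def allocate(
    account_limit: int, reserved: Dict[str, int], demand: Dict[str, int]
) -> Tuple[Dict[str, int], Dict[str, int]]:
    """Return (running, throttled) concurrent executions per function.

    Toy model: requests are served in the dictionary order of `demand`.
    """
    shared_pool = account_limit - sum(reserved.values())
    if shared_pool < MIN_UNRESERVED:
        raise ValueError("reservations must leave at least 100 unreserved units")

    running: Dict[str, int] = {}
    throttled: Dict[str, int] = {}
    for fn, wanted in demand.items():
        if fn in reserved:
            # Reserved slice: no other function can use it, and this
            # function cannot scale beyond it.
            granted = min(wanted, reserved[fn])
        else:
            # Unreserved functions compete for whatever is left in the shared pool.
            granted = min(wanted, shared_pool)
            shared_pool -= granted
        running[fn] = granted
        # Excess requests are throttled (TooManyRequestsException for synchronous calls).
        throttled[fn] = wanted - granted
    return running, throttled


if __name__ == "__main__":
    demand = {"batch-jobs": 1000, "critical": 20}
    print(allocate(1000, {}, demand))
    print(allocate(1000, {"critical": 50}, demand))
```

Without a reservation, the busy non-critical function takes the whole pool and all 20 critical invocations are throttled; with 50 units reserved, the critical function runs all 20 and the other function absorbs the throttling. In practice, the setting is applied with the `PutFunctionConcurrency` API, for example `aws lambda put-function-concurrency --function-name <name> --reserved-concurrent-executions <n>`.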

Why Option A:

Ensures Function Availability: Reserved concurrency isolates the critical Lambda function from other functions.

Low Overhead: Configuring reserved concurrency is straightforward and requires minimal setup.

Why Not Other Options:

Option B: Provisioned concurrency is ideal for reducing cold starts, not for managing execution limits.

Option C & D: Alarms and re-invocation mechanisms add complexity without resolving the root cause.

Reference: Managing Concurrency for AWS Lambda

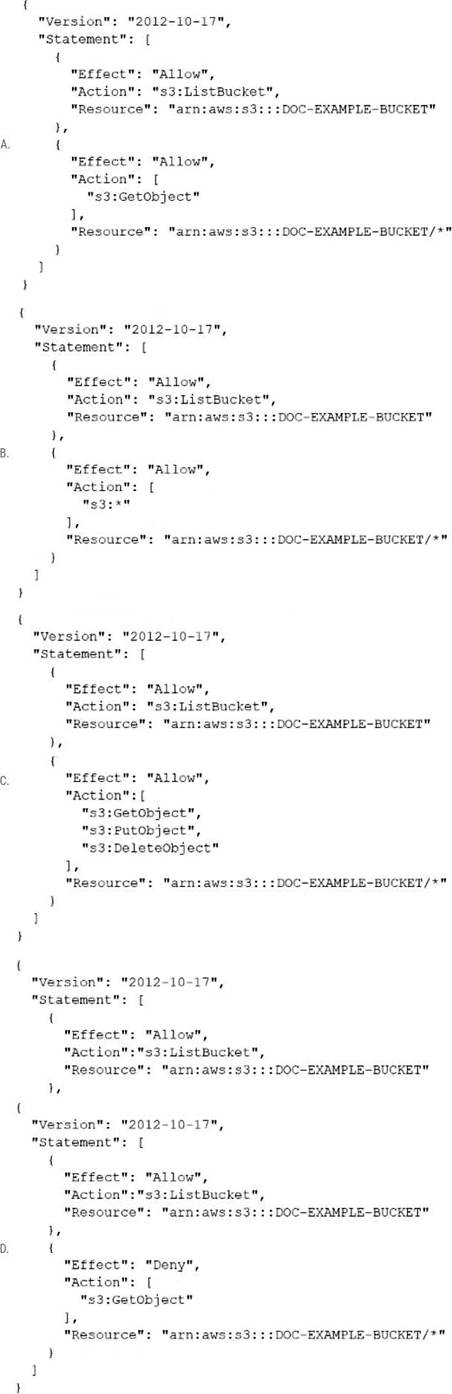

A company has an online web application that includes a product catalog. The catalog is stored in an Amazon S3 bucket that is named DOC-EXAMPLE-BUCKET. The application must be able to list the objects in the S3 bucket and download objects, with access granted through an IAM policy.

Which policy allows MINIMUM access to meet these requirements?

- A . Option A

- B . Option B

- C . Option C

- D . Option D

A company is using Amazon RDS as the backend database for its application. After a recent marketing campaign, a surge of read requests to the database increased the latency of data retrieval from the database.

The company has decided to implement a caching layer in front of the database. The cached content must be encrypted and must be highly available.

Which solution will meet these requirements?

- A . Amazon CloudFront

- B . Amazon ElastiCache for Memcached

- C . Amazon ElastiCache for Redis in cluster mode

- D . Amazon DynamoDB Accelerator (DAX)

C

Explanation:

This solution meets the requirements because Amazon ElastiCache for Redis supports encryption at rest and in transit, and cluster mode partitions data across multiple shards, each with a primary node and replica nodes that can be placed in different Availability Zones with automatic failover. The result is an encrypted, highly available caching layer that can also scale horizontally to increase cache capacity. Amazon ElastiCache for Memcached does not replicate data or fail over automatically, so it cannot meet the high-availability requirement. Amazon CloudFront is a content delivery network that is not suitable for caching database queries, and Amazon DynamoDB Accelerator (DAX) is a caching service that works only with DynamoDB tables.
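Horizontal scaling in cluster mode works by splitting the keyspace into 16,384 hash slots and assigning ranges of slots to shards. The sketch below implements the slot calculation described in the Redis Cluster specification (CRC16 of the key, modulo 16,384, with `{...}` hash tags forcing related keys into the same slot). The even, contiguous three-shard layout in `shard_for` is a hypothetical example; ElastiCache manages the real slot assignment.

```python
SLOT_COUNT = 16384


def crc16_xmodem(data: bytes) -> int:
    """CRC-16/XMODEM (polynomial 0x1021, initial value 0), as used by Redis Cluster."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    """Hash slot for a key, honoring {hash tags}."""
    raw = key.encode("utf-8")
    start = raw.find(b"{")
    if start != -1:
        end = raw.find(b"}", start + 1)
        if end > start + 1:  # only a non-empty tag replaces the full key
            raw = raw[start + 1:end]
    return crc16_xmodem(raw) % SLOT_COUNT


def shard_for(key: str, shard_count: int = 3) -> int:
    """Hypothetical layout: slots split into equal contiguous ranges per shard."""
    return key_slot(key) * shard_count // SLOT_COUNT


if __name__ == "__main__":
    for key in ("product:42", "{cart:7}.items", "{cart:7}.total"):
        print(key, key_slot(key), shard_for(key))
```

Keys that share a hash tag, such as the two `{cart:7}` keys, always land on the same shard, which keeps multi-key operations on related data possible in cluster mode.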

Reference: [Amazon ElastiCache for Redis Features], [Choosing a Cluster Engine]

An application uses Lambda functions to extract metadata from files uploaded to an S3 bucket; the metadata is stored in Amazon DynamoDB. The application starts behaving unexpectedly, and the developer wants to examine the logs of the Lambda function code for errors.

Based on this system configuration, where would the developer find the logs?

- A . Amazon S3

- B . AWS CloudTrail

- C . Amazon CloudWatch

- D . Amazon DynamoDB

C

Explanation:

Amazon CloudWatch is the service that collects and stores logs from AWS Lambda functions. The developer can use CloudWatch Logs Insights to query and analyze the logs for errors and metrics.
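Nothing special is needed to get function output into CloudWatch: in the Python runtime, anything written with `print` or the standard `logging` module is sent to CloudWatch Logs, by default in a log group named `/aws/lambda/<function-name>`. The handler below is a minimal sketch of the S3-triggered function in this question; `extract_metadata` is a hypothetical placeholder, and the DynamoDB write is omitted. Logging each object key before processing, and using `logger.exception` on failure, makes errors easy to locate with CloudWatch Logs Insights.

```python
import logging
from urllib.parse import unquote_plus

logger = logging.getLogger()
logger.setLevel(logging.INFO)


def extract_metadata(bucket: str, key: str) -> dict:
    """Hypothetical placeholder for the real metadata extraction."""
    if not key:
        raise ValueError("object key is empty")
    return {"bucket": bucket, "key": key}


def handler(event, context):
    keys = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys in S3 event notifications are URL-encoded.
        key = unquote_plus(record["s3"]["object"]["key"])
        logger.info("Processing s3://%s/%s", bucket, key)
        try:
            metadata = extract_metadata(bucket, key)
        except Exception:
            # Writes the message plus the full traceback to CloudWatch Logs.
            logger.exception("Metadata extraction failed for s3://%s/%s", bucket, key)
            raise  # re-raise so the invocation is recorded as an error
        keys.append(metadata["key"])
    return {"processed": len(keys), "keys": keys}
```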

Option A is not correct because Amazon S3 is a storage service that does not store Lambda function logs.

Option B is not correct because AWS CloudTrail is a service that records API calls and events for AWS services, not Lambda function logs.

Option D is not correct because Amazon DynamoDB is a database service that does not store Lambda function logs.

Reference: AWS Lambda Monitoring, [CloudWatch Logs Insights]

A company is building an application for stock trading. The application needs sub-millisecond latency for processing trade requests. The company uses Amazon DynamoDB to store all the trading data that is used to process each trading request. A development team performs load testing on the application and finds that the data retrieval time is higher than expected. The development team needs a solution that reduces the data retrieval time with the least possible effort.

Which solution meets these requirements?

- A . Add local secondary indexes (LSIs) for the trading data.

- B . Store the trading data in Amazon S3 and use S3 Transfer Acceleration.

- C . Add retries with exponential backoff for DynamoDB queries.

- D . Use DynamoDB Accelerator (DAX) to cache the trading data.

D

Explanation:

This solution will meet the requirements by using DynamoDB Accelerator (DAX), which is a fully managed, highly available, in-memory cache for DynamoDB that delivers up to a 10 times performance improvement – from milliseconds to microseconds – even at millions of requests per second. The developer can use DAX to cache the trading data that is used to process each trading request, which will reduce the data retrieval time with the least possible effort.
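DAX sits in front of the table as a read-through cache, and its client supports the same data-plane operations as the DynamoDB client, so application changes are small. The sketch below models only the read-through idea with a hypothetical `ReadThroughCache` class and an in-memory stand-in for the table; it is not the DAX client. The 300-second TTL mirrors what is assumed here to be DAX's default item cache TTL of 5 minutes, which is worth confirming against the cluster's parameter group.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class ReadThroughCache:
    """Serve reads from memory; fall back to the backing store on a miss or expiry."""

    def __init__(
        self,
        loader: Callable[[Hashable], Any],
        ttl_seconds: float = 300.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._loader = loader
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[Hashable, Tuple[Any, float]] = {}

    def get(self, key: Hashable) -> Any:
        now = self._clock()
        cached = self._entries.get(key)
        if cached is not None and cached[1] > now:
            return cached[0]  # cache hit: no round trip to the table
        value = self._loader(key)  # cache miss or expired entry: read from the table
        self._entries[key] = (value, now + self._ttl)
        return value


if __name__ == "__main__":
    table = {"ABC": {"price": 100}}  # hypothetical in-memory stand-in for the DynamoDB table
    cache = ReadThroughCache(lambda symbol: table[symbol])
    print(cache.get("ABC"))  # first call reads from the "table"
    print(cache.get("ABC"))  # second call is served from memory
```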

Option A is not optimal because it will add local secondary indexes (LSIs) for the trading data, which may not improve the performance or reduce the latency of data retrieval, as LSIs are stored on the same partition as the base table and share the same provisioned throughput.

Option B is not optimal because it will store the trading data in Amazon S3 and use S3 Transfer Acceleration, which is a feature that enables fast, easy, and secure transfers of files over long distances between S3 buckets and clients, not between DynamoDB and clients.

Option C is not optimal because it will add retries with exponential backoff for DynamoDB queries, which is a strategy to handle transient errors by retrying failed requests with increasing delays, not by reducing data retrieval time.
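Backoff helps a throttled client eventually succeed, but each retry adds waiting time on top of the original request, so it cannot lower retrieval latency. The sketch below computes a capped exponential schedule with a hypothetical `backoff_delays_ms` helper; the base delay and cap are illustrative values, and the random jitter that the AWS SDKs add is omitted.

```python
from typing import List


def backoff_delays_ms(base_ms: int, retries: int, cap_ms: int) -> List[int]:
    """Delay before each retry: base * 2^attempt, never exceeding cap."""
    return [min(cap_ms, base_ms * 2 ** attempt) for attempt in range(retries)]


if __name__ == "__main__":
    delays = backoff_delays_ms(base_ms=50, retries=4, cap_ms=20_000)
    print(delays, "total extra wait:", sum(delays), "ms")
```

Four retries starting at 50 ms add up to 750 ms of waiting (50 + 100 + 200 + 400) in the worst case, which moves further away from the sub-millisecond target rather than toward it.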

Reference: [DynamoDB Accelerator (DAX)], [Local Secondary Indexes]

A company is running a custom application on a set of on-premises Linux servers that are accessed using Amazon API Gateway. AWS X-Ray tracing has been enabled on the API test stage.

How can a developer enable X-Ray tracing on the on-premises servers with the LEAST amount of configuration?

- A . Install and run the X-Ray SDK on the on-premises servers to capture and relay the data to the X-Ray service.

- B . Install and run the X-Ray daemon on the on-premises servers to capture and relay the data to the X-Ray service.

- C . Capture incoming requests on-premises and configure an AWS Lambda function to pull, process, and relay relevant data to X-Ray using the PutTraceSegments API call.

- D . Capture incoming requests on-premises and configure an AWS Lambda function to pull, process, and relay relevant data to X-Ray using the PutTelemetryRecords API call.

B

Explanation:

The X-Ray daemon is an application that listens for trace data sent by the X-Ray SDK (by default on UDP port 2000) and relays it to the X-Ray service. AWS provides the daemon for Linux, Windows, and macOS, and it can run on premises as well as on AWS, provided that it has AWS credentials with permission to upload trace data. The developer can install and run the X-Ray daemon on the on-premises servers to capture and relay the data to the X-Ray service with minimal configuration. The X-Ray SDK is used to instrument the application code; it sends trace data to the daemon rather than relaying it to the X-Ray service itself. The Lambda function solutions are more complex and require additional configuration.
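The division of labor between the SDK and the daemon shows up in the wire format. An instrumented application sends each segment document as a UDP datagram, prefixed with a small JSON header, to the daemon (by default at `127.0.0.1:2000`); the daemon batches segments and uploads them to X-Ray with the `PutTraceSegments` API. The sketch below builds such a datagram by hand only to show what the SDK does internally; the segment name `on-prem-api` is hypothetical, and a real application would use the X-Ray SDK instead.

```python
import json
import secrets
import socket
import time
from typing import Optional

DAEMON_ADDRESS = ("127.0.0.1", 2000)  # default X-Ray daemon UDP listener
HEADER = '{"format": "json", "version": 1}\n'


def new_trace_id(epoch_seconds: float) -> str:
    """Trace ID format: 1-<8 hex digits of epoch time>-<24 random hex digits>."""
    return f"1-{int(epoch_seconds):08x}-{secrets.token_hex(12)}"


def build_datagram(name: str, start: float, end: float, trace_id: Optional[str] = None) -> bytes:
    segment = {
        "name": name,
        "id": secrets.token_hex(8),  # 64-bit segment ID as 16 hex digits
        "trace_id": trace_id or new_trace_id(start),
        "start_time": start,
        "end_time": end,
    }
    return (HEADER + json.dumps(segment)).encode("utf-8")


def send_to_daemon(datagram: bytes, address=DAEMON_ADDRESS) -> None:
    # Fire-and-forget UDP; the daemon relays the segment to the X-Ray service.
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(datagram, address)


if __name__ == "__main__":
    start = time.time()
    # ... handle a request here ...
    send_to_daemon(build_datagram("on-prem-api", start, time.time()))
```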

Reference: [AWS X-Ray concepts – AWS X-Ray]

[Setting up AWS X-Ray – AWS X-Ray]