Practice Free UIPATH-ADPV1 Exam Online Questions

Which of the following examples accurately demonstrates the correct usage of AI Computer Vision features in a UiPath project?

- A . Employing AI Computer Vision to identify and interact with UI elements in a remote desktop application with low quality or scaling issues.

- B . Utilizing AI Computer Vision to train a custom machine learning model to recognize specific patterns in data.

- C . Using AI Computer Vision to extract plain text from a scanned PDF document and store the output in a string variable.

- D . Applying AI Computer Vision to perform sentiment analysis on a provided text string and displaying the result.

A

Explanation:

AI Computer Vision is a UiPath feature that enables the automation of remote applications or desktops, such as Citrix Virtual Apps, Windows Remote Desktop, or VMware Horizon, where native selectors are often unavailable or unreliable. Native selectors are expressions that identify UI elements reliably and accurately, without relying on OCR or image recognition activities1. Instead of selectors, AI Computer Vision uses a mix of AI, OCR, text fuzzy-matching, and an anchoring system to visually locate elements on the screen and interact with them via UiPath Robots, simulating human interaction2. It is especially useful when UI elements suffer from low quality or scaling issues, which make them difficult to recognize with traditional methods3.

Option A is an accurate example of using AI Computer Vision features in a UiPath project, because it involves identifying and interacting with UI elements in a remote desktop application, which is one of the main use cases of AI Computer Vision. By using the Computer Vision activities, such as CV Screen Scope, CV Click, CV Get Text, etc., you can automate tasks in a remote desktop application without using selectors, OCR, or image recognition activities4.

The other options are not accurate examples of using AI Computer Vision features in a UiPath project, because they involve tasks that are not related to the automation of remote applications or desktops, or that do not use the Computer Vision activities.

For example:

Option B involves training a custom machine learning model, which is not a feature of AI Computer Vision, but of the UiPath AI Fabric, a platform that enables you to deploy, consume, and improve machine learning models in UiPath.

Option C involves extracting plain text from a scanned PDF document, which is not a feature of AI Computer Vision, but of the UiPath Document Understanding, a framework that enables you to classify, extract, and validate data from various types of documents.

Option D involves performing sentiment analysis on a text string, which is not a feature of AI Computer Vision, but of the UiPath Text Analysis, a set of activities that enable you to analyze the sentiment, key phrases, and language of a text using pre-trained machine learning models.

Reference: 1: Studio – About Selectors – UiPath Documentation Portal; 2: AI Computer Vision – Introduction – UiPath Documentation Portal; 3: The New UiPath AI Computer Vision Is Now in Public Preview; 4: Activities – Computer Vision activities – UiPath Documentation Portal; AI Fabric – Overview – UiPath Documentation Portal; Document Understanding – Overview – UiPath Documentation Portal; Text Analysis – UiPath Activities

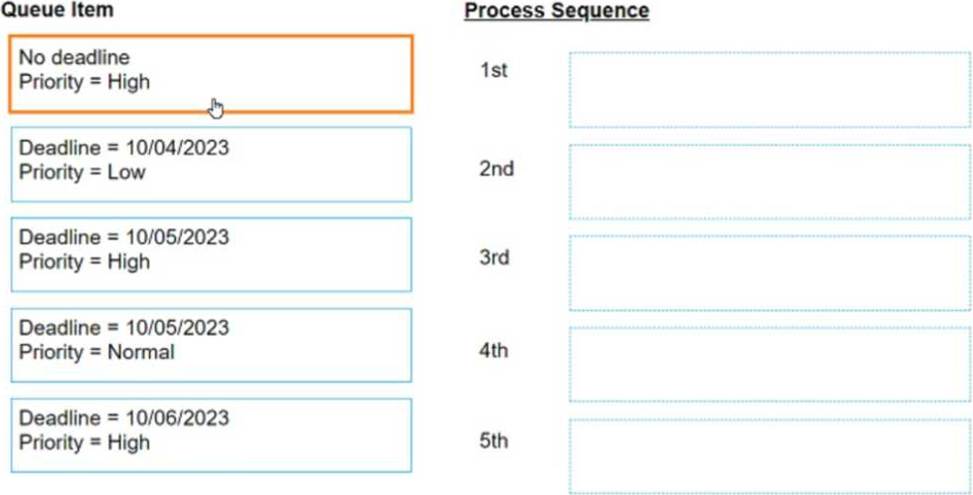

DRAG DROP

On 10/04/2023 five Queue Items were added to a queue.

What is the appropriate processing sequence for Queue Items based on their properties?

Instructions: Drag the Queue Item found on the "Left" and drop on the correct Process Sequence found on the "Right".

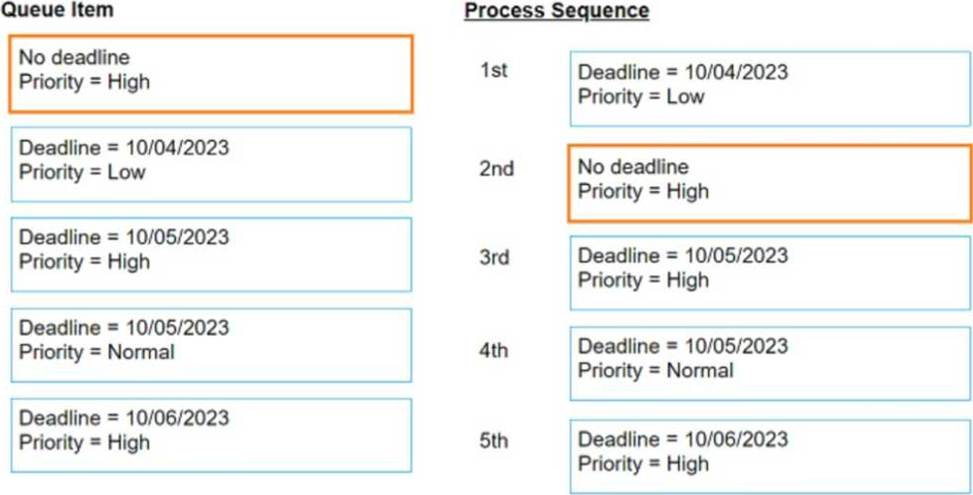

Explanation:

The processing sequence for queue items in UiPath Orchestrator is determined primarily by the deadline and priority of each item. Items with an earlier deadline are processed first.

If multiple items have the same deadline, priority determines the order: High, Normal, then Low. Following this logic, the processing sequence would be:

1st: Deadline = 10/04/2023, Priority = Low. Since this is the only item with a deadline on the current day (assuming today is 10/04/2023), it should be processed first regardless of its priority.

2nd: No deadline, Priority = High. Although this item has no deadline, its high priority places it next in the sequence, after the item due on the current day.

3rd: Deadline = 10/05/2023, Priority = High. This item is next due to its combination of an imminent deadline and high priority.

4th: Deadline = 10/05/2023, Priority = Normal. This item has the same deadline as the third but a lower priority, so it comes next.

5th: Deadline = 10/06/2023, Priority = High. Although this item has high priority, it has the latest deadline, so it is processed last.

So the order would be:

- 1st: Deadline = 10/04/2023, Priority = Low
- 2nd: No deadline, Priority = High
- 3rd: Deadline = 10/05/2023, Priority = High
- 4th: Deadline = 10/05/2023, Priority = Normal
- 5th: Deadline = 10/06/2023, Priority = High

When automating the process of entering values into a web form, requiring each field to be brought to the foreground, which property of the Type Into activity should be adjusted to achieve this?

- A . Delay before

- B . Activate

- C . Input Element

- D . Selector

B

Explanation:

The Type Into activity is used to send keystrokes to a UI element, such as a text box1. The activity has several properties that can be adjusted to achieve different behaviors and results1. One of these properties is the Activate property, which determines whether the specified UI element is brought to the foreground and activated before the text is written1. By default, this property is not selected, which means that the activity will type into the current active window1. However, if the property is selected, the activity will first make sure that the target UI element is visible and focused, and then type the text1. Therefore, if the automation process requires each field to be brought to the foreground, the Activate property of the Type Into activity should be adjusted to achieve this.

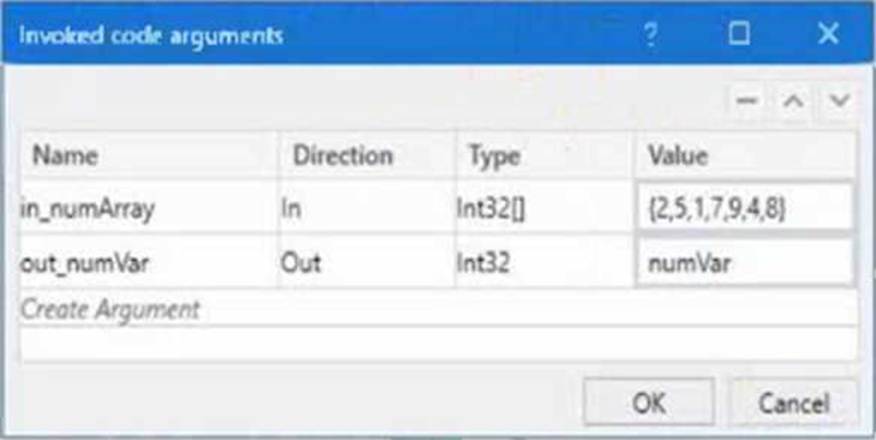

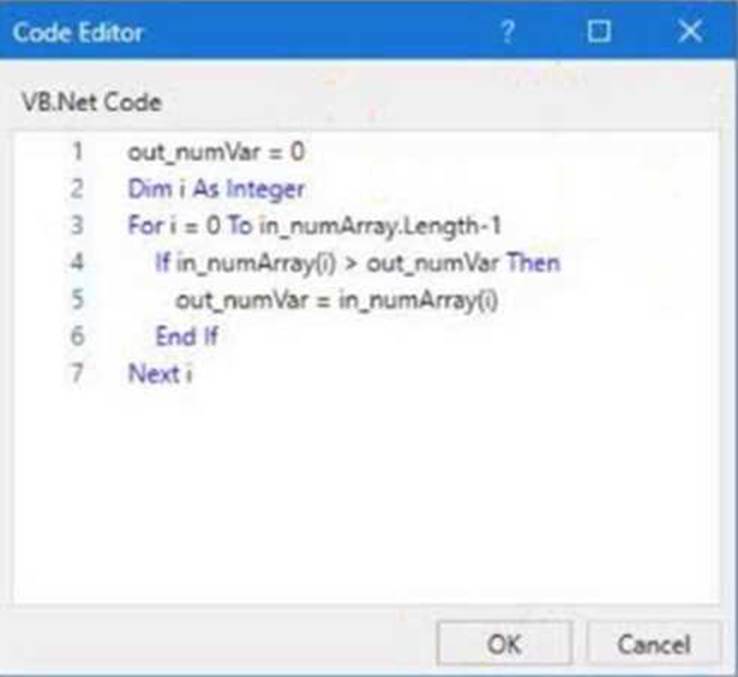

Given the following list of arguments:

and the following code:

What is the value that will be displayed in the Output Panel at the end of the sequence below?

- A . 1

- B . 2

- C . 7

- D . 9

D

Explanation:

The value displayed in the Output Panel at the end of the sequence is 9. The code in the Invoke Code activity loops through the array in_numArray and sets the variable out_numVar to the highest value in the array. According to the list of arguments, in_numArray holds the values {1, 2, 7, 9, 4}, and out_numVar is initialized to 0. The For loop iterates from index 0 to the upper bound of the array, which is 4. In each iteration, the If condition checks whether the current element of the array is greater than the current value of out_numVar; if it is, out_numVar is assigned that element, otherwise it remains unchanged. Tracing the loop: after the first iteration, out_numVar becomes 1; after the second, 2; after the third, 7; after the fourth, 9; and after the fifth it remains 9, since 4 is not greater than 9. The Write Line activity then outputs the value of out_numVar, which is 9, to the Output Panel.
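The exhibit's code is not reproduced in this text, so here is a minimal Python re-creation of the loop as the explanation describes it. The function name running_max and the snake_case variable names are illustrative stand-ins for in_numArray and out_numVar; this is not the exhibit's actual VB.NET or C# code.

```python
def running_max(in_num_array):
    """Re-create the described loop: keep the largest value seen so far."""
    out_num_var = 0  # initial value, as stated in the explanation
    # Iterate over indices 0 .. upper bound (4 for a five-element array).
    for i in range(len(in_num_array)):
        # Replace the stored value only when the current element is larger.
        if in_num_array[i] > out_num_var:
            out_num_var = in_num_array[i]
    return out_num_var


print(running_max([1, 2, 7, 9, 4]))  # prints 9
```

Because the running value starts at 0 rather than at the first element, an array containing only negative numbers would yield 0 in this sketch; that edge case does not affect the exam answer.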

Reference: Invoke Code

What are the primary functions of the UiPath Integration Service?

- A . Enables automation with a library of connectors, manages connections easily with standardized authentication, kicks off automations with server-side triggers or events, provides curated activities and events, simplifies automation design.

- B . Automates UI design, manages API connections, provides limited activities and events, simplifies automation design.

- C . Enables automation with API integration, manages connections with user-provided authentication, kicks off automations based on application-specific triggers, simplifies automation design with the help of third-party libraries.

- D . Enables automation with UI components, manages API keys, kicks off automations with client-side triggers, provides curated events.

A

Explanation:

UiPath Integration Service is a component of the UiPath Platform that allows users to automate third-party applications using both UI and API integration. It has the following primary functions1:

Enables automation with a library of connectors: Connectors are pre-built API integrations that provide a consistent developer experience and simplify the integration process. Users can browse and import connectors from the Connector Catalog or the UiPath Marketplace, or create their own connectors using the Connector Builder (Preview).

Manages connections easily with standardized authentication: Users can create and manage secure connections to various applications using standardized authentication methods, such as OAuth 2.0, Basic, API Key, etc. Users can also share connections with other users, groups, or automations within the UiPath Platform.

Kicks off automations with server-side triggers or events: Users can trigger automations based on events from external applications, such as webhooks, email, or schedules. Users can also configure parameters and filters for the events to customize the automation logic.

Provides curated activities and events: Users can access a set of curated activities and events that are specific to each connector and application. These activities and events can be used in UiPath Studio or UiPath StudioX to design and execute automations.

Simplifies automation design: Users can leverage the UiPath Integration Service Designer to create and test integrations in a graphical interface. Users can also import common API formats, such as Swagger, YAML, or Postman collections, to generate connectors automatically.

Reference: 1: Integration Service – Introduction – UiPath Documentation Portal

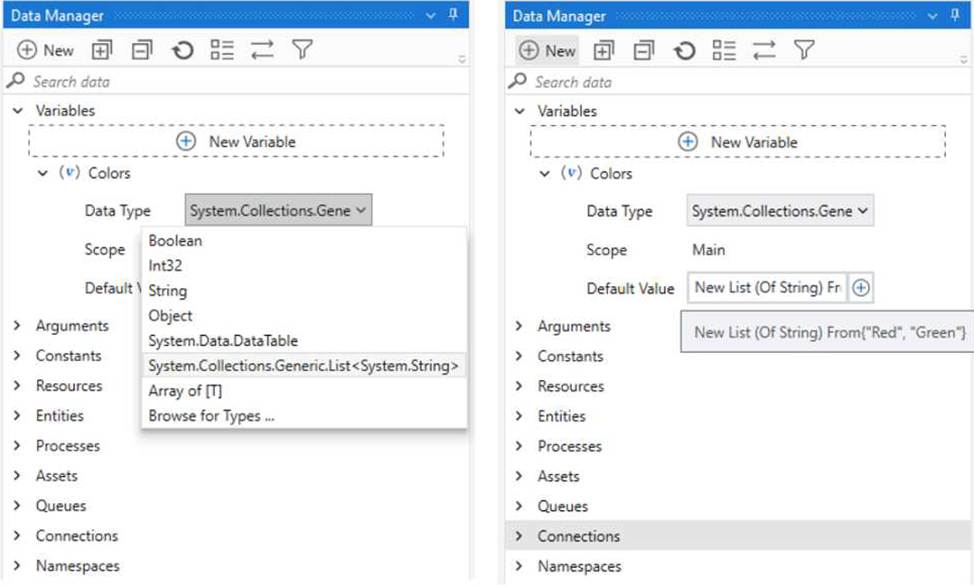

A developer wants to add items to a list of strings using the Invoke Method activity.

The list is declared as follows:

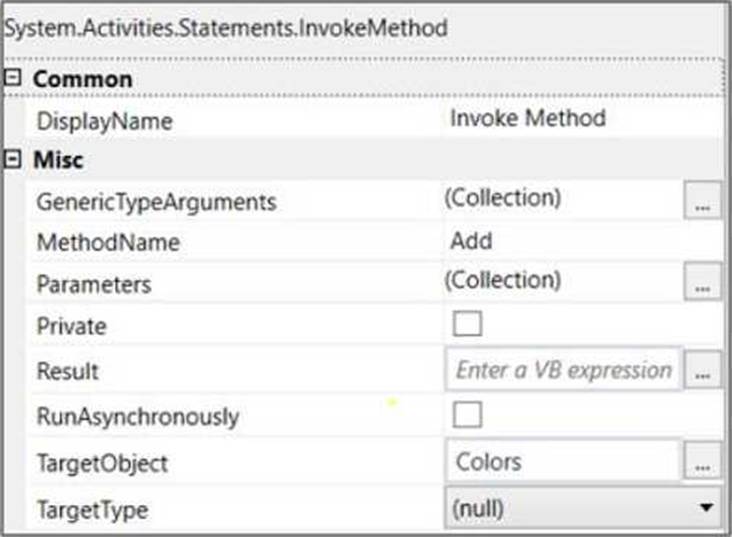

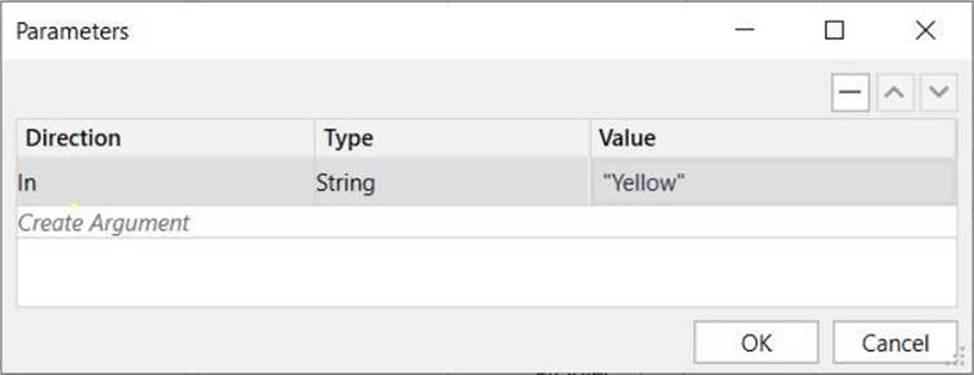

The Invoke Method includes the following properties:

Based on the exhibits, what is the outcome of this Invoke Method activity?

- A . Colors will contain items in the following order: "Yellow", "Red", "Green".

- B . Colors will contain items in the following order: "Red", "Green".

- C . Invoke Method activity will throw an error.

- D . Colors will contain items in the following order: "Red", "Green", "Yellow".

D

Explanation:

Based on the exhibits provided, the developer has set up an Invoke Method activity to add an item to the "Colors" list variable. The list is initially declared with two items "Red" and "Green". The Invoke Method activity is configured to add the string "Yellow" to this list.

The properties of the Invoke Method activity indicate that the method ‘Add’ will be called on the target object ‘Colors’ with the parameter "Yellow". This means the string "Yellow" will be added to the end of the list.

The outcome of executing this Invoke Method activity will be:

D. Colors will contain items in the following order: "Red", "Green", "Yellow".

This is because items in a List<T> in .NET are added in sequence, and the "Add" method appends the new item to the end of the list.
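As a rough analogy only (Python, not .NET), the sketch below mimics what Invoke Method does: it looks up a method by name on a target object and calls it with the given parameter. Python's list.append stands in for List&lt;T&gt;.Add; the variable names target_object, method_name, and parameters are illustrative and do not correspond to UiPath property names.

```python
# Python's list stands in for .NET's List<String>; append plays the role of Add.
colors = ["Red", "Green"]  # initial values, per the explanation

# Invoke Method calls a method by name on a target object; getattr does the same here.
target_object = colors
method_name = "append"  # the .NET method configured in the exhibit is "Add"
parameters = ["Yellow"]

getattr(target_object, method_name)(*parameters)

print(colors)  # ['Red', 'Green', 'Yellow']
```

In both languages the call mutates the existing list in place and appends to the end, which is why "Yellow" ends up last.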

What is created automatically when you create a coded automation in UiPath?

- A . A namespace using the name of the Studio project.

- B . A new activity package with the name of the Studio project.

- C . A helper class using the name of the Studio project.

- D . A folder with the name of the Studio project.

A

Explanation:

When creating a Coded Automation project in UiPath Studio, a default namespace is automatically generated using the project name. This helps organize classes and methods and ensures that the structure aligns with best practices for .NET-based development in UiPath.

Reference: UiPath Developer Guide > Coded Workflows > Project Structure

Which statement best describes the purpose of ‘Publish’ in UiPath Studio?

- A . It compiles the project into a .nupkg file.

- B . It runs the project for testing purposes.

- C . It documents the project in a user-friendly format.

- D . It sends the project to a specified email address.

A

What happens when the area selection feature in the UiPath Computer Vision wizard is used?

- A . The selected area is treated as a single UI element, with no further analysis of its contents.

- B . The selected area is automatically resized to fit all UI elements within it.

- C . A duplicated UI can be selected, and the copy is modified in the automation process.

- D . A portion of the application UI can be selected, which is helpful when dealing with multiple fields bearing the same label.

D

Explanation:

The area selection feature in the UiPath Computer Vision wizard is used to indicate a specific region of the screen that you want to work with. It can be activated by clicking the Indicate on screen button in the CV Screen Scope activity or any other Computer Vision activity that requires a target element. The area selection feature allows you to draw a box around the desired area, and then choose an anchor for it. The anchor is a stable UI element that helps locate the target area at runtime.

The area selection feature is helpful when dealing with multiple fields bearing the same label, such as text boxes, check boxes, or radio buttons. In such cases, the Computer Vision engine may not be able to identify the correct field to interact with, or it may return ambiguous results. By using the area selection feature, you can narrow down the scope of the target element and avoid confusion. The other options are not correct descriptions of the area selection feature.

Option A is false, because the selected area is not treated as a single UI element, but as a region that contains one or more UI elements. The Computer Vision engine still analyzes the contents of the selected area and returns the best match for the target element.

Option B is false, because the selected area is not automatically resized to fit all UI elements within it. The selected area remains fixed, unless you manually adjust it.

Option C is false, because the area selection feature does not create a duplicate UI, nor does it modify the copy in the automation process. The area selection feature only selects a portion of the existing UI, and performs the specified action on it.
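To illustrate why narrowing the search region resolves duplicate labels, here is a toy model in Python. It is not UiPath's Computer Vision engine: the Box and Element classes, the coordinates, and the find_by_label function are all hypothetical. It only shows that filtering candidates by a selected rectangle turns an ambiguous match into a unique one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    left: int
    top: int
    right: int
    bottom: int

    def contains(self, other: "Box") -> bool:
        """True if `other` lies entirely inside this box."""
        return (self.left <= other.left and self.top <= other.top
                and other.right <= self.right and other.bottom <= self.bottom)


@dataclass(frozen=True)
class Element:
    label: str
    box: Box


def find_by_label(elements, label, area=None):
    """Return every element with the label, optionally restricted to an area."""
    return [e for e in elements
            if e.label == label and (area is None or area.contains(e.box))]


# Two text fields both labelled "Name": one in a billing section, one in shipping.
screen = [
    Element("Name", Box(100, 100, 300, 120)),  # billing section
    Element("Name", Box(100, 400, 300, 420)),  # shipping section
]

print(len(find_by_label(screen, "Name")))  # prints 2: ambiguous
shipping_area = Box(50, 350, 600, 600)     # the "selected area"
print(find_by_label(screen, "Name", shipping_area))  # only the shipping field
```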

Reference: Using the Computer Vision activities – UiPath Documentation Portal, Computer Vision activities – UiPath Documentation Portal, Extract multiple words – AI Computer Vision – UiPath Community Forum, Computer Vision Recording – UiPath Community Forum

Which of the following is a tag in a selector?

- A . id='selMonth'

- B . aaname='FirstName'

- C . <html app='msedge.exe' title='Find Unicorn Name' />

- D . class='down-chevron set-font'

C

Explanation:

A tag in a selector is a node that represents a UI element or a container of UI elements. A tag has the following format: <ui_system attr_name_1='attr_value_1' … attr_name_N='attr_value_N'/>. A tag can have one or more attributes that help identify the UI element uniquely and reliably1. For example, the tag <html app='msedge.exe' title='Find Unicorn Name' /> represents the HTML document of a web page opened in the Microsoft Edge browser, with the title "Find Unicorn Name". The attributes app='msedge.exe' and title='Find Unicorn Name' distinguish this tag from other HTML documents.

The other options are not tags, but attributes. An attribute is a property of a UI element that has a name and a value. For example, id='selMonth' is an attribute indicating that the ID of a UI element is "selMonth". Attributes are used inside tags to specify the UI element or container1.
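The tag/attribute distinction can be made concrete with a small parser. This is a simplified Python sketch, not a UiPath API: parse_tag is a hypothetical helper, and it assumes straight single quotes with no escaped quotes inside attribute values. A string counts as a tag only if it is wrapped in `<` and `/>` with a UI-system name first; a bare name='value' pair is just an attribute.

```python
import re

# One attribute inside a tag: name='value'.
ATTRIBUTE = re.compile(r"([\w-]+)='([^']*)'")
# A tag: '<', the UI-system name, zero or more attributes, then '/>'.
TAG = re.compile(r"^<(\w+)((?:\s+[\w-]+='[^']*')*)\s*/>$")


def parse_tag(text):
    """Return (tag_name, {attr: value}) if text is a selector tag, else None."""
    match = TAG.match(text.strip())
    if match is None:
        return None
    return match.group(1), dict(ATTRIBUTE.findall(match.group(2)))


print(parse_tag("<html app='msedge.exe' title='Find Unicorn Name' />"))
# ('html', {'app': 'msedge.exe', 'title': 'Find Unicorn Name'})
print(parse_tag("id='selMonth'"))  # None: an attribute on its own is not a tag
```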

Reference: 1: Studio – About Selectors – UiPath Documentation Portal