Practice Free SPLK-2002 Exam Online Questions

Which index-time props.conf attributes impact indexing performance? (Select all that apply.)

- A . REPORT

- B . LINE_BREAKER

- C . ANNOTATE_PUNCT

- D . SHOULD_LINEMERGE

B,D

Explanation:

The index-time props.conf attributes that impact indexing performance are LINE_BREAKER and SHOULD_LINEMERGE. LINE_BREAKER determines how Splunk splits the incoming data stream into events, and SHOULD_LINEMERGE determines whether Splunk then merges multiple lines into a single event. Both run in the parsing pipeline for every event, so an expensive line-breaking regex or unnecessary line merging slows down indexing. Setting SHOULD_LINEMERGE = false together with an explicit LINE_BREAKER is a common way to reduce that cost. The REPORT attribute does not impact indexing performance, because it applies transforms at search time. ANNOTATE_PUNCT is applied at index time (it controls whether Splunk indexes the punct field for each event), but its effect on indexing throughput is minor compared with event breaking and merging. For more information, see About props.conf and transforms.conf in the Splunk documentation.
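To illustrate what LINE_BREAKER does, the following Python sketch imitates its documented semantics: wherever the regex matches, the start of the first capturing group ends the previous event, the end of that group starts the next event, and the captured text is discarded. It is a simplified model, not Splunk's parser; the function name break_events and the sample data are hypothetical.

```python
import re


def break_events(raw: str, line_breaker: str = r"([\r\n]+)") -> list[str]:
    """Split raw text into events using LINE_BREAKER-style semantics (simplified model)."""
    pattern = re.compile(line_breaker)
    events = []
    start = 0
    for match in pattern.finditer(raw):
        # The start of capture group 1 ends the previous event...
        events.append(raw[start:match.start(1)])
        # ...and its end starts the next event; the captured text itself is discarded.
        start = match.end(1)
    events.append(raw[start:])
    return [event for event in events if event]


raw = "2024-01-15 10:00:00 ERROR boom\n  at handler()\n2024-01-15 10:00:01 INFO ok"

# Break at every run of newlines: one event per line.
print(break_events(raw))
# Break only before a line that starts with a date, keeping the continuation line attached.
print(break_events(raw, r"([\r\n]+)(?=\d{4}-\d{2}-\d{2})"))
```

The lookahead variant keeps the indented continuation line with its timestamped event in a single regex pass, which is the kind of result people otherwise rely on SHOULD_LINEMERGE to produce.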

metrics.log is stored in which index?

- A . main

- B . _telemetry

- C . _internal

- D . _introspection

C

Explanation:

According to the Splunk documentation [1], metrics.log is a file that contains various metrics data for reviewing product behavior, such as the pipeline, queue, thruput, and tcpout_connections metric groups. metrics.log is stored in the _internal index by default [2], a special index that contains internal logs and metrics for Splunk Enterprise.
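Each metrics.log line records one metric group as comma-separated key=value pairs. The Python sketch below pulls those pairs out of a single line. The function name is hypothetical, the sample line is illustrative, and the exact fields vary by group and Splunk version, so treat the line format as an assumption.

```python
def parse_metrics_line(line: str) -> dict[str, str]:
    """Extract key=value pairs from one metrics.log line (line format assumed)."""
    # Everything after the first " - " separator is the comma-separated payload.
    _, _, payload = line.partition(" - ")
    fields = {}
    for pair in payload.split(","):
        key, sep, value = pair.partition("=")
        if sep:  # skip fragments that contain no '='
            fields[key.strip()] = value.strip()
    return fields


sample = ("01-15-2024 10:00:00.123 +0000 INFO  Metrics - group=queue, "
          "name=parsingqueue, max_size_kb=512, current_size_kb=0, current_size=0")
fields = parse_metrics_line(sample)
print(fields["group"], fields["name"], fields["max_size_kb"])
```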

The other options are false because:

main is the default index for user data, not internal data [3].

_telemetry is an index that contains data collected by the Splunk Telemetry feature, which sends anonymous usage and performance data to Splunk [4].

_introspection is an index that contains platform instrumentation data, such as resource usage of Splunk processes and the host, which the Monitoring Console uses to report on the health and performance of Splunk components.

Which of the following data sources are used for the Monitoring Console dashboards?

- A . REST API calls

- B . Splunk btool

- C . Splunk diag

- D . metrics.log

A,D

Explanation:

According to the Splunk Enterprise documentation for the Monitoring Console (MC), the data displayed in its dashboards comes primarily from two internal mechanisms: REST API calls and metrics.log.

The Monitoring Console (formerly the Distributed Management Console, or DMC) uses REST API endpoints to collect system-level information from all connected instances, such as indexer clustering status, license usage, and search head performance. These REST calls pull current configuration and status data from splunkd's management layer (for example, /services/server/info, /services/licenser/licenses, and /services/cluster/master/peers).

Additionally, metrics.log is one of the main data sources used by the Monitoring Console. This log records Splunk's internal performance metrics, including pipeline processing time, queue sizes, and indexing throughput. Dashboards such as Indexing Performance are powered by searches over the _internal index that reference this log.

The other tools listed, btool (a configuration troubleshooting utility) and diag (a diagnostic archive generator), are not used as runtime data sources for Monitoring Console dashboards. They assist in troubleshooting but are not queried by the MC.
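The REST side can be pictured with a small Python sketch that builds a management-port URL and reads the entry/content structure that splunkd returns when output_mode=json is requested. The helper names are hypothetical, the default management port 8089 is assumed, and the sample payload is a trimmed illustration rather than a real response; no request is actually sent.

```python
import json
from urllib.parse import urlencode


def rest_url(host: str, endpoint: str, port: int = 8089) -> str:
    """Build a splunkd REST URL (8089 is assumed to be the management port)."""
    query = urlencode({"output_mode": "json"})
    return f"https://{host}:{port}/services/{endpoint.strip('/')}?{query}"


def entry_contents(payload: str) -> dict:
    """Map each entry name to its content dict in a JSON REST response."""
    doc = json.loads(payload)
    return {entry["name"]: entry["content"] for entry in doc.get("entry", [])}


# Trimmed, illustrative payload; real responses carry many more fields.
sample = '{"entry": [{"name": "server-info", "content": {"serverName": "idx01", "version": "9.1.0"}}]}'

print(rest_url("idx01.example.com", "server/info"))
print(entry_contents(sample)["server-info"]["serverName"])
```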

Reference (Splunk Enterprise Documentation):

• Monitoring Console Overview: Data Sources and Architecture

• metrics.log Reference: Internal Performance Data Collection

• REST API Usage in Monitoring Console

• Distributed Management Console Configuration Guide

Which of the following artifacts are included in a Splunk diag file? (Select all that apply.)

- A . OS settings.

- B . Internal logs.

- C . Customer data.

- D . Configuration files.

B,D

Explanation:

The following artifacts are included in a Splunk diag file:

Internal logs. These are the log files that Splunk generates to record its own activities, such as splunkd.log, metrics.log, audit.log, and others. These logs can help troubleshoot Splunk issues and monitor Splunk performance.

Configuration files. These are the files that Splunk uses to configure various aspects of its operation, such as server.conf, indexes.conf, props.conf, transforms.conf, and others. These files help explain Splunk's settings and behavior.

The following artifacts are not included in a Splunk diag file:

OS settings. These are the settings of the operating system that Splunk runs on, such as the kernel version, memory size, and disk space. These settings are not part of the Splunk diag file; collect them separately with operating system tools if they are needed.

Customer data. This is the data that Splunk indexes and makes searchable, such as the rawdata and tsidx files. It is not part of the Splunk diag file, because it may contain sensitive or confidential information. For more information, see Generate a diagnostic snapshot of your Splunk Enterprise deployment in the Splunk documentation.
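To see the two included artifact types in an actual diag, you can list the archive's members. The Python sketch below counts files under log/ and etc/ inside a .tar.gz. The diag-<host>-<date>/ top-level layout it expects is an assumption based on typical diag archives, and build_sample_diag (a hypothetical helper) creates a tiny stand-in archive purely for demonstration.

```python
import io
import tarfile


def summarize_diag(fileobj) -> dict:
    """Count log files and config files in a diag-style .tar.gz (layout assumed)."""
    counts = {"logs": 0, "config": 0, "other": 0}
    with tarfile.open(fileobj=fileobj, mode="r:gz") as tar:
        for member in tar.getmembers():
            if not member.isfile():
                continue
            # Expected shape: diag-<host>-<date>/<top-level dir>/...
            parts = member.name.split("/")
            top = parts[1] if len(parts) > 1 else ""
            if top == "log":
                counts["logs"] += 1
            elif top == "etc":
                counts["config"] += 1
            else:
                counts["other"] += 1
    return counts


def build_sample_diag() -> io.BytesIO:
    """Build a tiny in-memory stand-in for a diag archive (hypothetical contents)."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w:gz") as tar:
        for name in ["diag-idx01-2024-01-15/log/splunkd.log",
                     "diag-idx01-2024-01-15/log/metrics.log",
                     "diag-idx01-2024-01-15/etc/system/local/server.conf"]:
            data = b"sample\n"
            info = tarfile.TarInfo(name)
            info.size = len(data)
            tar.addfile(info, io.BytesIO(data))
    buf.seek(0)
    return buf


print(summarize_diag(build_sample_diag()))
```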

When converting from a single-site to a multi-site cluster, what happens to existing single-site clustered buckets?

- A . They will continue to replicate within the origin site and age out based on existing policies.

- B . They will maintain replication as required according to the single-site policies, but never age out.

- C . They will be replicated across all peers in the multi-site cluster and age out based on existing policies.

- D . They will stop replicating within the single-site and remain on the indexer they reside on and age out according to existing policies.

A

Explanation:

When converting from a single-site to a multi-site cluster, existing single-site buckets are not converted to multisite buckets. They continue to follow the single-site replication and search factors, and by default the cluster manager keeps their copies within a single site (their origin site), as governed by the constrain_singlesite_buckets setting in server.conf. They also keep aging out normally, because freezing is driven by the index's retention settings in indexes.conf, not by the bucket's replication policy.

The other options are incorrect: single-site buckets do age out (B); they are not replicated across all peers in the multi-site cluster, because they keep the single-site replication factor, which may be lower than the multisite total replication factor (C); and they do not stop replicating and stay on the indexer they reside on, because they remain subject to the replication and availability rules of the cluster (D).

Which indexes.conf attribute would prevent an index from participating in an indexer cluster?

- A . available_sites = none

- B . repFactor = 0

- C . repFactor = auto

- D . site_mappings = default_mapping

B

Explanation:

The repFactor (replication factor) attribute in the indexes.conf file determines whether an index participates in indexer clustering and how many copies of its data are replicated across peer nodes.

When repFactor is set to 0, it explicitly instructs Splunk to exclude that index from participating in the cluster replication and management process.

This means:

The index is not replicated across peer nodes.

It will not be managed by the Cluster Manager.

It exists only locally on the indexer where it was created.

Such indexes are typically used for data that only needs to live on one peer, such as test data or node-specific diagnostic data.

By contrast:

repFactor = auto enables replication for the index, using the cluster-wide replication factor configured on the Cluster Manager.

available_sites and site_mappings are multisite settings configured in server.conf on the Cluster Manager, not per-index indexes.conf attributes, and neither removes an index from clustering.

Setting repFactor = 0 (which is also the default value) is the supported way to keep an index out of the cluster in a clustered environment; to replicate an index, set repFactor = auto on the peers.
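As a quick illustration of that rule, the Python sketch below reads indexes.conf-style text with configparser and reports which stanzas would be replicated, treating a missing repFactor as 0. The index names and paths are hypothetical, and configparser only approximates Splunk's .conf syntax.

```python
import configparser

INDEXES_CONF = """
[web_logs]
homePath = $SPLUNK_DB/web_logs/db
coldPath = $SPLUNK_DB/web_logs/colddb
thawedPath = $SPLUNK_DB/web_logs/thaweddb
repFactor = auto

[scratch]
homePath = $SPLUNK_DB/scratch/db
coldPath = $SPLUNK_DB/scratch/colddb
thawedPath = $SPLUNK_DB/scratch/thaweddb
"""


def replicated_indexes(text: str) -> dict[str, bool]:
    """Map each index stanza to True if repFactor = auto, else False."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep Splunk's camelCase keys such as repFactor
    parser.read_string(text)
    result = {}
    for name in parser.sections():
        # A missing repFactor falls back to 0, i.e. the index is not replicated.
        rep_factor = parser[name].get("repFactor", "0").strip()
        result[name] = rep_factor == "auto"
    return result


print(replicated_indexes(INDEXES_CONF))
```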

Reference (Splunk Enterprise Documentation):

• indexes.conf Reference: repFactor Attribute Explanation

• Managing Non-Clustered Indexes in Clustered Deployments

• Indexer Clustering: Index Participation and Replication Policies

• Splunk Enterprise Admin Manual: Local-Only and Clustered Index Configurations

What is a recommended way to improve search performance?

- A . Use the shortest query possible.

- B . Filter as much as possible in the initial search.

- C . Use non-streaming commands as early as possible.

- D . Leverage the not expression to limit returned results.

B

Explanation:

Splunk Enterprise Search Optimization documentation consistently emphasizes that filtering data as early as possible in the search pipeline is the most effective way to improve search performance. The base search (the part before the first pipe |) determines the volume of raw events Splunk retrieves from the indexers. Therefore, by applying restrictive conditions early (such as time ranges, indexed fields, and metadata filters), you can drastically reduce the number of events that need to be fetched and processed downstream.

The best practice is to put indexed field filters (e.g., index=security sourcetype=syslog host=server01) and other filtering terms in the base search itself, rather than retrieving broad results and filtering them later with search or where commands. This minimizes unnecessary data movement between indexers and the search head, improving both search speed and system efficiency.

Using non-streaming commands early (Option C) can degrade performance because they require full result sets before producing output. Likewise, focusing solely on shortening queries (Option A) or excessive use of the not operator (Option D) does not guarantee efficiency, as both may still process large datasets.

Filtering early leverages Splunk’s distributed search architecture to limit data at the indexer level, reducing processing load and network transfer.
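The effect of filtering early can be shown with a toy model in Python: the same predicate and the same per-event "expensive" step are applied in two orders, and the number of events reaching the expensive step is compared. This only models the principle; it is not how Splunk schedules work across indexers, and all names are hypothetical.

```python
def run_pipeline(events, predicate, expensive, filter_first):
    """Return (results, number of events that reached the expensive step)."""
    processed = 0
    results = []
    for event in events:
        if filter_first and not predicate(event):
            continue  # discarded before any costly work is done
        processed += 1
        value = expensive(event)
        # When filtering late, the predicate is only applied after the costly step.
        if filter_first or predicate(event):
            results.append(value)
    return results, processed


def is_syslog(event):
    return event["sourcetype"] == "syslog"


def enrich(event):
    return event["id"] * 2  # stand-in for costly per-event processing


events = [{"id": i, "sourcetype": "syslog" if i % 10 == 0 else "access_combined"}
          for i in range(1000)]
early, early_cost = run_pipeline(events, is_syslog, enrich, filter_first=True)
late, late_cost = run_pipeline(events, is_syslog, enrich, filter_first=False)
print(early_cost, late_cost)
```

Both orders return the same results, but only the early-filter run limits the costly step to matching events.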

Reference (Splunk Enterprise Documentation):

• Search Performance Tuning and Optimization Guide

• Best Practices for Writing Efficient SPL Queries

• Understanding Streaming and Non-Streaming Commands

• Search Job Inspector: Analyzing Execution Costs

Which Splunk internal field can confirm duplicate event issues from failed file monitoring?

- A . _time

- B . _indextime

- C . _index_latest

- D . latest

B

Explanation:

According to the Splunk documentation [1], the _indextime field is the time when Splunk indexed the event. This field can be used to confirm duplicate event issues from failed file monitoring: when a monitored file is read again, the duplicate copies share the same raw text and _time but have different _indextime values. Because _indextime is not displayed by default, you can expose it in the search (for example, with eval and strftime) and compare the values for each duplicate.
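The comparison can be sketched in Python over exported events: group them by source and _raw, and flag any group whose copies carry more than one _indextime. The event dictionaries and the function name are hypothetical; in Splunk you would do the equivalent grouping in SPL.

```python
from collections import defaultdict


def find_reindexed_duplicates(events):
    """Return {(source, _raw): sorted _indextime values} for events indexed more than once."""
    index_times = defaultdict(set)
    for event in events:
        index_times[(event["source"], event["_raw"])].add(event["_indextime"])
    return {key: sorted(times) for key, times in index_times.items() if len(times) > 1}


events = [
    {"source": "/var/log/app.log", "_raw": "user=alice action=login", "_indextime": 1700000000},
    {"source": "/var/log/app.log", "_raw": "user=alice action=login", "_indextime": 1700003600},
    {"source": "/var/log/app.log", "_raw": "user=bob action=login", "_indextime": 1700000005},
]
print(find_reindexed_duplicates(events))
```

Copies that share a single _indextime are not flagged, matching the idea that a re-read file produces copies indexed at different times.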

The other options are false because:

The _time field is the timestamp extracted from the event data, not the time when Splunk indexed the event, so duplicate copies of the same event usually share the same _time [1].

_index_latest is not an event field. It is a search time modifier that limits a search by index time rather than by event time.

latest is a time modifier that sets the upper time bound of a search, together with earliest [4]; it is not the time when Splunk indexed the event.

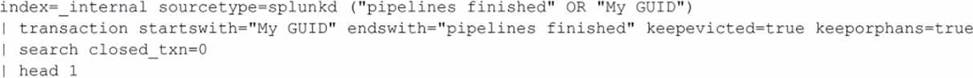

A Splunk instance has crashed, but no crash log was generated.

There is an attempt to determine what user activity caused the crash by running the following search:

What does searching for closed_txn=0 do in this search?

- A . Filters results to situations where Splunk was started and stopped multiple times.

- B . Filters results to situations where Splunk was started and stopped once.

- C . Filters results to situations where Splunk was stopped and then immediately restarted.

- D . Filters results to situations where Splunk was started, but not stopped.

D

Explanation:

Searching for closed_txn=0 in this search filters results to situations where Splunk was started but not stopped. The transaction never saw a closing event, which is consistent with Splunk crashing before it could log a clean shutdown. The closed_txn field is added by the transaction command when keepevicted=true is set: it is 1 for transactions that were closed (for example, by an event that matches the endswith condition) and 0 for evicted transactions that were never closed [1]. Therefore, option D is the correct answer, and options A, B, and C are incorrect.

1: transaction command overview
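A simplified Python model of that pairing logic: start and stop messages are matched per host, closed pairs get closed_txn=1, and a start with no matching stop is reported with closed_txn=0. The marker strings and function name are hypothetical, and the real transaction command has many more options and constraints, so this only illustrates the meaning of the field.

```python
def build_transactions(events, start_marker, end_marker):
    """Pair start/stop messages per host; events are (ts, host, message) in ascending time."""
    open_txns = {}
    results = []
    for ts, host, message in events:
        if start_marker in message:
            if host in open_txns:
                # A new start arrived before any stop: the earlier start never closed.
                results.append({**open_txns.pop(host), "closed_txn": 0})
            open_txns[host] = {"host": host, "start": ts}
        elif end_marker in message and host in open_txns:
            results.append({**open_txns.pop(host), "end": ts, "closed_txn": 1})
    # Anything still open at the end was started but never stopped.
    for txn in open_txns.values():
        results.append({**txn, "closed_txn": 0})
    return results


events = [
    (1, "idx01", "Splunkd starting"),
    (5, "idx01", "Shutting down splunkd"),
    (10, "idx01", "Splunkd starting"),
    (20, "idx01", "Splunkd starting"),
]
txns = build_transactions(events, "starting", "Shutting down")
unclosed = [txn["start"] for txn in txns if txn["closed_txn"] == 0]
print(unclosed)
```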

If the maxDataSize attribute is set to auto_high_volume in indexes.conf on a 64-bit operating system, what is the maximum hot bucket size?

- A . 4GB

- B . 750 MB

- C . 10 GB

- D . 1GB

C

Explanation:

According to the indexes.conf reference in Splunk Enterprise, maxDataSize controls the maximum size of a single hot bucket (an explicit value in MB, or one of the "auto" values) before Splunk rolls it to a warm bucket. When the value is set to auto_high_volume on a 64-bit system, Splunk sets the maximum hot bucket size to 10 GB.

The “auto” settings let Splunk choose a predefined size:

auto: 750 MB (the default).

auto_high_volume: intended for high-volume indexes (the documentation suggests it for indexes that receive more than about 10 GB of data per day); 10 GB per hot bucket on 64-bit systems and 1 GB on 32-bit systems.

The purpose of larger hot buckets for high-volume indexes is to reduce the overhead of frequent bucket rolling during heavy data ingestion. Note that the value differs on 32-bit systems, where auto_high_volume yields 1 GB.

Thus, for high-throughput indexes on 64-bit operating systems, maxDataSize = auto_high_volume yields a 10 GB maximum hot bucket size.
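The mapping from maxDataSize values to a size limit can be summarized in a short Python helper. The function name is hypothetical, and expressing the documented 10 GB and 1 GB values as 10240 MB and 1024 MB assumes binary units.

```python
def max_hot_bucket_mb(max_data_size: str, is_64bit: bool = True) -> int:
    """Approximate hot bucket limit in MB for an indexes.conf maxDataSize value."""
    if max_data_size == "auto":
        return 750  # documented default
    if max_data_size == "auto_high_volume":
        # Documented as 10 GB (64-bit) / 1 GB (32-bit); the binary MB conversion is assumed.
        return 10 * 1024 if is_64bit else 1024
    # Otherwise the value is an explicit size in MB; int() raises ValueError if it is not.
    return int(max_data_size)


print(max_hot_bucket_mb("auto_high_volume"))
```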

Reference (Splunk Enterprise Documentation):

• indexes.conf: maxDataSize Attribute Reference

• Managing Index Buckets and Data Retention

• Splunk Enterprise Admin Manual: Indexer Storage Configuration

• Splunk Performance Tuning: Bucket Management and Hot/Warm Transitions