Practice Free SCS-C02 Exam Online Questions

A company is running workloads in a single AWS account on Amazon EC2 instances and Amazon EMR clusters. A recent security audit revealed that multiple Amazon Elastic Block Store (Amazon EBS) volumes and snapshots are not encrypted.

The company’s security engineer is working on a solution that will allow users to deploy EC2 instances and EMR clusters while ensuring that all new EBS volumes and EBS snapshots are encrypted at rest. The solution must also minimize operational overhead.

Which steps should the security engineer take to meet these requirements?

- A . Create an Amazon EventBridge (Amazon CloudWatch Events) event with an EC2 instance as the source and create volume as the event trigger. When the event is triggered, invoke an AWS Lambda function to evaluate and notify the security engineer if the EBS volume that was created is not encrypted.

- B . Use a customer managed IAM policy that will verify that the encryption flag of the CreateVolume context is set to true. Apply this rule to all users.

- C . Create an AWS Config rule to evaluate the configuration of each EC2 instance on creation or modification. Have the AWS Config rule trigger an AWS Lambda function to alert the security team and terminate the instance if the EBS volume is not encrypted.

- D . Use the AWS Management Console or AWS CLI to enable encryption by default for EBS volumes in each AWS Region where the company operates.
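Option D refers to the account-level setting that encrypts new EBS volumes by default, which is configured separately in each Region. A minimal sketch that assembles the corresponding AWS CLI commands, assuming a hypothetical list of Regions (the Region names below are illustrative and do not come from the question):

```python
import shlex


def ebs_default_encryption_commands(regions):
    """Return one AWS CLI command per Region that turns on EBS encryption by default."""
    commands = []
    for region in regions:
        # The setting is Region-scoped, so the command is repeated for every Region in use.
        args = ["aws", "ec2", "enable-ebs-encryption-by-default", "--region", region]
        commands.append(shlex.join(args))
    return commands


# Hypothetical Regions; substitute the Regions where the company operates.
commands = ebs_default_encryption_commands(["us-east-1", "eu-west-1"])
for command in commands:
    print(command)
```

The sketch only prints the commands; running them requires credentials that are allowed to change the EC2 account settings.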

A company receives a notification from the AWS Abuse team about an AWS account. The notification indicates that a resource in the account is compromised. The company determines that the compromised resource is an Amazon EC2 instance that hosts a web application. The compromised EC2 instance is part of an EC2 Auto Scaling group.

The EC2 instance accesses Amazon S3 and Amazon DynamoDB resources by using an IAM access key and secret key. The IAM access key and secret key are stored inside the AMI that is specified in the Auto Scaling group’s launch configuration. The company is concerned that the credentials that are stored in the AMI might also have been exposed.

The company must implement a solution that remediates the security concerns without causing downtime for the application. The solution must comply with security best practices.

Which solution will meet these requirements?

- A . Rotate the potentially compromised access key that the EC2 instance uses. Create a new AMI without the potentially compromised credentials. Perform an EC2 Auto Scaling instance refresh.

- B . Delete or deactivate the potentially compromised access key. Create an EC2 Auto Scaling linked IAM role that includes a custom policy that matches the potentially compromised access key permissions. Associate the new IAM role with the Auto Scaling group. Perform an EC2 Auto Scaling instance refresh.

- C . Delete or deactivate the potentially compromised access key. Create a new AMI without the potentially compromised credentials. Create an IAM role that includes the correct permissions. Create a launch template for the Auto Scaling group to reference the new AMI and IAM role. Perform an EC2 Auto Scaling instance refresh.

- D . Rotate the potentially compromised access key. Create a new AMI without the potentially compromised access key. Use a user data script to supply the new access key as environment variables in the Auto Scaling group’s launch configuration. Perform an EC2 Auto Scaling instance refresh.

C

Explanation:

The AWS documentation states that you can create a new AMI without the potentially compromised credentials and create an IAM role that includes the correct permissions. You can then create a launch template for the Auto Scaling group to reference the new AMI and IAM role. With a role, the instances obtain temporary credentials instead of relying on long-term keys stored in the AMI, and the instance refresh replaces instances gradually, so this is the most secure way to remediate the security concerns without causing downtime for the application.

References: AWS Security Best Practices
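The launch template and instance refresh described above can be sketched as plain request payloads. This is a minimal illustration, assuming hypothetical names (`ami-0123456789abcdef0`, `web-app-profile`, `web-asg`, `t3.micro`) and an assumed minimum healthy percentage of 90; the dictionaries follow the shape of the EC2 `LaunchTemplateData` structure and the Auto Scaling instance refresh request.

```python
def build_launch_template_data(ami_id, instance_profile_name, instance_type):
    """Build LaunchTemplateData: the clean AMI plus an instance profile, no static keys."""
    return {
        "ImageId": ami_id,
        "InstanceType": instance_type,
        # The instance profile wraps the IAM role; instances receive temporary
        # credentials from it, so nothing has to be stored in the AMI.
        "IamInstanceProfile": {"Name": instance_profile_name},
    }


def build_instance_refresh_request(asg_name, min_healthy_percentage=90):
    """Build arguments for a rolling refresh that keeps most capacity in service."""
    return {
        "AutoScalingGroupName": asg_name,
        "Strategy": "Rolling",
        "Preferences": {"MinHealthyPercentage": min_healthy_percentage},
    }


# Hypothetical identifiers, used only for illustration.
template_data = build_launch_template_data(
    "ami-0123456789abcdef0", "web-app-profile", "t3.micro"
)
refresh_request = build_instance_refresh_request("web-asg")
print(template_data)
print(refresh_request)
```

Keeping the minimum healthy percentage high is what lets the refresh replace instances in batches while the application keeps serving traffic.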

A company needs a solution to protect critical data from being permanently deleted. The data is stored in Amazon S3 buckets.

The company needs to replicate the S3 objects from the company’s primary AWS Region to a secondary Region to meet disaster recovery requirements. The company must also ensure that users who have administrator access cannot permanently delete the data in the secondary Region.

Which solution will meet these requirements?

- A . Configure AWS Backup to perform cross-Region S3 backups. Select a backup vault in the secondary Region. Enable AWS Backup Vault Lock in governance mode for the backups in the secondary Region.

- B . Implement S3 Object Lock in compliance mode in the primary Region. Configure S3 replication to replicate the objects to an S3 bucket in the secondary Region.

- C . Configure S3 replication to replicate the objects to an S3 bucket in the secondary Region. Create an S3 bucket policy to deny the s3:ReplicateDelete action on the S3 bucket in the secondary Region.

- D . Configure S3 replication to replicate the objects to an S3 bucket in the secondary Region. Configure S3 object versioning on the S3 bucket in the secondary Region.

B

Explanation:

Implementing S3 Object Lock in compliance mode in the primary Region and configuring S3 replication to a secondary Region ensures the immutability of S3 objects, preventing them from being deleted or altered. This setup meets the requirement of protecting critical data from permanent deletion, even by users with administrative access. The replicated objects in the secondary Region carry the Object Lock retention settings from the primary, provided that Object Lock is also enabled on the destination bucket, ensuring consistent protection across Regions and aligning with disaster recovery requirements.
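As a rough illustration of the compliance-mode setting discussed above, the following sketch builds a default-retention configuration in the shape that the S3 Object Lock configuration uses. The 365-day retention period is an assumption made for the example; the question does not state one.

```python
def build_object_lock_configuration(retention_days):
    """Build a default-retention Object Lock configuration that uses compliance mode."""
    if retention_days <= 0:
        raise ValueError("retention_days must be positive")
    return {
        "ObjectLockEnabled": "Enabled",
        "Rule": {
            "DefaultRetention": {
                # In COMPLIANCE mode no user, including the root user, can delete
                # a protected object version or shorten its retention period.
                "Mode": "COMPLIANCE",
                "Days": retention_days,
            }
        },
    }


# Hypothetical retention period of one year.
lock_configuration = build_object_lock_configuration(365)
print(lock_configuration)
```

Governance mode would use the same structure with `"Mode": "GOVERNANCE"`, but users with the right permission can bypass it, which is why it does not satisfy the administrator requirement.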

An ecommerce company has a web application architecture that runs primarily on containers. The application containers are deployed on Amazon Elastic Container Service (Amazon ECS). The container images for the application are stored in Amazon Elastic Container Registry (Amazon ECR).

The company’s security team is performing an audit of components of the application architecture. The security team identifies issues with some container images that are stored in the container repositories.

The security team wants to address these issues by implementing continual scanning and on-push scanning of the container images. The security team needs to implement a solution that makes any findings from these scans visible in a centralized dashboard. The security team plans to use the dashboard to view these findings along with other security-related findings that they intend to generate in the future.

There are specific repositories that the security team needs to exclude from the scanning process.

Which solution will meet these requirements?

- A . Use Amazon Inspector. Create inclusion rules in Amazon ECR to match repositories that need to be scanned. Push Amazon Inspector findings to AWS Security Hub.

- B . Use ECR basic scanning of container images. Create inclusion rules in Amazon ECR to match repositories that need to be scanned. Push findings to AWS Security Hub.

- C . Use ECR basic scanning of container images. Create inclusion rules in Amazon ECR to match repositories that need to be scanned. Push findings to Amazon Inspector.

- D . Use Amazon Inspector. Create inclusion rules in Amazon Inspector to match repositories that need to be scanned. Push Amazon Inspector findings to AWS Config.
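Several options mention inclusion rules in Amazon ECR. In the ECR registry scanning configuration, such rules take the form of repository filters attached to a scan frequency. A minimal sketch of that structure follows, assuming enhanced scanning and hypothetical repository name patterns (`prod-*`, `staging-*`); repositories to be excluded are simply not matched by any filter.

```python
def build_scanning_rule(scan_frequency, name_patterns):
    """Build one scanning rule that includes repositories matching the given patterns."""
    return {
        "scanFrequency": scan_frequency,
        "repositoryFilters": [
            {"filter": pattern, "filterType": "WILDCARD"} for pattern in name_patterns
        ],
    }


def build_registry_scanning_configuration(continuous_patterns, on_push_patterns):
    """Build a registry scanning configuration with continuous and on-push rules."""
    return {
        # ENHANCED is the scan type backed by Amazon Inspector; BASIC is the alternative.
        "scanType": "ENHANCED",
        "rules": [
            build_scanning_rule("CONTINUOUS_SCAN", continuous_patterns),
            build_scanning_rule("SCAN_ON_PUSH", on_push_patterns),
        ],
    }


# Hypothetical repository name patterns.
scanning_configuration = build_registry_scanning_configuration(["prod-*"], ["staging-*"])
print(scanning_configuration)
```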

A company has AWS accounts in an organization in AWS Organizations. The company needs to install a corporate software package on all Amazon EC2 instances for all the accounts in the organization.

A central account provides base AMIs for the EC2 instances. The company uses AWS Systems Manager for software inventory and patching operations.

A security engineer must implement a solution that detects EC2 instances that do not have the required software. The solution also must automatically install the software if the software is not present.

Which solution will meet these requirements?

- A . Provide new AMIs that have the required software pre-installed. Apply a tag to the AMIs to indicate that the AMIs have the required software. Configure an SCP that allows new EC2 instances to be launched only if the instances have the tagged AMIs. Tag all existing EC2 instances.

- B . Configure a custom patch baseline in Systems Manager Patch Manager. Add the package name for the required software to the approved packages list. Associate the new patch baseline with all EC2 instances. Set up a maintenance window for software deployment.

- C . Centrally enable AWS Config. Set up the ec2-managedinstance-applications-required AWS Config rule for all accounts. Create an Amazon EventBridge rule that reacts to AWS Config events. Configure the EventBridge rule to invoke an AWS Lambda function that uses Systems Manager Run Command to install the required software.

- D . Create a new Systems Manager Distributor package for the required software. Specify the download location. Select all EC2 instances in the different accounts. Install the software by using Systems Manager Run Command.

C

Explanation:

Using AWS Config with the managed rule ec2-managedinstance-applications-required enables detection of EC2 instances that lack the required software across all accounts in the organization. An Amazon EventBridge rule that triggers on AWS Config events can invoke an AWS Lambda function, which in turn uses AWS Systems Manager Run Command to install the software on noncompliant instances. This approach provides both continuous detection and automated remediation, which the other options do not.
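The detection and remediation flow above can be sketched as an EventBridge event pattern plus the request that a Lambda function might pass to Run Command. This is a minimal sketch under several assumptions: the deployed rule keeps the managed rule's name, `resourceId` in the event holds the instance ID, the instances run Linux, and the install command (`yum install -y corporate-agent`) is a hypothetical placeholder.

```python
CONFIG_RULE_NAME = "ec2-managedinstance-applications-required"


def build_event_pattern(rule_name=CONFIG_RULE_NAME):
    """Match AWS Config compliance changes to NON_COMPLIANT for one rule."""
    return {
        "source": ["aws.config"],
        "detail-type": ["Config Rules Compliance Change"],
        "detail": {
            "configRuleName": [rule_name],
            "newEvaluationResult": {"complianceType": ["NON_COMPLIANT"]},
        },
    }


def build_run_command_request(event, install_commands):
    """Return Run Command arguments for a noncompliant instance, or None otherwise."""
    detail = event["detail"]
    if detail["newEvaluationResult"]["complianceType"] != "NON_COMPLIANT":
        return None
    return {
        # Assumption: resourceId carries the managed instance ID.
        "InstanceIds": [detail["resourceId"]],
        "DocumentName": "AWS-RunShellScript",
        "Parameters": {"commands": list(install_commands)},
    }


# Hypothetical event and install command, for illustration only.
sample_event = {
    "source": "aws.config",
    "detail-type": "Config Rules Compliance Change",
    "detail": {
        "configRuleName": CONFIG_RULE_NAME,
        "resourceId": "i-0123456789abcdef0",
        "newEvaluationResult": {"complianceType": "NON_COMPLIANT"},
    },
}
request = build_run_command_request(sample_event, ["yum install -y corporate-agent"])
print(build_event_pattern())
print(request)
```

In a real Lambda function, the returned dictionary would be passed to the Systems Manager send-command call; the sketch stops short of that so it can run without AWS credentials.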

Your CTO is very worried about the security of your AWS account.

How best can you prevent hackers from completely hijacking your account?

- A . Use a short but complex password on the root account and any administrators.

- B . Use AWS IAM Geo-Lock and disallow anyone from logging in except for in your city.

- C . Use MFA on all users and accounts, especially on the root account.

- D . Don’t write down or remember the root account password after creating the AWS account.

C

Explanation:

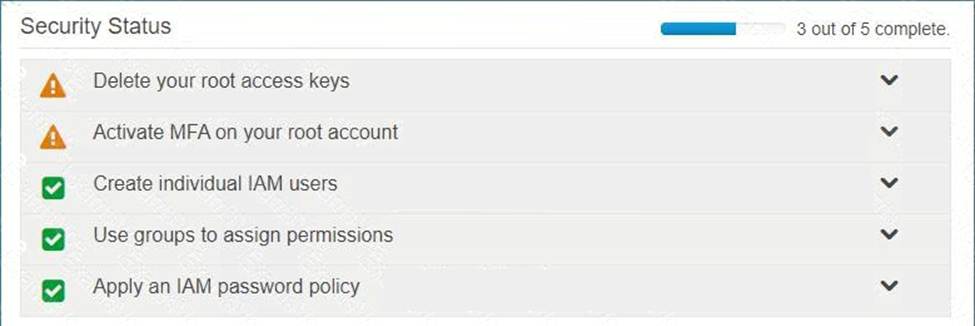

Multi-factor authentication (MFA) adds one more layer of security to your AWS account. Even the Security Credentials dashboard lists enabling MFA on your root account as one of its items.

Option A is invalid because you need a good password policy. Option B is invalid because there is no AWS IAM Geo-Lock. Option D is invalid because this is not a recommended practice.

For more information on MFA, visit the following URL:

http://docs.aws.amazon.com/IAM/latest/UserGuide/id_credentials_mfa.html

The correct answer is: Use MFA on all users and accounts, especially on the root account.
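For IAM users, MFA can also be enforced through policy rather than left optional. The following is a minimal sketch of a deny-unless-MFA policy document, built on the `aws:MultiFactorAuthPresent` condition key; the statement ID and the exact list of exempted actions are illustrative choices. IAM policies do not restrict the root user, so MFA on the root account still has to be enabled separately.

```python
import json

# Actions a user still needs without MFA in order to enroll an MFA device.
MFA_SETUP_ACTIONS = [
    "iam:CreateVirtualMFADevice",
    "iam:EnableMFADevice",
    "iam:GetUser",
    "iam:ListMFADevices",
    "iam:ListVirtualMFADevices",
    "iam:ResyncMFADevice",
    "sts:GetSessionToken",
]


def build_deny_without_mfa_policy():
    """Build a policy that denies everything except MFA setup when MFA is absent."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyAllExceptMfaSetupWithoutMfa",
                "Effect": "Deny",
                "NotAction": MFA_SETUP_ACTIONS,
                "Resource": "*",
                # BoolIfExists also covers requests where the key is missing entirely.
                "Condition": {
                    "BoolIfExists": {"aws:MultiFactorAuthPresent": "false"}
                },
            }
        ],
    }


mfa_policy = build_deny_without_mfa_policy()
mfa_policy_json = json.dumps(mfa_policy, indent=2)
print(mfa_policy_json)
```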


A security engineer is setting up an AWS CloudTrail trail for all Regions in an AWS account. For added security, the logs are stored using server-side encryption with AWS KMS-managed keys (SSE-KMS) and have log integrity validation enabled.

While testing the solution, the security engineer discovers that the digest files are readable, but the log files are not.

What is the MOST likely cause?

- A . The log files fail integrity validation and automatically are marked as unavailable.

- B . The KMS key policy does not grant the security engineer’s IAM user or role permissions to decrypt with it.

- C . The bucket is set up to use server-side encryption with Amazon S3-managed keys (SSE-S3) as the default and does not allow SSE-KMS-encrypted files.

- D . An IAM policy applicable to the security engineer’s IAM user or role denies access to the "CloudTrail" prefix in the Amazon S3 bucket.
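Option B concerns the KMS key policy. Reading an object encrypted with SSE-KMS requires `kms:Decrypt` on the key in addition to S3 read access. A minimal sketch of a key policy statement that grants it follows, assuming a hypothetical role ARN (`arn:aws:iam::111122223333:role/SecurityEngineer`) and statement ID.

```python
import copy


def build_decrypt_statement(principal_arn):
    """Build a key policy statement that lets one principal decrypt with the key."""
    return {
        "Sid": "AllowLogDecryption",
        "Effect": "Allow",
        "Principal": {"AWS": principal_arn},
        "Action": "kms:Decrypt",
        # In a key policy, "*" refers to the key the policy is attached to.
        "Resource": "*",
    }


def with_decrypt_access(key_policy, principal_arn):
    """Return a copy of the key policy with the decrypt statement appended."""
    updated = copy.deepcopy(key_policy)
    updated.setdefault("Statement", []).append(build_decrypt_statement(principal_arn))
    return updated


# Hypothetical starting policy and role ARN.
existing_policy = {"Version": "2012-10-17", "Statement": []}
updated_policy = with_decrypt_access(
    existing_policy, "arn:aws:iam::111122223333:role/SecurityEngineer"
)
print(updated_policy)
```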

There is a requirement for a company to transfer large amounts of data between AWS and an on-premises location. There is an additional requirement for low latency and high consistency traffic to AWS.

Given these requirements, how would you design a hybrid architecture? Choose the correct answer from the options below.

- A . Provision a Direct Connect connection to an AWS Region using a Direct Connect partner.

- B . Create a VPN tunnel for private connectivity, which increases network consistency and reduces latency.

- C . Create an IPsec tunnel for private connectivity, which increases network consistency and reduces latency.

- D . Create a VPC peering connection between AWS and the customer gateway.

A

Explanation:

AWS Direct Connect makes it easy to establish a dedicated network connection from your premises to AWS. Using AWS Direct Connect, you can establish private connectivity between AWS and your datacenter, office, or colocation environment, which in many cases can reduce your network costs, increase bandwidth throughput, and provide a more consistent network experience than Internet-based connections.

Options B and C are invalid because these options will not reduce network latency.

Option D is invalid because VPC peering is only used to connect two VPCs.

For more information on AWS Direct Connect, browse to the following URL:

https://aws.amazon.com/directconnect

The correct answer is: Provision a Direct Connect connection to an AWS Region using a Direct Connect partner.

A company’s security engineer wants to receive an email alert whenever Amazon GuardDuty, AWS Identity and Access Management Access Analyzer, or Amazon Macie generates a high-severity security finding. The company uses AWS Control Tower to govern all of its accounts. The company also uses AWS Security Hub with all of the AWS service integrations turned on.

Which solution will meet these requirements with the LEAST operational overhead?

- A . Set up separate AWS Lambda functions for GuardDuty, IAM Access Analyzer, and Macie to call each service’s public API to retrieve high-severity findings. Use Amazon Simple Notification Service (Amazon SNS) to send the email alerts. Create an Amazon EventBridge rule to invoke the functions on a schedule.

- B . Create an Amazon EventBridge rule with a pattern that matches Security Hub findings events with high severity. Configure the rule to send the findings to a target Amazon Simple Notification Service (Amazon SNS) topic. Subscribe the desired email addresses to the SNS topic.

- C . Create an Amazon EventBridge rule with a pattern that matches AWS Control Tower events with high severity. Configure the rule to send the findings to a target Amazon Simple Notification Service (Amazon SNS) topic. Subscribe the desired email addresses to the SNS topic.

- D . Host an application on Amazon EC2 to call the GuardDuty, IAM Access Analyzer, and Macie APIs. Within the application, use the Amazon Simple Notification Service (Amazon SNS) API to retrieve high-severity findings and to send the findings to an SNS topic. Subscribe the desired email addresses to the SNS topic.

B

Explanation:

The AWS documentation states that you can create an Amazon EventBridge rule with a pattern that matches Security Hub findings events with high severity. You can then configure the rule to send the findings to a target Amazon Simple Notification Service (Amazon SNS) topic and subscribe the desired email addresses to the topic. Because Security Hub already aggregates findings from GuardDuty, IAM Access Analyzer, and Macie, a single rule covers all three services, so this approach meets the requirements with the least operational overhead.

References: AWS Security Hub User Guide
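The rule described above can be sketched as an EventBridge event pattern, together with a small helper that mirrors what the pattern selects. This is a minimal sketch: the sample event and finding titles are invented for illustration, and only the `HIGH` label is matched (adding `CRITICAL` would be a reasonable extension).

```python
def build_high_severity_pattern():
    """Match imported Security Hub findings whose severity label is HIGH."""
    return {
        "source": ["aws.securityhub"],
        "detail-type": ["Security Hub Findings - Imported"],
        "detail": {"findings": {"Severity": {"Label": ["HIGH"]}}},
    }


def high_severity_titles(event):
    """Return the titles of HIGH findings in an event, mirroring the pattern above."""
    findings = event.get("detail", {}).get("findings", [])
    return [
        finding["Title"]
        for finding in findings
        if finding.get("Severity", {}).get("Label") == "HIGH"
    ]


# Hypothetical event containing one HIGH and one LOW finding.
sample_event = {
    "source": "aws.securityhub",
    "detail-type": "Security Hub Findings - Imported",
    "detail": {
        "findings": [
            {"Title": "Example high finding", "Severity": {"Label": "HIGH"}},
            {"Title": "Example low finding", "Severity": {"Label": "LOW"}},
        ]
    },
}
print(build_high_severity_pattern())
print(high_severity_titles(sample_event))
```

With the SNS topic set as the rule target, no Lambda function or polling schedule is needed, which is what keeps the operational overhead low.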

A company has an application that needs to read objects from an Amazon S3 bucket. The company configures an IAM policy and attaches the policy to an IAM role that the application uses. When the application tries to read objects from the S3 bucket, the application receives Access Denied errors. A security engineer must resolve this problem without decreasing the security of the S3 bucket or the application.

- A . Attach a resource policy to the S3 bucket to grant read access to the role.

- B . Launch a new deployment of the application in a different AWS Region. Attach the role to the application.

- C . Review the IAM policy by using AWS Identity and Access Management Access Analyzer to ensure that the policy grants the right permissions. Validate that the application is assuming the role correctly.

- D . Ensure that the S3 Block Public Access feature is disabled on the S3 bucket. Review AWS CloudTrail logs to validate that the application is assuming the role correctly.