Practice Free Professional Data Engineer Exam Online Questions

You are building an application to share financial market data with consumers, who will receive data feeds. Data is collected from the markets in real time.

Consumers will receive the data in the following ways:

Real-time event stream

ANSI SQL access to real-time stream and historical data

Batch historical exports

Which solution should you use?

- A . Cloud Dataflow, Cloud SQL, Cloud Spanner

- B . Cloud Pub/Sub, Cloud Storage, BigQuery

- C . Cloud Dataproc, Cloud Dataflow, BigQuery

- D . Cloud Pub/Sub, Cloud Dataproc, Cloud SQL

You are building a new data pipeline to share data between two different types of applications: jobs generators and job runners. Your solution must scale to accommodate increases in usage and must accommodate the addition of new applications without negatively affecting the performance of existing ones.

What should you do?

- A . Create an API using App Engine to receive and send messages to the applications

- B . Use a Cloud Pub/Sub topic to publish jobs, and use subscriptions to execute them

- C . Create a table on Cloud SQL, and insert and delete rows with the job information

- D . Create a table on Cloud Spanner, and insert and delete rows with the job information

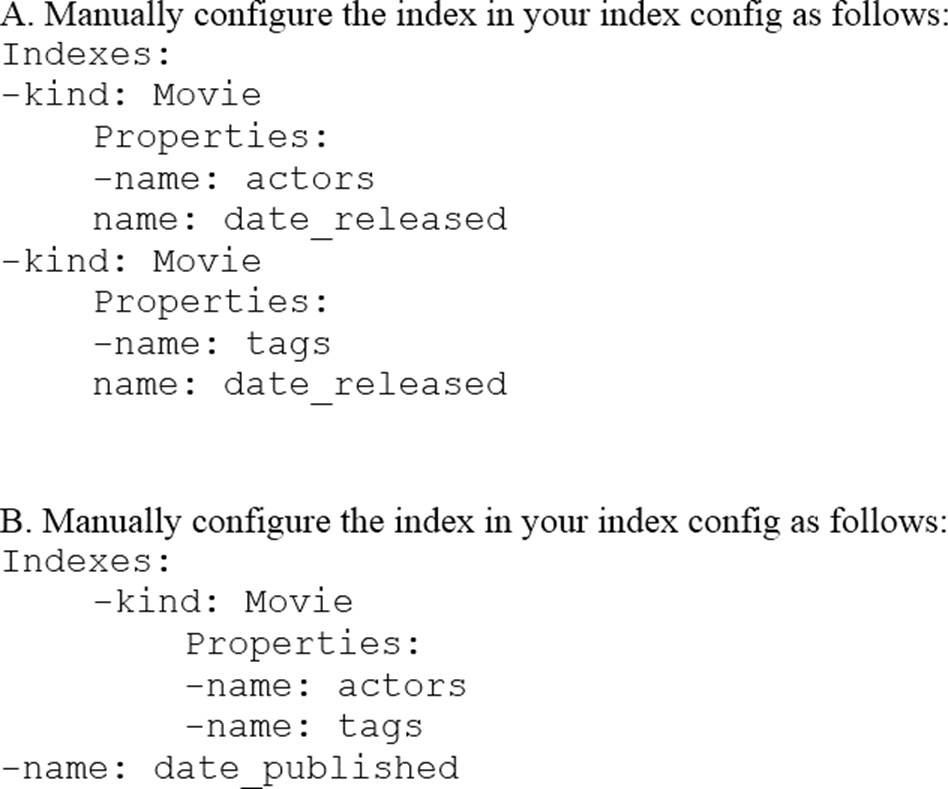

You are deploying a new storage system for your mobile application, which is a media streaming service. You decide the best fit is Google Cloud Datastore. You have entities with multiple properties, some of which can take on multiple values. For example, in the entity ‘Movie’ the property ‘actors’ and the property ‘tags’ have multiple values but the property ‘date released’ does not. A typical query would ask for all movies with actor=<actorname> ordered by date_released or all movies with tag=Comedy ordered by date_released.

How should you avoid a combinatorial explosion in the number of indexes?

- A . Option A

- B . Option B.

- C . Option C

- D . Option D

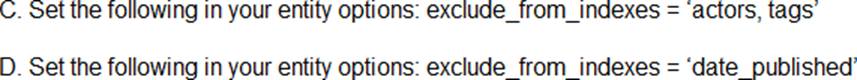

You are deploying a new storage system for your mobile application, which is a media streaming service. You decide the best fit is Google Cloud Datastore. You have entities with multiple properties, some of which can take on multiple values. For example, in the entity ‘Movie’ the property ‘actors’ and the property ‘tags’ have multiple values but the property ‘date released’ does not. A typical query would ask for all movies with actor=<actorname> ordered by date_released or all movies with tag=Comedy ordered by date_released.

How should you avoid a combinatorial explosion in the number of indexes?

- A . Option A

- B . Option B.

- C . Option C

- D . Option D

You store and analyze your relational data in BigQuery on Google Cloud with all data that resides in US regions. You also have a variety of object stores across Microsoft Azure and Amazon Web Services (AWS), also in US regions. You want to query all your data in BigQuery daily with as little movement of data as possible.

What should you do?

- A . Load files from AWS and Azure to Cloud Storage with Cloud Shell gautil rsync arguments.

- B . Create a Dataflow pipeline to ingest files from Azure and AWS to BigQuery.

- C . Use the BigQuery Omni functionality and BigLake tables to query files in Azure and AWS.

- D . Use BigQuery Data Transfer Service to load files from Azure and AWS into BigQuery.

B

Explanation:

BigQuery Omni is a multi-cloud analytics solution that lets you use the BigQuery interface to analyze data stored in other public clouds, such as AWS and Azure, without moving or copying the data. BigLake tables are a type of external table that let you query structured data in external data stores with access delegation. By using BigQuery Omni and BigLake tables, you can query data in AWS and Azure object stores directly from BigQuery, with minimal data movement and consistent performance.

Reference:

1: Introduction to BigLake tables

2: Deep dive on how BigLake accelerates query performance

3: BigQuery Omni and BigLake (Analytics Data Federation on GCP)

A web server sends click events to a Pub/Sub topic as messages. The web server includes an event Timestamp attribute in the messages, which is the time when the click occurred. You have a Dataflow streaming job that reads from this Pub/Sub topic through a subscription, applies some transformations, and writes the result to another Pub/Sub topic for use by the advertising department. The advertising department needs to receive each message within 30 seconds of the corresponding click occurrence, but they report receiving the messages late. Your Dataflow job’s system lag is about 5 seconds, and the data freshness is about 40 seconds. Inspecting a few messages show no more than 1 second lag between their event Timestamp and publish Time.

What is the problem and what should you do?

- A . The advertising department is causing delays when consuming the messages. Work with the advertising department to fix this.

- B . Messages in your Dataflow job are processed in less than 30 seconds, but your job cannot keep up with the backlog in the Pub/Sub

subscription. Optimize your job or increase the number of workers to fix this. - C . The web server is not pushing messages fast enough to Pub/Sub. Work with the web server team to fix this.

- D . Messages in your Dataflow job are taking more than 30 seconds to process. Optimize your job or increase the number of workers to fix this.

B

Explanation:

To ensure that the advertising department receives messages within 30 seconds of the click occurrence, and given the current system lag and data freshness metrics, the issue likely lies in the processing capacity of the Dataflow job.

Here’s why option B is the best choice:

System Lag and Data Freshness:

The system lag of 5 seconds indicates that Dataflow itself is processing messages relatively quickly.

However, the data freshness of 40 seconds suggests a significant delay before processing begins, indicating a backlog.

Backlog in Pub/Sub Subscription:

A backlog occurs when the rate of incoming messages exceeds the rate at which the Dataflow job can process them, causing delays.

Optimizing the Dataflow Job:

To handle the incoming message rate, the Dataflow job needs to be optimized or scaled up by increasing the number of workers, ensuring it can keep up with the message inflow.

Steps to Implement:

Analyze the Dataflow Job:

Inspect the Dataflow job metrics to identify bottlenecks and inefficiencies.

Optimize Processing Logic:

Optimize the transformations and operations within the Dataflow pipeline to improve processing efficiency.

Increase Number of Workers:

Scale the Dataflow job by increasing the number of workers to handle the higher load, reducing the backlog.

Reference: Links:

Dataflow Monitoring

Scaling Dataflow Jobs

Business owners at your company have given you a database of bank transactions. Each row contains the user ID, transaction type, transaction location, and transaction amount. They ask you to investigate what type of machine learning can be applied to the data.

Which three machine learning applications can you use? (Choose three.)

- A . Supervised learning to determine which transactions are most likely to be fraudulent.

- B . Unsupervised learning to determine which transactions are most likely to be fraudulent.

- C . Clustering to divide the transactions into N categories based on feature similarity.

- D . Supervised learning to predict the location of a transaction.

- E . Reinforcement learning to predict the location of a transaction.

- F . Unsupervised learning to predict the location of a transaction.

Flowlogistic is rolling out their real-time inventory tracking system. The tracking devices will all send package-tracking messages, which will now go to a single Google Cloud Pub/Sub topic instead of the Apache Kafka cluster. A subscriber application will then process the messages for real-time reporting and store them in Google BigQuery for historical analysis. You want to ensure the package data can be analyzed over time.

Which approach should you take?

- A . Attach the timestamp on each message in the Cloud Pub/Sub subscriber application as they are received.

- B . Attach the timestamp and Package ID on the outbound message from each publisher device as they are sent to Clod Pub/Sub.

- C . Use the NOW () function in BigQuery to record the event’s time.

- D . Use the automatically generated timestamp from Cloud Pub/Sub to order the data.

Flowlogistic is rolling out their real-time inventory tracking system. The tracking devices will all send package-tracking messages, which will now go to a single Google Cloud Pub/Sub topic instead of the Apache Kafka cluster. A subscriber application will then process the messages for real-time reporting and store them in Google BigQuery for historical analysis. You want to ensure the package data can be analyzed over time.

Which approach should you take?

- A . Attach the timestamp on each message in the Cloud Pub/Sub subscriber application as they are received.

- B . Attach the timestamp and Package ID on the outbound message from each publisher device as they are sent to Clod Pub/Sub.

- C . Use the NOW () function in BigQuery to record the event’s time.

- D . Use the automatically generated timestamp from Cloud Pub/Sub to order the data.

Flowlogistic is rolling out their real-time inventory tracking system. The tracking devices will all send package-tracking messages, which will now go to a single Google Cloud Pub/Sub topic instead of the Apache Kafka cluster. A subscriber application will then process the messages for real-time reporting and store them in Google BigQuery for historical analysis. You want to ensure the package data can be analyzed over time.

Which approach should you take?

- A . Attach the timestamp on each message in the Cloud Pub/Sub subscriber application as they are received.

- B . Attach the timestamp and Package ID on the outbound message from each publisher device as they are sent to Clod Pub/Sub.

- C . Use the NOW () function in BigQuery to record the event’s time.

- D . Use the automatically generated timestamp from Cloud Pub/Sub to order the data.