Practice Free Professional Cloud Developer Exam Online Questions

You are porting an existing Apache/MySQL/PHP application stack from a single machine to Google Kubernetes Engine. You need to determine how to containerize the application. Your approach should follow Google-recommended best practices for availability.

What should you do?

- A . Package each component in a separate container. Implement readiness and liveness probes.

- B . Package the application in a single container. Use a process management tool to manage each component.

- C . Package each component in a separate container. Use a script to orchestrate the launch of the components.

- D . Package the application in a single container. Use a bash script as an entrypoint to the container, and then spawn each component as a background job.

A

Explanation:

https://cloud.google.com/blog/products/containers-kubernetes/7-best-practices-for-building-containers

https://cloud.google.com/architecture/best-practices-for-building-containers

"classic Apache/MySQL/PHP stack: you might be tempted to run all the components in a single container. However, the best practice is to use two or three different containers: one for Apache, one for MySQL, and potentially one for PHP if you are running PHP-FPM."
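Readiness and liveness probes are usually HTTP endpoints that the kubelet polls. If a liveness probe fails, the container is restarted. If a readiness probe fails, the Pod is taken out of Service endpoints until it recovers. The sketch below uses Python only to show how the two checks differ; the Apache and MySQL containers would use their own checks. The paths `/healthz` and `/readyz`, port 8080, and the `AppState` flag are all assumptions. A Pod spec would point its `livenessProbe` and `readinessProbe` `httpGet` entries at these paths.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


class AppState:
    """Hypothetical readiness flag; flip `ready` once dependencies are reachable."""

    def __init__(self) -> None:
        self.ready = False


STATE = AppState()


def probe_status(path: str, state: AppState) -> int:
    """Map a probe path to the HTTP status code the kubelet will see."""
    if path == "/healthz":
        # Liveness: the process is running and can answer at all.
        return 200
    if path == "/readyz":
        # Readiness: only accept traffic once dependencies are available.
        return 200 if state.ready else 503
    return 404


class ProbeHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        self.send_response(probe_status(self.path, STATE))
        self.end_headers()


if __name__ == "__main__":
    # In a real container, set STATE.ready = True only after, for example,
    # the database connection succeeds; it is set immediately here for brevity.
    STATE.ready = True
    HTTPServer(("", 8080), ProbeHandler).serve_forever()
```

Keeping liveness separate from readiness matters here. If the MySQL container is slow to start, the Apache container is held out of rotation but is not restarted.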

Your development team is using Cloud Build to promote a Node.js application built on App Engine from your staging environment to production. The application relies on several directories of photos stored in a Cloud Storage bucket named webphotos-staging in the staging environment. After the promotion, these photos must be available in a Cloud Storage bucket named webphotos-prod in the production environment. You want to automate the process where possible.

What should you do?

A) Manually copy the photos to webphotos-prod.

B) Add a startup script in the application’s app.yaml file to move the photos from webphotos-staging to webphotos-prod.

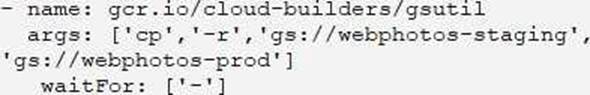

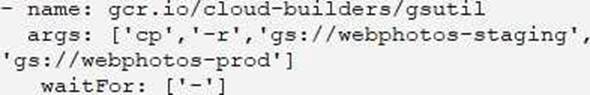

C) Add a build step in the cloudbuild.yaml file before the promotion step with the arguments:

D) Add a build step in the cloudbuild.yaml file before the promotion step with the arguments:

- A . Option A

- B . Option B

- C . Option C

- D . Option D

C

Explanation:

https://cloud.google.com/storage/docs/gsutil/commands/cp
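The exact arguments for option C appear only in the image above. The sketch below assumes they amount to a recursive `gsutil cp` from the staging bucket to the production bucket, which matches the linked `cp` reference. Cloud Build accepts JSON as well as YAML config files, so the snippet builds the step as a Python dict and prints it as JSON. The promotion step that follows it is left as a placeholder.

```python
import json


def copy_photos_step(src_bucket: str, dst_bucket: str) -> dict:
    """Build a Cloud Build step that copies every object from one bucket to another."""
    return {
        # Cloud Builders image whose entrypoint is the gsutil CLI.
        "name": "gcr.io/cloud-builders/gsutil",
        # -m parallelizes the copy; -r recurses into the photo "directories".
        # gsutil expands the * wildcard itself, so no shell is needed.
        "args": ["-m", "cp", "-r", f"gs://{src_bucket}/*", f"gs://{dst_bucket}"],
    }


config = {
    "steps": [
        copy_photos_step("webphotos-staging", "webphotos-prod"),
        # ... the existing promotion step would follow here ...
    ]
}

if __name__ == "__main__":
    print(json.dumps(config, indent=2))
```

Because the copy runs as a build step before promotion, the photos are already in webphotos-prod by the time the production version goes live, with no manual work involved.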

You need to migrate an internal file upload API with an enforced 500-MB file size limit to App Engine.

What should you do?

- A . Use FTP to upload files.

- B . Use CPanel to upload files.

- C . Use signed URLs to upload files.

- D . Change the API to be a multipart file upload API.

C

Explanation:

Reference: https://wiki.christophchamp.com/index.php?title=Google_Cloud_Platform
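Signed URLs fit this case because the API never handles the file bytes. It gives the client a short-lived URL that authorizes a direct upload to Cloud Storage, which sidesteps App Engine's per-request size limit (far below 500 MB). The standard-library sketch below shows only the underlying idea: an HMAC over the method, object name, and expiry, checked by the server that receives the upload. It is not Cloud Storage's V4 signing algorithm. With the `google-cloud-storage` client you would call `Blob.generate_signed_url(version="v4", method="PUT", ...)` instead. `SIGNING_KEY`, the host name, and the query parameter names are hypothetical.

```python
import hashlib
import hmac
from urllib.parse import parse_qs, urlencode, urlparse

# Hypothetical secret shared by the URL issuer and the upload endpoint.
SIGNING_KEY = b"hypothetical-signing-key"


def _signature(method: str, object_name: str, expires_at: int, key: bytes) -> str:
    payload = f"{method}\n{object_name}\n{expires_at}".encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def sign_upload_url(base_url: str, object_name: str, expires_at: int,
                    key: bytes = SIGNING_KEY) -> str:
    """Return a URL that authorizes a PUT of `object_name` until `expires_at`."""
    sig = _signature("PUT", object_name, expires_at, key)
    query = urlencode({"expires": expires_at, "signature": sig})
    return f"{base_url}/{object_name}?{query}"


def verify_upload_url(url: str, now: int, key: bytes = SIGNING_KEY) -> bool:
    """Accept the URL only if it is unexpired and its signature matches."""
    parsed = urlparse(url)
    params = parse_qs(parsed.query)
    try:
        expires_at = int(params["expires"][0])
        sig = params["signature"][0]
    except (KeyError, ValueError):
        return False
    if now > expires_at:
        return False
    expected = _signature("PUT", parsed.path.lstrip("/"), expires_at, key)
    # Constant-time comparison avoids leaking signature prefixes via timing.
    return hmac.compare_digest(sig, expected)
```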

Your team is creating a serverless web application on Cloud Run. The application needs to access images stored in a private Cloud Storage bucket. You want to give the application Identity and Access Management (IAM) permission to access the images in the bucket, while also securing the services using Google-recommended best practices.

What should you do?

- A . Enforce signed URLs for the desired bucket. Grant the Storage Object Viewer IAM role on the bucket to the Compute Engine default service account.

- B . Enforce public access prevention for the desired bucket. Grant the Storage Object Viewer IAM role on the bucket to the Compute Engine default service account.

- C . Enforce signed URLs for the desired bucket. Create and update the Cloud Run service to use a user-managed service account. Grant the Storage Object Viewer IAM role on the bucket to the service account.

- D . Enforce public access prevention for the desired bucket. Create and update the Cloud Run service to use a user-managed service account. Grant the Storage Object Viewer IAM role on the bucket to the service account.

You are developing a microservice-based application that will run on Google Kubernetes Engine (GKE). Some of the services need to access different Google Cloud APIs.

How should you set up authentication of these services in the cluster following Google-recommended best practices? (Choose two.)

- A . Use the service account attached to the GKE node.

- B . Enable Workload Identity in the cluster via the gcloud command-line tool.

- C . Access the Google service account keys from a secret management service.

- D . Store the Google service account keys in a central secret management service.

- E . Use gcloud to bind the Kubernetes service account and the Google service account using roles/iam.workloadIdentityUser.

BE

Explanation:

https://cloud.google.com/kubernetes-engine/docs/how-to/workload-identity
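Once Workload Identity is enabled on the cluster (option B), option E grants `roles/iam.workloadIdentityUser` on the Google service account to a principal that stands for the Kubernetes service account. The Kubernetes service account is then annotated with the Google service account's email. The helpers below only assemble those strings so their format is easy to see. The project, namespace, and account names in the usage are hypothetical placeholders.

```python
import shlex


def workload_identity_member(project_id: str, namespace: str, ksa_name: str) -> str:
    """IAM principal string that represents a Kubernetes service account."""
    return f"serviceAccount:{project_id}.svc.id.goog[{namespace}/{ksa_name}]"


def binding_command(gsa_email: str, project_id: str, namespace: str, ksa_name: str) -> list[str]:
    """gcloud argv that lets the Kubernetes SA impersonate the Google SA."""
    return [
        "gcloud", "iam", "service-accounts", "add-iam-policy-binding", gsa_email,
        "--role", "roles/iam.workloadIdentityUser",
        "--member", workload_identity_member(project_id, namespace, ksa_name),
    ]


def ksa_annotation(gsa_email: str) -> dict[str, str]:
    """Annotation to place on the Kubernetes ServiceAccount object."""
    return {"iam.gke.io/gcp-service-account": gsa_email}


if __name__ == "__main__":
    # Hypothetical identifiers; replace with your own.
    cmd = binding_command("orders-gsa@my-project.iam.gserviceaccount.com",
                          "my-project", "default", "orders-ksa")
    print(shlex.join(cmd))
```

Because each service gets its own Kubernetes service account mapped to its own Google service account, no key files need to be stored or rotated. This is why options C and D, which both rely on exported keys, are not the recommended approach.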

Your application is composed of a set of loosely coupled services orchestrated by code executed on Compute Engine. You want your application to easily bring up new Compute Engine instances that find and use a specific version of a service.

How should this be configured?

- A . Define your service endpoint information as metadata that is retrieved at runtime and used to connect to the desired service.

- B . Define your service endpoint information as label data that is retrieved at runtime and used to connect to the desired service.

- C . Define your service endpoint information to be retrieved from an environment variable at runtime and used to connect to the desired service.

- D . Define your service to use a fixed hostname and port to connect to the desired service. Replace the service at the endpoint with your new version.

A

Explanation:

https://cloud.google.com/service-infrastructure/docs/service-metadata/reference/rest#service-endpoint
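With option A, each new instance reads the endpoint of the service version it should use from its metadata at runtime. Changing the target version then means changing the metadata, not the code or the image. Custom instance metadata is served at `computeMetadata/v1/instance/attributes/<key>` on the metadata server, and requests must carry the `Metadata-Flavor: Google` header. The key name `orders-service-endpoint` is hypothetical. It could be set with, for example, `gcloud compute instances create ... --metadata orders-service-endpoint=10.0.0.5:8080`.

```python
import urllib.request

# Custom instance metadata lives under this path on the metadata server.
METADATA_ATTRS = "http://metadata.google.internal/computeMetadata/v1/instance/attributes/"


def build_metadata_request(key: str) -> urllib.request.Request:
    # The Metadata-Flavor header is required; requests without it are rejected.
    return urllib.request.Request(METADATA_ATTRS + key, headers={"Metadata-Flavor": "Google"})


def get_service_endpoint(key: str, timeout: float = 2.0) -> str:
    """Read a custom metadata value (only reachable from inside a Compute Engine VM)."""
    with urllib.request.urlopen(build_metadata_request(key), timeout=timeout) as resp:
        return resp.read().decode("utf-8")


if __name__ == "__main__":
    print(get_service_endpoint("orders-service-endpoint"))
```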

Your data is stored in Cloud Storage buckets. Fellow developers have reported that data downloaded from Cloud Storage is resulting in slow API performance. You want to research the issue to provide details to the GCP support team.

Which command should you run?

- A . gsutil test -o output.json gs://my-bucket

- B . gsutil perfdiag -o output.json gs://my-bucket

- C . gcloud compute scp example-instance:~/test-data -o output.json gs://my-bucket

- D . gcloud services test -o output.json gs://my-bucket

B

Explanation:

Reference: https://groups.google.com/forum/#!topic/gce-discussion/xBl9Jq5HDsY

Your team develops services that run on Google Cloud. You want to process messages sent to a Pub/Sub topic, and then store them. Each message must be processed exactly once to avoid duplication of data and any data conflicts. You need to use the cheapest and simplest solution.

What should you do?

- A . Process the messages with a Dataproc job, and write the output to storage.

- B . Process the messages with a Dataflow streaming pipeline using Apache Beam’s PubSubIO package, and write the output to storage.

- C . Process the messages with a Cloud Function, and write the results to a BigQuery location where you can run a job to deduplicate the data.

- D . Retrieve the messages with a Dataflow streaming pipeline, store them in Cloud Bigtable, and use another Dataflow streaming pipeline to deduplicate messages.

B

Explanation:

https://cloud.google.com/dataflow/docs/concepts/streaming-with-cloud-pubsub
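By default, Pub/Sub delivers messages at least once, so the same message can arrive more than once. Dataflow's Pub/Sub source deduplicates on the Pub/Sub message ID for you. That is why option B needs no separate deduplication stage, unlike options C and D. The plain-Python sketch below shows only the idea of ID-based deduplication. Its in-memory set is an assumption that would not survive restarts or span multiple workers, and handling that durably is exactly what Dataflow takes care of.

```python
from dataclasses import dataclass, field


@dataclass
class DedupingSink:
    """Stores each message payload once, keyed by its Pub/Sub message ID."""

    seen_ids: set[str] = field(default_factory=set)
    stored: list[bytes] = field(default_factory=list)

    def process(self, message_id: str, data: bytes) -> bool:
        # A redelivered message carries the same ID, so it is skipped.
        if message_id in self.seen_ids:
            return False
        self.seen_ids.add(message_id)
        self.stored.append(data)
        return True
```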

You are developing an internal application that will allow employees to organize community events within your company. You deployed your application on a single Compute Engine instance. Your company uses Google Workspace (formerly G Suite), and you need to ensure that the company employees can authenticate to the application from anywhere.

What should you do?

- A . Add a public IP address to your instance, and restrict access to the instance using firewall rules. Allow your company’s proxy as the only source IP address.

- B . Add an HTTP(S) load balancer in front of the instance, and set up Identity-Aware Proxy (IAP). Configure the IAP settings to allow your company domain to access the website.

- C . Set up a VPN tunnel between your company network and your instance’s VPC location on Google Cloud. Configure the required firewall rules and routing information to both the on-premises and Google Cloud networks.

- D . Add a public IP address to your instance, and allow traffic from the internet. Generate a random hash, and create a subdomain that includes this hash and points to your instance. Distribute this DNS address to your company’s employees.

B

Explanation:

https://cloud.google.com/blog/topics/developers-practitioners/control-access-your-web-sites-identity-aware-proxy
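IAP authenticates employees against their Google Workspace identity before a request reaches the instance. It then forwards identity headers such as `X-Goog-Authenticated-User-Email`, whose value has the form `accounts.google.com:user@domain`. The sketch below reads that header as an extra in-app check. `example.com` is a placeholder domain, and a plain dict stands in for a framework's case-insensitive header object. For real authorization, Google recommends verifying the signed JWT in the `x-goog-iap-jwt-assertion` header rather than trusting plain headers, because plain headers can be spoofed if traffic ever bypasses IAP.

```python
from typing import Mapping, Optional

IAP_EMAIL_HEADER = "X-Goog-Authenticated-User-Email"
IAP_PREFIX = "accounts.google.com:"


def iap_user_email(headers: Mapping[str, str]) -> Optional[str]:
    """Extract the user email that IAP adds to proxied requests."""
    raw = headers.get(IAP_EMAIL_HEADER, "")
    if not raw.startswith(IAP_PREFIX):
        return None
    return raw[len(IAP_PREFIX):]


def is_company_user(headers: Mapping[str, str], domain: str) -> bool:
    """True only if IAP reported a user whose email is in `domain`."""
    email = iap_user_email(headers)
    return email is not None and email.lower().endswith("@" + domain.lower())
```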