Practice Free Plat-Arch-204 Exam Online Questions

The director of customer service at Northern Trail Outfitters (NTO) wants to capture and trend specific business events that occur in Salesforce in real time. The metrics will be accessed in an ad-hoc manner using an external analytics system. The events include product exchanges, authorization clicks, subscription cancellations, and refund initiations via Cases.

Which solution should meet these business requirements?

- A . Case after insert Trigger that executes a callout

- B . Case Workflow Rule that sends an Outbound Message

- C . Case Trigger after insert, after update to publish the platform event

C

Explanation:

To meet a requirement for real-time event capture that supports an external analytics system, the architect must choose a pattern that is scalable, decoupled, and reliable. Platform Events are the modern standard for this use case.

By using a Case Trigger to publish a specific Platform Event, NTO creates a highly decoupled Publish/Subscribe architecture. The external analytics system (or a middleware layer feeding it) acts as a subscriber to the event channel. This is superior to standard callouts or outbound messaging for several reasons:

Durability: Platform Events offer a 72-hour retention window. If the analytics system is momentarily offline, it can use the Replay ID to retrieve missed events.

Atomic Transactions: Triggers can be configured to publish events only after the database transaction successfully commits ("Publish After Commit"), ensuring the analytics system doesn’t receive data for transactions that were eventually rolled back.

Event Volume: Platform Events are designed to handle much higher volumes of real-time messages than standard synchronous callouts.

Option A (Apex Callouts) is a point-to-point, synchronous pattern that would block Case processing and risk hitting "Concurrent Long-Running Request" limits.

Option B (Outbound Messaging) is reliable but is limited to a single object per message and uses a rigid SOAP format that is less flexible for ad-hoc external analytics than the modern JSON/CometD/gRPC structures used by the event bus. By implementing Option C, the architect ensures that every specific business milestone (refund, exchange, cancellation) is broadcast immediately, giving the customer service director the accurate, real-time visibility required for trending and metrics.
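The durability point above (a subscriber catching up by Replay ID after an outage) can be illustrated with a small in-memory model. This is only a sketch: `ToyEventBus`, its methods, and the payload keys are hypothetical names, it is not the Salesforce event bus or any Salesforce client API, and the 72-hour retention window is not modeled.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class Event:
    replay_id: int
    payload: Dict[str, Any]


@dataclass
class ToyEventBus:
    """In-memory stand-in for an event channel that retains published events."""
    _events: List[Event] = field(default_factory=list)

    def publish(self, payload: Dict[str, Any]) -> int:
        # Assign a monotonically increasing replay ID to each event.
        replay_id = len(self._events) + 1
        self._events.append(Event(replay_id, payload))
        return replay_id

    def replay_after(self, last_seen_replay_id: int) -> List[Event]:
        # Return every retained event newer than the subscriber's checkpoint.
        return [e for e in self._events if e.replay_id > last_seen_replay_id]


bus = ToyEventBus()
bus.publish({"case_id": "C-1", "event_type": "refund_initiated"})
checkpoint = 1  # the subscriber processed event 1, then went offline
bus.publish({"case_id": "C-2", "event_type": "product_exchange"})
bus.publish({"case_id": "C-3", "event_type": "subscription_cancelled"})

# Back online: ask only for what was missed since the checkpoint.
missed = bus.replay_after(checkpoint)
print([e.payload["event_type"] for e in missed])
```

The publisher never needs to know whether the analytics system was reachable; the subscriber owns its checkpoint and recovers on its own schedule.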

An integration architect has designed a mobile application for Salesforce users to get data while on the road using a custom user interface (UI). The application is secured with OAuth and is currently functioning well. There is a new requirement where the mobile application needs to obtain the GPS coordinates and store them on a custom geolocation field. The geolocation field is secured with field-level security, so users can view the value without changing it.

What should be done to meet the requirement?

- A . The mobile device makes a REST Apex inbound call.

- B . The mobile device makes a REST API inbound call.

- C . The mobile device receives a REST Apex callout call.

B

Explanation:

When a custom mobile application already secured with OAuth needs to update a record in Salesforce, the standard architectural recommendation is to use the REST API. The REST API is optimized for mobile environments because it uses lightweight JSON payloads and follows standard HTTP methods (such as PATCH for updates), which are highly compatible with mobile development frameworks.

In this specific scenario, the architect must address the Field-Level Security (FLS) constraint. Because the geolocation field is set to read-only for users, a standard UI-based update would typically fail. However, when using an inbound REST API call with a properly authorized integration user or via a "System Mode" context (if utilizing a custom Apex REST resource), the system can be configured to bypass UI-level restrictions while maintaining data integrity.

The mobile device captures the coordinates via the device’s native GPS capabilities and initiates an inbound call to the Salesforce REST endpoint.

Option A (Apex inbound call) is a subset of REST functionality but is only necessary if complex server-side logic is required that the standard REST API cannot handle.

Option C is technically incorrect as mobile devices do not typically "receive" callouts from Salesforce in this pattern; they initiate the requests. By leveraging the standard REST API, the architect ensures a scalable, secure, and standardized integration that adheres to Salesforce’s mobile-first integration principles.
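As a rough illustration of the inbound call described above, the sketch below builds (without sending) a PATCH request against the sObject record endpoint. The instance URL, API version, object name, record ID, field name `Location__c`, and token are all hypothetical placeholders; the only convention relied on is that a custom geolocation field is written through its `__Latitude__s` and `__Longitude__s` components.

```python
import json
import urllib.request

# All values below are hypothetical placeholders, not taken from the question.
INSTANCE_URL = "https://example.my.salesforce.com"
API_VERSION = "v60.0"
OBJECT_NAME = "Visit__c"
RECORD_ID = "a00000000000001AAA"
ACCESS_TOKEN = "<oauth-access-token>"


def build_location_update(lat: float, lon: float) -> urllib.request.Request:
    """Build (but do not send) a PATCH request that sets a geolocation field."""
    url = (
        f"{INSTANCE_URL}/services/data/{API_VERSION}"
        f"/sobjects/{OBJECT_NAME}/{RECORD_ID}"
    )
    # A custom geolocation field is written through its latitude/longitude parts.
    body = {"Location__Latitude__s": lat, "Location__Longitude__s": lon}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        method="PATCH",
        headers={
            "Authorization": f"Bearer {ACCESS_TOKEN}",
            "Content-Type": "application/json",
        },
    )


request = build_location_update(37.7749, -122.4194)
print(request.get_method(), request.full_url)
```

In a real app the request would be sent with the token obtained from the existing OAuth flow; the sketch stops at construction so it can run without network access.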

Which Web Services Description Language (WSDL) should an architect consider when creating an integration that might be used for more than one Salesforce org and different metadata?

- A . Enterprise WSDL

- B . Partner WSDL

- C . SOAP API WSDL

B

Explanation:

In the world of Salesforce SOAP APIs, the choice of WSDL depends on the nature of the application being built. When an architect needs to build an integration that is org-agnostic (meaning it can work across multiple different Salesforce organizations with varying custom objects and fields), the Partner WSDL is the correct choice.

The Partner WSDL is loosely-typed. It does not contain information about an org’s specific custom metadata; instead, it uses a generic sObject structure. This allows a single client application (like a third-party integration tool or a mobile app) to connect to any Salesforce org and dynamically discover its schema at runtime. Because it is not tied to a specific org’s metadata, it does not need to be regenerated every time a custom field is added to one of the target orgs.

In contrast, Option A (Enterprise WSDL) is strongly-typed. It is generated specifically for one org and contains hard-coded references to that org’s custom objects and fields. While this provides better compile-time safety for internal, single-org integrations, it is unsuitable for an application intended for "more than one Salesforce org" because the WSDL would be invalid for any org that doesn’t have the exact same metadata.

Option C is a general term; the actual choices provided by Salesforce for integration are the Partner or Enterprise WSDLs. Therefore, for maximum flexibility and reusability across a multi-org landscape, the Partner WSDL is the industry standard.
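The strongly-typed versus loosely-typed distinction can be sketched outside of SOAP entirely. The classes below are hypothetical illustrations, not generated WSDL stubs: one fixes its fields at build time, the other carries the object type and fields as plain data, which is why the same client code can handle orgs with different custom fields.

```python
from dataclasses import dataclass
from typing import Any, Dict


@dataclass
class EnterpriseStyleAccount:
    """Strongly typed: fields are fixed when the client is generated."""
    Id: str
    Name: str
    Loyalty_Tier__c: str  # hypothetical custom field that exists in one org only


@dataclass
class PartnerStyleSObject:
    """Loosely typed: object type and fields are plain data known at runtime."""
    type: str
    fields: Dict[str, Any]

    def get(self, field_name: str, default: Any = None) -> Any:
        return self.fields.get(field_name, default)


# Two orgs with different custom fields, handled by the same generic class.
org_a = PartnerStyleSObject(
    "Account", {"Id": "001A", "Name": "Acme", "Loyalty_Tier__c": "Gold"}
)
org_b = PartnerStyleSObject(
    "Account", {"Id": "001B", "Name": "Globex", "Region__c": "EMEA"}
)

for record in (org_a, org_b):
    print(record.get("Name"), record.get("Loyalty_Tier__c", "n/a"))
```

The trade-off is the one the explanation names: `EnterpriseStyleAccount` catches a misspelled field before the code runs, while `PartnerStyleSObject` defers that check to runtime in exchange for portability.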

A large business-to-consumer (B2C) customer is planning to implement Salesforce CRM to become a customer-centric enterprise.

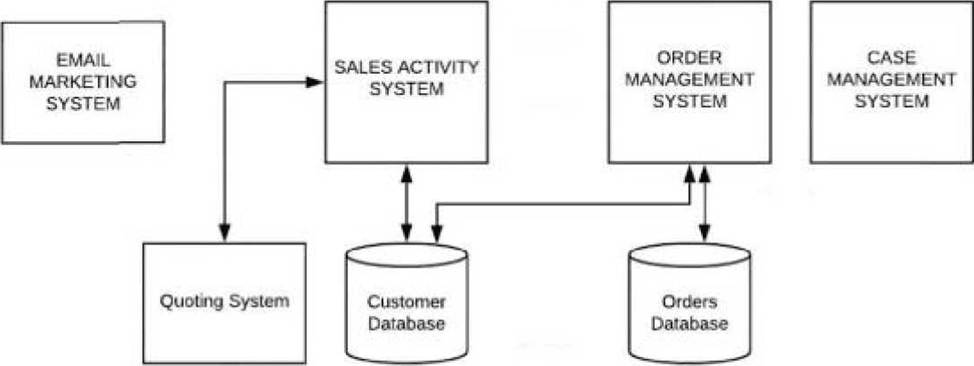

Below is the B2C customer’s current system landscape diagram.

The goals for implementing Salesforce include:

Develop a 360-degree view of the customer.

Leverage Salesforce capabilities for marketing, sales, and service processes.

Reuse Enterprise capabilities built for quoting and order management processes.

Which three systems from the current system landscape can be retired with the implementation of Salesforce?

- A . Email Marketing, Sales Activity, and Case Management

- B . Sales Activity, Order Management, and Case Management

- C . Order Management, Case Management, and Email Marketing

A

Explanation:

In the framework of a Salesforce Platform Integration Architect’s landscape evaluation, the primary goal is to determine the "system of record" for each business function and identify redundancies between legacy systems and the proposed Salesforce architecture. This process is driven by the alignment of Salesforce’s native "Customer 360" capabilities with the specific goals defined by the enterprise stakeholders.

According to Goal 2, the customer intends to leverage Salesforce specifically for marketing, sales, and service processes. Within the standard Salesforce ecosystem, these domains are addressed by the three core cloud products:

Marketing Cloud provides the capabilities found in the legacy Email Marketing System.

Sales Cloud replaces the functions of the Sales Activity System.

Service Cloud is the native replacement for the Case Management System.

By migrating these three domains to a single platform, the organization directly fulfills Goal 1: developing a 360-degree view of the customer. Consolidating these interactions onto the Salesforce platform allows for a unified data model where customer behaviors in marketing, sales, and support are visible in one place, eliminating the silos inherent in the previous landscape.

However, a critical constraint is presented in Goal 3, which explicitly mandates the reuse of existing enterprise capabilities for quoting and order management. In an integration architecture, this signals that the Quoting System and Order Management System (OMS) are designated as external systems of record that must remain active. These systems often contain complex logic, tax calculations, or supply chain integrations (such as with an SAP Business Suite) that the business is not currently ready to migrate.

Therefore, since the Quoting and Order Management systems must be retained, they are excluded from the retirement list. The remaining three systems (Email Marketing, Sales Activity, and Case Management) overlap with Salesforce’s native strengths and are not protected by the "reuse" requirement. Retiring them streamlines the technology stack and allows the architect to focus on building robust integration patterns (such as REST or SOAP callouts) to connect Salesforce to the retained Quoting and Order Management systems.

Northern Trail Outfitters uses Salesforce to track leads and opportunities, and to capture order details. However, Salesforce isn’t the system that holds or processes orders. After the order details are captured in Salesforce, an order must be created in the Remote system, which manages the order’s lifecycle. The integration architect for the project is recommending a remote system that will subscribe to the platform event defined in Salesforce.

Which integration pattern should be used for this business use case?

- A . Request and Reply

- B . Fire and Forget

- C . Remote Call-In

B

Explanation:

In this scenario, Salesforce acts as the trigger for a business process that completes in an external system. The architect’s recommendation for the remote system to subscribe to a platform event is the classic implementation of the Remote Process Invocation: Fire and Forget pattern.

In a Fire and Forget pattern, Salesforce initiates a process by publishing a message (the event) to the event bus and then immediately continues its own processing without waiting for a functional response from the target system. The "Fire" part occurs when the order details are captured and the event is published; the "Forget" part refers to Salesforce handing off the responsibility of order creation to the remote system. This pattern is ideal for improving user experience and system performance, as it avoids blocking the user interface while waiting for potentially slow back-office systems to respond.

Option A (Request and Reply) is incorrect because that would require Salesforce to make a synchronous call and wait for the remote system to confirm the order was created before allowing the user to proceed.

Option C (Remote Call-In) is the inverse of what is described; it would involve the remote system actively reaching into Salesforce to "pull" the data, whereas here Salesforce is "pushing" the notification via an event stream. By using Platform Events to facilitate this hand-off, Northern Trail Outfitters ensures a decoupled, scalable architecture where the remote system can process orders at its own pace while Salesforce remains responsive to sales users.
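The hand-off described above can be modeled with a queue and a background worker. This is a toy sketch with hypothetical names (`publish_order_event`, `remote_order_system`, the payload keys); it does not use Platform Events or any Salesforce API, and only shows that the publisher gets control back before any order exists.

```python
import queue
import threading

order_events = queue.Queue()
created_orders = []


def publish_order_event(order: dict) -> str:
    """Publisher side: enqueue the event and return without waiting for a result."""
    order_events.put(order)
    return "published"


def remote_order_system() -> None:
    """Subscriber side: create orders at its own pace until a None sentinel arrives."""
    while True:
        event = order_events.get()
        if event is None:
            break
        created_orders.append(f"ORDER-{event['opportunity_id']}")


worker = threading.Thread(target=remote_order_system)
worker.start()

status = publish_order_event({"opportunity_id": "006X1", "amount": 1200})
print(status)  # the publisher only learns that the event was accepted

order_events.put(None)  # stop the demo subscriber
worker.join()
print(created_orders)
```

A Request and Reply version would instead have `publish_order_event` block until the order number came back, which is exactly the coupling this pattern avoids.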

An enterprise customer is implementing Salesforce for Case Management. Based on the landscape (Email, Order Management, Data Warehouse, Case Management), what should the integration architect evaluate?

- A . Integrate Salesforce with Order Management System, Data Warehouse, and Case Management System.

- B . Integrate Salesforce with Email Management System, Order Management System, and Case Management System.

- C . Integrate Salesforce with Data Warehouse, Order Management, and Email Management System.

C

Explanation:

The evaluation of an integration landscape is a process of rationalization. The goal is to identify which legacy systems Salesforce will replace (System Retirement) and which systems it must coexist with (Integration).

In this scenario, Salesforce is being implemented for Case Management. Salesforce Service Cloud is the industry leader for this specific function. Therefore, the legacy Case Management System should be retired. Any architecture that suggests "integrating" Salesforce with the legacy Case Management system (Options A and B) is creating a redundant and complex "dual-master" scenario that increases technical debt.

To provide a successful support experience, Salesforce needs to be the central "Engagement Layer," which requires integration with the remaining ecosystem:

Email Management System: To support "Email-to-Case" and ensure all customer communications are captured within the Salesforce Case record.

Order Management System (OMS): Support agents often need to verify purchase history or shipping status to resolve a case. A "Data Virtualization" or "Request-Reply" integration with the OMS is vital.

Data Warehouse: For long-term historical reporting and cross-functional analytics, Salesforce must push case data to the enterprise Data Warehouse.

By evaluating the integration with the Data Warehouse, Order Management, and Email Management systems, the architect ensures that Salesforce is enriched with the context it needs to resolve cases while simultaneously retiring the redundant legacy support system.

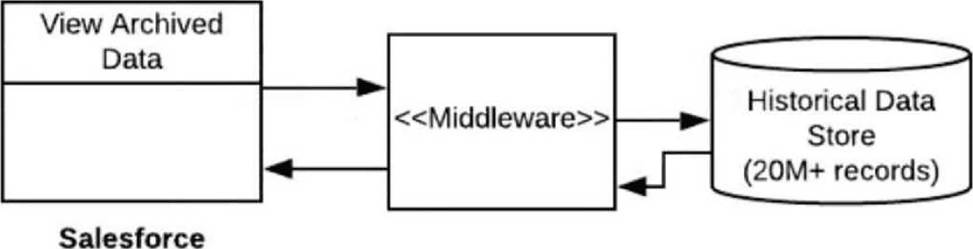

Given the diagram above, a Salesforce org, middleware, and Historical Data store exist with connectivity between them. Historical records are archived from Salesforce, moved to a Historical Data store (which houses 20 million records and growing), and fine-tuned to be performant with search queries. When reviewing occasional special cases, call center agents that use Salesforce have requested access to view the historical case items that relate to submitted cases.

Which mechanism and patterns are recommended to maximize declarative configuration?

- A . Use an ESB tool with a Data Virtualization pattern, expose the OData endpoint, and then use Salesforce Connect to consume and display the External object alongside the Case object.

- B . Use an ESB tool with a Request and Reply pattern, and then make a real-time Apex callout to the ESB endpoint to fetch and display historical data in a custom Lightning component related to the Case object.

- C . Use an ESB tool with a Fire and Forget pattern, and then publish a platform event for the requested historical data.

A

Explanation:

When designing a solution to view large volumes of archived data (over 20 million records) without physically storing them back in Salesforce, a Data Virtualization pattern is the architecturally preferred approach. This pattern allows users to view and interact with external data in real-time without the overhead of data replication, which would otherwise consume significant storage and impact platform performance.

To maximize declarative configuration, the Salesforce Platform Integration Architect should recommend Salesforce Connect. Salesforce Connect allows for the creation of External Objects, which behave much like standard objects but point to data residing outside of Salesforce. This is achieved by having the middleware (ESB) expose the Historical Data store via an OData (Open Data Protocol) endpoint. Once configured, call center agents can view historical case items directly on the Case record page using standard related lists or lookups, all configured through the point-and-click interface rather than custom code.

The provided landscape diagram illustrates a clear path from Salesforce through middleware to the Historical Data Store.

Option A leverages this by using the ESB to bridge the protocol gap. Because the data store is already "fine-tuned to be performant with search queries," Salesforce Connect can efficiently query only the specific historical records needed for the current case view.

In contrast, Option B requires a "Request and Reply" pattern using Apex callouts and custom Lightning components. While functional, this is a code-heavy approach that increases technical debt and does not meet the "maximize declarative configuration" requirement.

Option C, using "Fire and Forget" with Platform Events, is unsuitable for a synchronous "view data" request; Platform Events are asynchronous and would require a complex, custom-built UI to "wait" for and display the response. Therefore, the combination of OData and Salesforce Connect provides the most seamless, scalable, and low-maintenance solution for call center agents.
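To make "query only the specific historical records needed" concrete, the sketch below builds the kind of filtered, paged OData request an OData consumer might send to the ESB. The service root, entity set `HistoricalCaseItems`, and field `CaseNumber` are hypothetical; with Salesforce Connect such requests are generated by the platform rather than hand-written, so this is only an illustration of the shape.

```python
from urllib.parse import quote, urlencode

# Hypothetical ESB endpoint, entity set, and field name, for illustration only.
ODATA_SERVICE_ROOT = "https://esb.example.com/odata/v4"
ENTITY_SET = "HistoricalCaseItems"


def build_history_query(case_number: str, page_size: int = 25) -> str:
    """Build an OData URL that fetches only the items related to one case."""
    safe_value = case_number.replace("'", "''")  # OData escapes ' by doubling it
    params = {
        "$filter": f"CaseNumber eq '{safe_value}'",
        "$top": str(page_size),
    }
    # Keep "$" and "'" readable; spaces become %20.
    query = urlencode(params, quote_via=quote, safe="$'")
    return f"{ODATA_SERVICE_ROOT}/{ENTITY_SET}?{query}"


history_url = build_history_query("00001234")
print(history_url)
```

Because the filter and page size travel with the request, the 20 million archived rows stay in the data store and only the handful tied to the open case cross the wire.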

The URL for a business-critical external service providing exchange rates changed without notice.

Which solutions should be implemented to minimize potential downtime for users in this situation?

- A . Remote Site Settings and Named Credentials

- B . Enterprise Service Bus (ESB) and Remote Site Settings

- C . Named Credentials and Content Security Policies

A

Explanation:

To minimize downtime when an external endpoint changes, an Integration Architect must ensure that the URL is not "hardcoded" within Apex code or configuration. The standard Salesforce mechanism for abstracting and managing external endpoints is Named Credentials.

Named Credentials specify the URL of a callout endpoint and its required authentication parameters in one definition. If the URL changes, an administrator simply updates the "URL" field in the Named Credential setup. This change takes effect immediately across all Apex callouts, Flows, and External Services that reference it, without requiring a code deployment or a sandbox-to-production migration.

Along with Named Credentials, Remote Site Settings (or the more modern External Website Configurations) are required. Salesforce blocks all outbound calls to URLs that are not explicitly whitelisted.

By having both in place, the remediation process is:

Update the URL in the Named Credential.

Update (or add) the new URL in the Remote Site Settings.

This approach follows the "Separation of Concerns" principle.

Option B (ESB) could technically handle this, but it adds an extra layer of failure and complexity for a simple URL change.

Option C (Content Security Policies) is used to control which resources (like scripts or images) a browser is allowed to load in the UI; it does not govern server-side Apex callouts. Therefore, the combination of Named Credentials and Remote Site whitelisting is the most efficient and standard way to provide architectural agility and minimize downtime.
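The benefit of referencing an endpoint by name rather than by URL can be shown with a small model. The registry dictionary and `resolve` function are hypothetical stand-ins for configuration managed in Setup; only the `callout:<Name>/<path>` reference style mirrors how Apex code points at a Named Credential.

```python
# Hypothetical registry standing in for endpoint definitions managed in Setup.
named_credentials = {"Exchange_Rates": "https://rates.example.com/api"}


def resolve(callout: str) -> str:
    """Expand 'callout:<Name>/<path>' into a full URL using the registry."""
    prefix = "callout:"
    if not callout.startswith(prefix):
        raise ValueError("expected a 'callout:' reference")
    name, _, path = callout[len(prefix):].partition("/")
    base = named_credentials[name]
    return f"{base}/{path}" if path else base


RATES_CALL = "callout:Exchange_Rates/latest?base=USD"  # what the code references
before = resolve(RATES_CALL)

# The provider moves the service; only the registry entry is edited.
named_credentials["Exchange_Rates"] = "https://fx.example.net/v2"
after = resolve(RATES_CALL)

print(before)
print(after)
```

`RATES_CALL` never changes, which is the point: the fix is a configuration edit by an administrator, not a code change and deployment.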

Universal Containers (UC) is planning to implement Salesforce as its CRM system. Currently, UC has a marketing system for leads, Microsoft Outlook for contacts and emails, and an ERP for billing and payments. The proposed CRM should provide a single customer view.

What should an integration architect consider to support this strategy?

- A . Evaluate current and future data and system usage, and then identify potential integration requirements to Salesforce

- B . Explore out-of-the-box Salesforce connectors for integration with ERP, Marketing, and Microsoft Outlook systems.

- C . Propose a middleware system that can support interface between systems with Salesforce.

A

Explanation:

The foundational step in a CRM transformation project is to understand the business context and data landscape before selecting technical tools or middleware.

Before proposing a technical solution, an architect must evaluate current and future data and system usage to identify specific integration requirements. This includes documenting business processes, mapping data flows between the Marketing system, Outlook, and the ERP, and identifying which system will serve as the "System of Record" for each data entity. For example, the architect needs to determine if lead data from the Marketing system should flow unidirectionally to Salesforce or if activities from Outlook need bidirectional synchronization to maintain a "360-degree view".

While out-of-the-box connectors (Option B) and middleware (Option C) are valuable, they are implementation tactics that follow the discovery phase. Jumping directly to connectors may overlook unique business processes that require custom integration logic, while proposing middleware prematurely can lead to unnecessary costs if native tools or point-to-point connections are sufficient. A thorough evaluation ensures that the integration architecture is scalable, avoids data corruption, and aligns with UC’s strategic goal of breaking down information silos to provide sales and support staff with meaningful, unified customer insights.

Universal Containers (UC) is a global financial company that sells financial products and services. There is a daily scheduled Batch Apex job that generates invoices from a given set of orders. UC requested building a resilient integration for this Batch Apex job in case the invoice generation fails.

What should an integration architect recommend to fulfill the requirement?

- A . Build Batch Retry and Error Handling using BatchApexErrorEvent.

- B . Build Batch Retry and Error Handling in the Batch Apex job itself.

- C . Build Batch Retry and Error Handling in the middleware.

A

Explanation:

Resiliency in long-running Batch Apex processes is best achieved by utilizing modern, event-driven error handling frameworks provided by the Salesforce platform. The BatchApexErrorEvent is the architecturally recommended component for monitoring and responding to failures in Batch Apex jobs.

When a Batch Apex class implements the `Database.RaisesPlatformEvents` interface, the platform automatically publishes a BatchApexErrorEvent whenever an unhandled exception occurs during the execution of a batch. This event contains critical metadata, including the exception message, the stack trace, and the scope (the specific IDs of the records that were being processed when the failure occurred).

An Integration Architect should recommend building a Platform Event Trigger that subscribes to these error events.

This trigger can perform sophisticated error handling logic, such as:

* Logging the failure details into a custom "Integration Error" object for auditing.

* Initiating a retry logic by re-enqueuing only the failed records into a new batch job.

* Notifying administrators or external systems via an outbound call or email.

This approach is superior to Option B (internal handling) because unhandled exceptions often cause the entire batch transaction to roll back, potentially losing any error logging performed within the same scope. It is also more efficient than Option C (middleware), as it keeps the error recovery logic "close to the data," reducing the need for external systems to constantly poll for job status or parse complex logs. By using BatchApexErrorEvent, UC ensures a resilient, self-healing process that maintains the integrity of the invoice generation cycle.
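The log-then-retry logic listed above can be sketched as a plain handler function. This is a Python model rather than an Apex platform event trigger: the field names `AsyncApexJobId`, `JobScope`, and `Message` mirror fields on BatchApexErrorEvent, while `MAX_RETRIES`, the in-memory lists, and the sample IDs are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List

MAX_RETRIES = 3  # hypothetical retry budget per record


@dataclass
class BatchErrorEvent:
    """Toy model of an error event; field names mirror BatchApexErrorEvent."""
    AsyncApexJobId: str
    JobScope: str  # comma-separated IDs of the records in the failed scope
    Message: str


error_log: List[Dict[str, str]] = []
retry_queue: List[List[str]] = []
attempts: Dict[str, int] = {}


def handle_error_event(event: BatchErrorEvent) -> None:
    """Log the failure, then re-enqueue only records still under the retry budget."""
    record_ids = [r for r in event.JobScope.split(",") if r]
    error_log.append({"job": event.AsyncApexJobId, "message": event.Message})
    retryable = []
    for record_id in record_ids:
        attempts[record_id] = attempts.get(record_id, 0) + 1
        if attempts[record_id] <= MAX_RETRIES:
            retryable.append(record_id)
    if retryable:
        retry_queue.append(retryable)


handle_error_event(BatchErrorEvent("707A", "801A,801B", "Invoice total was null"))
print(retry_queue)
```

Capping retries per record keeps a permanently bad order from cycling forever; once the budget is spent, the failure remains in `error_log` for an administrator to review.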