Practice Free Plat-Arch-204 Exam Online Questions

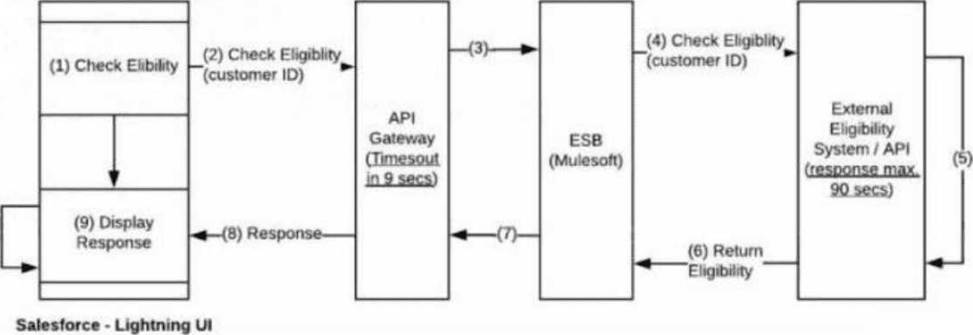

An enterprise architect has requested the Salesforce integration architect to review the following (see diagram and description) and provide recommendations after carefully considering all constraints of the enterprise systems and Salesforce Platform limits.

About 3,000 phone sales agents use a Salesforce Lightning user interface (UI) concurrently to check eligibility of a customer for a qualifying offer.

There are multiple eligibility systems that provide this service and are hosted externally.

However, their current responses can take up to 90 seconds to process and return (there are discussions about reducing response times in the future, but no commitments have been made).

These eligibility systems can be accessed through APIs orchestrated via ESB (MuleSoft).

All requests from Salesforce will have to traverse through the customer’s API Gateway layer, and the API Gateway imposes a constraint of timing out requests after 9 seconds.

Which recommendation should the integration architect make?

- A . Recommend synchronous Apex callouts from Lightning UI to External Systems via Mule and implement polling on an API Gateway timeout.

- B . Use Continuation callouts to make the eligibility check request from Salesforce Lightning UI at page load.

- C . Create a platform event in Salesforce via Remote Call-In and use the empAPI in the Lightning UI to serve 3,000 concurrent users when responses are received by Mule.

C

Explanation:

The primary architectural challenge in this scenario is the massive discrepancy between the backend response time (up to 90 seconds) and the API Gateway timeout constraint (9 seconds). In any synchronous integration pattern, the connection must remain open across the entire path; if the API Gateway closes the connection at 9 seconds, a standard Salesforce "Request-Reply" callout will fail long before the 90-second eligibility check is complete.

Option A is non-viable because synchronous polling at a high scale (3,000 concurrent users) would likely hit Salesforce concurrent request limits and place an immense, unnecessary load on the API Gateway.

Option B, using Continuation, is designed to handle long-running callouts (up to 120 seconds) without blocking Salesforce threads, but it still requires the external connection path to remain open. It does not bypass the 9-second timeout imposed by the customer’s API Gateway.

The optimal recommendation is Option C, which implements an Asynchronous Request-Reply pattern using Platform Events and the empAPI.

Request Phase: The Salesforce UI initiates the request. To bypass the 9-second gateway timeout, the ESB (MuleSoft) should be configured to receive the request and immediately return an acknowledgment (e.g., HTTP 202 Accepted). This allows the initial Salesforce callout to complete successfully within the 9-second window.

Processing Phase: MuleSoft then proceeds with the long-running (up to 90 seconds) call to the external eligibility systems.

Callback Phase (Remote Call-In): Once the eligibility result is received, MuleSoft calls back into Salesforce via the REST API to publish a Platform Event containing the result.

UI Update (empAPI): The 3,000 sales agents’ browsers, having subscribed to the event channel using the empAPI (Lightning’s built-in library for streaming events), receive the notification in real time. Because every subscriber on the channel receives every event, each event should carry a correlation identifier for the original request so that each browser displays only its own result. The UI then updates to display the "Display Response" step.

This event-driven architecture effectively "insulates" Salesforce and the API Gateway from the backend’s high latency, ensures scalability for 3,000 concurrent users, and provides a seamless, real-time user experience without hitting governor limits or timeout constraints.
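The timing argument can be made concrete with a small Python simulation. It uses simulated latencies rather than real waits and assumes a 9-second gateway, a 90-second backend, and a sub-second acknowledgment. `EventBus` stands in for the platform event channel plus its empApi subscribers. `Middleware`, `accept`, and `complete_pending` are hypothetical names, not MuleSoft or Salesforce APIs.

```python
import uuid
from collections import deque
from typing import Callable, Deque, Dict, List, Tuple

GATEWAY_TIMEOUT_S = 9   # API Gateway closes connections after 9 seconds
BACKEND_LATENCY_S = 90  # worst-case eligibility-system response time
ACK_LATENCY_S = 0.5     # assumption: time for the middleware to return 202


class GatewayTimeout(Exception):
    """Raised when a simulated synchronous call outlives the gateway window."""


def call_through_gateway(latency_s: float) -> None:
    # A synchronous call only succeeds if the whole round trip fits in the window.
    if latency_s > GATEWAY_TIMEOUT_S:
        raise GatewayTimeout(f"{latency_s}s exceeds the {GATEWAY_TIMEOUT_S}s gateway timeout")


class EventBus:
    """Stand-in for a platform event channel with empApi-style subscribers."""

    def __init__(self) -> None:
        self._handlers: List[Callable[[dict], None]] = []

    def subscribe(self, handler: Callable[[dict], None]) -> None:
        self._handlers.append(handler)

    def publish(self, event: dict) -> None:
        # Every subscriber receives every event on the channel.
        for handler in self._handlers:
            handler(event)


class Middleware:
    """Hypothetical ESB flow: acknowledge immediately, call the backend later."""

    def __init__(self, bus: EventBus) -> None:
        self._bus = bus
        self._pending: Deque[Tuple[str, str]] = deque()

    def accept(self, customer_id: str) -> Tuple[int, str]:
        # Only this quick acknowledgment travels back through the gateway.
        call_through_gateway(ACK_LATENCY_S)
        correlation_id = str(uuid.uuid4())
        self._pending.append((correlation_id, customer_id))
        return 202, correlation_id

    def complete_pending(self) -> None:
        # The slow eligibility calls run here, outside any gateway connection,
        # and each result is published back as an event (the Remote Call-In step).
        while self._pending:
            correlation_id, customer_id = self._pending.popleft()
            eligible = customer_id.endswith("7")  # placeholder eligibility rule
            self._bus.publish({"correlationId": correlation_id, "eligible": eligible})


# Usage: the agent's UI subscribes first, then fires the request.
bus = EventBus()
mule = Middleware(bus)
received: Dict[str, bool] = {}


def on_event(event: dict) -> None:
    # A real UI would ignore correlation IDs it did not issue; here we record all.
    received[event["correlationId"]] = event["eligible"]


bus.subscribe(on_event)
status, correlation_id = mule.accept("CUST-0007")
mule.complete_pending()
```

Calling `call_through_gateway(BACKEND_LATENCY_S)` directly raises `GatewayTimeout`. This is the failure Options A and B cannot avoid. The 202 path completes and the result arrives later as an event.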

A company needs to integrate a legacy on-premise application that can only support SOAP API. The integration architect determines that the Fire and Forget integration pattern is most appropriate for sending data from Salesforce to the external application and getting a response back in a strongly-typed format.

Which integration capabilities should be used?

- A . Platform Events for Salesforce to Legacy System direction and SOAP API using Enterprise WSDL for the communication back from legacy system to Salesforce

- B . Outbound Messaging for Salesforce to Legacy System direction and SOAP API using Partner Web Services Description Language (WSDL) for the communication back from legacy system to Salesforce

- C . Outbound Messaging for Salesforce to Legacy System direction and SOAP API using Enterprise WSDL for the communication back from legacy system to Salesforce

C

Explanation:

For an outbound, declarative, Fire-and-Forget integration to a legacy SOAP-based system, Salesforce Outbound Messaging is the native tool of choice. Outbound Messaging sends an XML message to a designated endpoint when specific criteria are met. It is highly reliable as Salesforce will automatically retry the delivery for up to 24 hours if the target system is unavailable.
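An Outbound Messaging listener must acknowledge each delivery. Otherwise Salesforce keeps retrying. The following minimal Python sketch uses only the standard library to show a listener that extracts notification IDs and builds the acknowledgment. The sample payload is abbreviated and its IDs are made up. The `http://soap.sforce.com/2005/09/outbound` namespace and the `notificationsResponse`/`Ack` elements reflect the Outbound Messaging WSDL as commonly documented, but verify them against the WSDL generated in your own org.

```python
import xml.etree.ElementTree as ET
from typing import List

OM_NS = "http://soap.sforce.com/2005/09/outbound"
SOAP_NS = "http://schemas.xmlsoap.org/soap/envelope/"

# Abbreviated sample; real messages also carry SessionId, URLs, typed sObject fields, etc.
SAMPLE_MESSAGE = f"""<soapenv:Envelope xmlns:soapenv="{SOAP_NS}">
  <soapenv:Body>
    <notifications xmlns="{OM_NS}">
      <OrganizationId>00D000000000001</OrganizationId>
      <Notification>
        <Id>04l000000000001</Id>
        <sObject xmlns:sf="urn:sobject.enterprise.soap.sforce.com">
          <sf:Id>001000000000001</sf:Id>
        </sObject>
      </Notification>
    </notifications>
  </soapenv:Body>
</soapenv:Envelope>"""


def notification_ids(soap_xml: str) -> List[str]:
    """Return the Id of every <Notification> in an outbound message."""
    root = ET.fromstring(soap_xml)
    return [
        notification.findtext(f"{{{OM_NS}}}Id") or ""
        for notification in root.iter(f"{{{OM_NS}}}Notification")
    ]


def build_ack(ok: bool = True) -> str:
    # Salesforce treats a missing or false Ack as a failed delivery and retries it.
    ack = "true" if ok else "false"
    return (
        f'<soapenv:Envelope xmlns:soapenv="{SOAP_NS}"><soapenv:Body>'
        f'<notificationsResponse xmlns="{OM_NS}"><Ack>{ack}</Ack></notificationsResponse>'
        "</soapenv:Body></soapenv:Envelope>"
    )
```

A listener should return the `true` acknowledgment only after it has durably stored the message. Otherwise a crash could lose data that Salesforce believes was delivered.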

For the communication back from the legacy system to Salesforce, a strongly-typed SOAP API approach is required. The Enterprise WSDL is the correct recommendation here because it is a strongly-typed WSDL that is specific to the organization’s unique data model (including custom objects and fields). Using the Enterprise WSDL allows the legacy system to communicate with Salesforce using specific data types, providing compile-time safety and reducing errors during the mapping process.

Option A is less efficient because Platform Events would likely require middleware to translate the event into the legacy system’s SOAP format.

Option B suggests the Partner WSDL, which is loosely-typed and designed for developers building tools that must work across many different Salesforce orgs. Since this is an internal integration for a specific company, the Enterprise WSDL provides a much more streamlined development experience with better data integrity. By combining Outbound Messaging (for fire-and-forget delivery) and the Enterprise WSDL (for the strongly-typed callback), the architect fulfills the technical requirements while minimizing custom code.
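The difference between the two WSDLs can be illustrated with a Python analogy. This is not generated WSDL client code. The Enterprise WSDL yields a class per object with named fields, so a misspelled field fails when the message is built. The Partner WSDL's generic sObject accepts any field name and leaves the error for the server to report. `EnterpriseAccount`, `PartnerSObject`, and the custom field `Region__c` are hypothetical stand-ins.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class EnterpriseAccount:
    """Analogy for an Enterprise WSDL type: fields are fixed by the org's schema."""
    Id: str
    Name: Optional[str] = None
    Region__c: Optional[str] = None  # hypothetical custom field, exposed as a real attribute


@dataclass
class PartnerSObject:
    """Analogy for the Partner WSDL: a type name plus an untyped field map."""
    type: str
    fields: Dict[str, object] = field(default_factory=dict)


def build_enterprise_update(**values: object) -> EnterpriseAccount:
    # Unknown keyword arguments raise TypeError before anything is sent.
    return EnterpriseAccount(**values)  # type: ignore[arg-type]


def build_partner_update(**values: object) -> PartnerSObject:
    # Any key is accepted here; a typo only surfaces when Salesforce rejects the call.
    return PartnerSObject(type="Account", fields=dict(values))
```

In a statically typed client, such as a Java or .NET stub generated from the Enterprise WSDL, the same typo would fail at compile time. This is the "compile-time safety" the explanation refers to.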

Northern Trail Outfitters has recently implemented middleware for orchestration of services across platforms. The Enterprise Resource Planning (ERP) system being used requires transactions be captured near real-time at a REST endpoint initiated in Salesforce when creating an Order object. Additionally, the Salesforce team has limited development resources and requires a low-code solution.

Which option should fulfill the use case requirements?

- A . Use Remote Process Invocation Fire and Forget pattern on insert on the Order object using Flow Builder.

- B . Implement a Workflow Rule with Outbound Messaging to send SOAP messages to the designated endpoint.

- C . Implement Change Data Capture on the Order object and leverage the replay ID in the middleware solution.

A

Explanation:

To satisfy a requirement for near real-time updates to an ERP system while adhering to a low-code constraint, the architect must leverage Salesforce’s modern declarative automation tools. The goal is to initiate an outbound signal that the existing middleware can then orchestrate and deliver to the ERP’s REST endpoint.

The Remote Process Invocation Fire and Forget pattern is well suited to this scenario. In this pattern, Salesforce sends a message to an external system and does not wait for a functional response. This is ideal for "capturing" transactions in an ERP, where the primary goal is record synchronization rather than returning a real-time calculation. Using Flow Builder, the team can implement a record-triggered flow on the Order object. The flow executes actions that send data to the middleware through External Services or the Flow HTTP Callout action, and neither approach requires Apex code. Record-triggered flows cannot make callouts in their immediate path. The callout therefore belongs on an asynchronous path, which also keeps the Order save from waiting on the middleware.
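On the receiving side, the middleware only needs to acknowledge receipt. Delivery to the ERP's REST endpoint happens afterwards. The following minimal Python sketch assumes a hypothetical JSON body with `orderId`, `accountId`, and `totalAmount` posted by the flow. Those field names and the in-memory `ERP_QUEUE` are illustrative, not part of any Salesforce or MuleSoft API.

```python
import json
from collections import deque
from typing import Deque, Dict, Tuple

ERP_QUEUE: Deque[Dict[str, object]] = deque()  # hypothetical stand-in for a durable queue
REQUIRED_FIELDS = ("orderId", "accountId", "totalAmount")


def receive_order(body: str) -> Tuple[int, str]:
    """Fire and forget: validate shape, enqueue, and acknowledge immediately."""
    try:
        order = json.loads(body)
    except json.JSONDecodeError:
        return 400, "malformed JSON"
    if not isinstance(order, dict):
        return 400, "expected a JSON object"
    missing = [name for name in REQUIRED_FIELDS if name not in order]
    if missing:
        return 400, "missing: " + ", ".join(missing)
    ERP_QUEUE.append(order)
    # 202 tells the flow the message was accepted, not that the ERP processed it.
    return 202, "accepted"
```

A separate middleware process would then drain `ERP_QUEUE` and call the ERP. Retries and error handling stay out of Salesforce entirely.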

Option B, Outbound Messaging, is a legacy declarative tool that is highly reliable but has a significant limitation: it natively sends messages in SOAP format. Since the requirement explicitly calls for a REST endpoint, using Outbound Messaging would require additional transformation logic in the middleware, making it a less direct architectural fit than a modern Flow-based REST call.

Option C, Change Data Capture (CDC), is a highly scalable, event-driven mechanism, but it is typically considered more complex to implement and maintain. It requires the middleware to manage "Replay IDs" and subscribe to a streaming channel, which often requires more specialized development effort on the middleware side compared to a simple HTTP POST from a Flow. For a team with limited development resources, Flow Builder provides the most accessible and maintainable path to achieving near real-time integration.
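The following minimal Python sketch shows the replay ID bookkeeping that Option C pushes onto the middleware. It uses an in-memory event log in place of the real event bus. The sentinel values follow the CometD-based Streaming API convention, where -1 requests only new events and -2 requests all retained events. `ReplayCheckpoint` and `process` are hypothetical helpers.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

LATEST = -1    # CometD convention: only events published after subscribing
EARLIEST = -2  # CometD convention: all events still in the retention window

Event = Tuple[int, str]  # (replayId, payload)


@dataclass
class ReplayCheckpoint:
    """Hypothetical store for the last replay ID the middleware fully processed."""
    last_processed: Optional[int] = None

    def subscribe_from(self) -> int:
        # With no checkpoint yet, fall back to replaying everything retained.
        return EARLIEST if self.last_processed is None else self.last_processed


def read_events(log: List[Event], replay_from: int) -> List[Event]:
    # Simulates the bus: returns events published after the given replay ID.
    if replay_from == EARLIEST:
        return list(log)
    if replay_from == LATEST:
        return []
    return [event for event in log if event[0] > replay_from]


def process(log: List[Event], checkpoint: ReplayCheckpoint,
            limit: Optional[int] = None) -> List[str]:
    handled: List[str] = []
    for replay_id, payload in read_events(log, checkpoint.subscribe_from())[:limit]:
        handled.append(payload)              # e.g. forward to the ERP
        checkpoint.last_processed = replay_id  # advance only after success
    return handled
```

The middleware team has to persist this checkpoint, resume from it after outages, and handle the case where it has aged out of the retention window. This is the extra effort the paragraph above weighs against a simple Flow callout.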

Northern Trail Outfitters (NTO) uses Salesforce to track leads, opportunities, and order details that convert leads to customers. However, orders are managed by an external (remote) system. Sales reps want to view and update real-time order information in Salesforce. NTO wants the data to only persist in the external system.

Which type of integration should an architect recommend to meet this business requirement?

- A . Data Synchronization

- B . Data Virtualization

- C . Process Orchestration

B

Explanation:

The requirement to view and update data in real-time while ensuring the data only persists in the external system is the definition of a Data Virtualization pattern. In this architectural model, Salesforce does not store a local copy of the data (which would be Data Synchronization), but instead acts as a window into the external system of record.

An Integration Architect implements Data Virtualization primarily through Salesforce Connect. This tool allows the external system’s order table to be represented as an External Object in Salesforce. Because the data is retrieved on-demand via a web service call (typically using the OData protocol), it is always "real-time." Furthermore, since Salesforce Connect supports writeable external objects, sales reps can update the order information directly from the Salesforce UI, and those changes are sent back to the external system immediately without being saved to the Salesforce database.
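The following Python sketch gives a sense of what "retrieved on demand" means. It builds the kind of OData GET request that a query on an external object could translate into. The service URL, the `Orders` entity set, and the field names are hypothetical. `$select`, `$filter`, and `$top` are standard OData system query options, but the exact request Salesforce Connect emits depends on the adapter and the SOQL being run.

```python
from typing import Iterable
from urllib.parse import quote, urlencode

SERVICE_ROOT = "https://erp.example.com/odata/v4"  # hypothetical OData endpoint


def build_order_query(account_number: str, fields: Iterable[str], top: int = 25) -> str:
    """Translate 'show this customer's orders' into an OData GET URL."""
    escaped = account_number.replace("'", "''")  # OData escapes quotes by doubling them
    params = {
        "$select": ",".join(fields),
        "$filter": f"AccountNumber eq '{escaped}'",
        "$top": str(top),
    }
    # quote_via=quote encodes spaces as %20; '$' and ',' are left readable.
    return f"{SERVICE_ROOT}/Orders?{urlencode(params, quote_via=quote, safe='$,')}"
```

Each time a rep opens the related list, a request like this goes to the ERP. No order rows are written to Salesforce storage.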

This approach is superior to Data Synchronization (Option A) in this specific use case because it avoids Salesforce data storage costs and the complexity of keeping two databases in sync. It is also distinct from Process Orchestration (Option C), which focuses on the sequencing of tasks across multiple systems rather than the real-time presentation of external data. By utilizing Data Virtualization, NTO achieves a seamless user experience where external orders look and feel like native Salesforce records while strictly adhering to the "no persistence" constraint.

An integration architect has built a solution using REST API, updating Account, Contact, and other related information. The data volumes have increased, resulting in higher API calls consumed, and some days the limits are exceeded. A decision was made to decrease the number of API calls using bulk updates. The customer prefers to continue using REST API to avoid architecture changes.

Which REST API composite resources should the integration architect use to allow up to 200 records in one API call?

- A . Batch

- B . SObject Tree

- C . Composite

B

Explanation:

When designing high-volume integrations, the Salesforce Platform Integration Architect must distinguish between standard REST resources and "Composite" resources to optimize API consumption. The Salesforce REST API provides several composite resources to group multiple operations into a single call, thereby reducing the overhead of multiple HTTP requests and helping to stay within daily API limits.

According to Salesforce documentation on Composite Resources, the sObject Tree resource (/services/data/vXX.X/composite/tree/SObjectName/) is designed to handle multiple records in a single request. It is primarily intended for creating record hierarchies (parent-child relationships). It also allows up to 200 records to be processed in a single call, and the root records can even be unrelated records of the same type. This is a significant advantage over the standard Batch and Composite resources. Note that sObject Tree creates new records and does not update existing ones, so it fits "bulk updates" only where those updates are inserts.
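The following minimal Python sketch assembles an sObject Tree request body. It follows the documented shape: an `attributes` object with `type` and `referenceId`, and child records nested under the relationship name. It also enforces the 200-record cap across all nodes. The account and contact values are made up, and `account_tree` is a hypothetical helper.

```python
import json
from typing import Any, Dict, List

MAX_TREE_RECORDS = 200  # documented cap on total records per sObject Tree request


def account_tree(accounts: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Build a body for POST /services/data/vXX.X/composite/tree/Account/."""
    records: List[Dict[str, Any]] = []
    count = 0  # every node, parent or child, counts toward the cap
    for account in accounts:
        count += 1
        node: Dict[str, Any] = {
            "attributes": {"type": "Account", "referenceId": f"ref{count}"},
            "Name": account["Name"],
        }
        children = []
        for contact in account.get("contacts", []):
            count += 1
            children.append({
                "attributes": {"type": "Contact", "referenceId": f"ref{count}"},
                "LastName": contact["LastName"],
            })
        if children:
            # Child records nest under the parent's relationship name.
            node["Contacts"] = {"records": children}
        records.append(node)
    if count > MAX_TREE_RECORDS:
        raise ValueError(f"{count} records exceeds the {MAX_TREE_RECORDS}-record limit")
    return {"records": records}


body = account_tree([{"Name": "Acme", "contacts": [{"LastName": "Smith"}]}, {"Name": "Globex"}])
payload = json.dumps(body)  # request body for the composite/tree call
```

The `referenceId` values let the response map each created record ID back to the input row that produced it.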

The Composite resource and the Batch resource both have a much lower limit of 25 subrequests per call. While each subrequest in a Batch call could technically be a collection operation, the question specifically asks for the resource that natively supports the "200 records" threshold preferred for bulk-style operations within the REST framework. By utilizing the sObject Tree resource, the architect can bundle up to 200 records into a single call, reducing API consumption by up to a factor of 200 compared to one-record-per-call REST requests. This aligns with the requirement to avoid major architectural changes (like switching to Bulk API 2.0) while solving the immediate problem of exceeding the daily API request limit. In the context of the Integration Architect exam, understanding these specific payload limits is crucial for selecting the most efficient "Request-Reply" or "Data Synchronization" pattern.
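The API-consumption comparison is simple arithmetic. The short Python sketch below assumes one record per Batch or Composite subrequest, using the 25-subrequest cap, and fully packed 200-record sObject Tree requests.

```python
from typing import Dict

# Records moved per API call under each approach (one record per subrequest assumed).
RECORDS_PER_CALL: Dict[str, int] = {
    "individual REST calls": 1,
    "Batch or Composite (25 subrequests)": 25,
    "sObject Tree (200 records)": 200,
}


def calls_needed(record_count: int) -> Dict[str, int]:
    # Integer ceiling division: a partly filled request still costs one API call.
    return {name: (record_count + size - 1) // size for name, size in RECORDS_PER_CALL.items()}


for approach, calls in calls_needed(10_000).items():
    print(f"{approach}: {calls} calls")
```

For 10,000 records this prints 10000, 400, and 50 calls respectively.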

Northern Trail Outfitters needs to present shipping costs and estimated delivery times to its customers. Shipping services used vary by region and have similar but distinct service request parameters.

Which integration component capability should be used?

- A . Apex REST Service to implement routing logic to the various shipping services

- B . Enterprise Service Bus to determine which shipping service to use and transform requests to the necessary format

- C . Enterprise Service Bus user interface to collect shipper-specific form data

B

Explanation:

When dealing with multiple external service providers (like different regional shippers) that have varying API requirements, the most scalable architectural choice is an Enterprise Service Bus (ESB) or middleware solution. This scenario describes a classic "Orchestration" and "Transformation" use case.

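An ESB excels at content-based routing, which here means determining which regional shipping service should handle a given request. It also excels at message transformation, mapping one canonical request from Salesforce into each shipper’s similar but distinct parameters. Keeping this logic in the middleware means Salesforce makes a single, consistent call, and adding or changing a shipper does not require changes in Salesforce.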
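The following minimal Python sketch shows the two ESB responsibilities in this scenario: content-based routing by region, and per-shipper transformation of one canonical request. The regions, shipper formats, and field names are entirely hypothetical. In practice this logic would live in the middleware's own flow definitions, not in Salesforce.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class ShippingQuoteRequest:
    """Canonical request Salesforce sends once, regardless of shipper."""
    region: str
    postal_code: str
    weight_kg: float


def to_north_shipper(req: ShippingQuoteRequest) -> Dict[str, object]:
    # Hypothetical shipper A expects grams and a flat 'zip' field.
    return {"zip": req.postal_code, "weightGrams": round(req.weight_kg * 1000)}


def to_south_shipper(req: ShippingQuoteRequest) -> Dict[str, object]:
    # Hypothetical shipper B expects pounds and a nested destination object.
    return {"destination": {"postalCode": req.postal_code},
            "weightLb": round(req.weight_kg * 2.20462, 2)}


ROUTES: Dict[str, Callable[[ShippingQuoteRequest], Dict[str, object]]] = {
    "NORTH": to_north_shipper,
    "SOUTH": to_south_shipper,
}


def route(req: ShippingQuoteRequest) -> Dict[str, object]:
    """Pick the shipper for the region, then reshape the request for it."""
    try:
        transform = ROUTES[req.region.upper()]
    except KeyError:
        raise ValueError(f"no shipper configured for region {req.region!r}") from None
    return transform(req)
```

Adding a regional shipper means adding one transform and one route entry in the middleware. The Salesforce side keeps sending the same canonical request.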

A new Salesforce program has the following high-level abstract requirement: Business processes executed on Salesforce require data updates between their internal systems and Salesforce.

Which relevant detail should an integration architect seek to specifically solve for integration architecture needs of the program?

- A . Core functional and non-functional requirements for User Experience design, Encryption needs, Community, and license choices

- B . Timing aspects, real-time/near real-time (synchronous or asynchronous), batch and update frequency

- C . Integration skills, SME availability, and Program Governance details

B

Explanation:

In the discovery and translation phase of a Salesforce project, an Integration Architect must move beyond high-level business goals to define the technical "DNA" of the data exchange. While organizational readiness and user experience are vital to project success, they do not dictate the architectural patterns required to move data between systems.

The most critical details for designing an integration architecture are the Timing and Volume requirements. Identifying whether a business process is Synchronous or Asynchronous is the primary decision point. For example, if a Salesforce user requires an immediate validation from an external system before they can save a record, a synchronous "Request-Reply" pattern using an Apex Callout is required. If the data update can happen in the background without blocking the user, an asynchronous "Fire-and-Forget" pattern is preferred to improve system performance and user experience.

Furthermore, understanding the Update Frequency (e.g., real-time, hourly, or nightly) and the Data Volume (e.g., 100 records vs. 1 million records) allows the architect to select the appropriate Salesforce API. High-volume, low-frequency updates are best handled by the Bulk API to minimize API limit consumption, while low-volume, high-frequency updates are better suited for the REST API or Streaming API. By specifically seeking out these timing and frequency aspects, the architect ensures that the chosen solution is scalable, stays within platform governor limits, and meets the business’s Service Level Agreements (SLAs). Without these details, the architect risks designing a solution that is either too slow for the business needs or too taxing on system resources.
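One way to make these discovery questions actionable is to record the answers as inputs to a first-pass pattern choice. The Python sketch below is a simplified heuristic that mirrors the two paragraphs above. The default volume and frequency thresholds are illustrative assumptions to be tuned per program, not Salesforce guidance.

```python
from dataclasses import dataclass


@dataclass
class IntegrationNeed:
    user_waits_for_result: bool  # must the user see the answer before continuing?
    records_per_run: int         # data volume per exchange
    runs_per_day: int            # update frequency


def first_pass_recommendation(need: IntegrationNeed,
                              bulk_threshold: int = 2_000,
                              max_daily_batches: int = 24) -> str:
    """Rough pattern/API pick from timing, volume, and frequency answers."""
    if need.user_waits_for_result:
        return "Request-Reply: synchronous callout"
    high_volume = need.records_per_run >= bulk_threshold
    high_frequency = need.runs_per_day > max_daily_batches
    if high_volume and not high_frequency:
        return "Batch Data Synchronization: Bulk API"
    if not high_volume:
        return "Fire-and-Forget: REST API or Streaming API"
    # High volume at high frequency is not covered by the simple rules above.
    return "Review further: high volume at high frequency"
```

The point is not the thresholds themselves. Without the timing, volume, and frequency answers, none of these branches can be evaluated.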

UC has an API-led architecture with three tiers. Requirement: return data to systems of engagement (mobile, web, Salesforce) in different formats and enforce different security protocols.

What should the architect recommend?

- A . Implement an API Gateway that all systems of engagement must interface with first.

- B . Enforce separate security protocols and return formats at the first tier of the API-led architecture.

B

Explanation:

In a standard API-led connectivity model, the First Tier (Experience APIs) is responsible for tailoring data for specific systems of engagement.

The Experience APIs take the core data from the lower tiers and transform it into the specific return formats (e.g., JSON for mobile, XML for legacy web) and security protocols (e.g., OAuth for Salesforce, API Keys for web) required by each consumer.

Option B correctly identifies that these transformations and security enforcements should happen at this outer layer. While an API Gateway (Option A) can provide generic security and rate limiting, it is the Experience API layer that provides the functional transformation and specific protocol requirements defined by the business needs of the engagement systems.
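The following minimal Python sketch shows an Experience-layer dispatcher. The same core record, fetched once from the lower tiers, is checked against the calling channel's security scheme and rendered in that channel's format. The channel names, header names, credential sets, and the choice of bearer tokens for mobile are hypothetical. A real implementation would validate OAuth tokens against an authorization server rather than a set lookup.

```python
import json
import xml.etree.ElementTree as ET
from typing import Dict, Mapping, Tuple

# Core data as returned by the process/system tiers (sample values).
CORE_CUSTOMER: Dict[str, str] = {"id": "C-100", "name": "Sample Customer", "tier": "Gold"}

VALID_BEARER_TOKENS = {"token-abc"}  # hypothetical; stands in for OAuth token validation
VALID_API_KEYS = {"key-123"}         # hypothetical API keys issued to the web channel


def authorized(channel: str, headers: Mapping[str, str]) -> bool:
    if channel in ("salesforce", "mobile"):
        auth = headers.get("Authorization", "")
        return auth.startswith("Bearer ") and auth[len("Bearer "):] in VALID_BEARER_TOKENS
    if channel == "web":
        return headers.get("X-API-Key", "") in VALID_API_KEYS
    return False


def render(channel: str, record: Dict[str, str]) -> str:
    if channel == "web":  # legacy web consumer expects XML
        root = ET.Element("customer")
        for key, value in record.items():
            ET.SubElement(root, key).text = value
        return ET.tostring(root, encoding="unicode")
    return json.dumps(record)  # mobile and Salesforce consume JSON


def experience_api(channel: str, headers: Mapping[str, str]) -> Tuple[int, str]:
    """Enforce the channel's security scheme, then shape the shared core data."""
    if not authorized(channel, headers):
        return 401, ""
    return 200, render(channel, CORE_CUSTOMER)
```

The lower tiers stay channel-agnostic. Only this outer layer knows that the web channel wants XML and an API key.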

A subscription-based media company’s system landscape forces many subscribers to maintain multiple accounts and to log in more than once. An Identity and Access Management (IAM) system, which supports SAML and OpenID, was recently implemented to improve the subscriber experience through self-registration and single sign-on (SSO). The IAM system must integrate with Salesforce to give new self-service customers instant access to Salesforce Community Cloud.

Which requirement should Salesforce Community Cloud support for self-registration and SSO?

- A . OpenID Connect Authentication Provider and JIT provisioning

- B . SAML SSO and Registration Handler

- C . SAML SSO and Just-in-Time (JIT) provisioning

Northern Trail Outfitters has a registration system that is used for workshops offered at its conferences. Attendees use Salesforce Community to register for workshops, but the scheduling system manages workshop availability based on room capacity. It is expected that there will be a big surge of requests for workshop reservations when the conference schedule goes live.

Which Integration pattern should be used to manage the influx in registrations?

- A . Remote Process Invocation Request and Reply

- B . Remote Process Invocation Fire and Forget

- C . Batch Data Synchronization

B

Explanation:

When dealing with a "big surge" or high-volume influx of requests, a synchronous pattern like Request and Reply (Option A) can lead to significant performance bottlenecks. In a synchronous model, each Salesforce user thread must wait for the external scheduling system to respond, which could lead to "Concurrent Request Limit" errors during peak times.

The Remote Process Invocation Fire and Forget pattern is the architecturally sound choice for managing surges. In this pattern, Salesforce captures the registration intent and immediately hands it off to an asynchronous process or a middleware queue. Salesforce does not wait for the external system to process the room capacity logic; instead, it receives a simple acknowledgment that the message was received.

This pattern decouples the front-end user experience from the back-end processing limits. Middleware can then "drip-feed" these registrations into the scheduling system at a rate it can handle. If the scheduling system becomes overwhelmed or goes offline, the messages remain safely in the queue.
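The drip-feed behaviour can be sketched in a few lines of Python. The sketch assumes the middleware drains its queue in fixed-size batches per tick, and that each delivery to the scheduling system either succeeds or fails because the system is unavailable. `RegistrationBuffer`, `per_tick`, and the `deliver` callback signature are hypothetical.

```python
from collections import deque
from typing import Callable, Deque, List


class RegistrationBuffer:
    """Middleware queue that forwards at most per_tick registrations at a time."""

    def __init__(self, per_tick: int) -> None:
        self.per_tick = per_tick
        self.pending: Deque[str] = deque()

    def submit(self, registration_id: str) -> str:
        # Salesforce gets an immediate acknowledgment; processing happens later.
        self.pending.append(registration_id)
        return "ACK"

    def drain(self, deliver: Callable[[str], bool]) -> List[str]:
        """Forward up to per_tick items; stop and keep the rest if delivery fails."""
        delivered: List[str] = []
        while self.pending and len(delivered) < self.per_tick:
            registration_id = self.pending[0]
            if not deliver(registration_id):
                break  # scheduling system offline: leave the message queued
            self.pending.popleft()
            delivered.append(registration_id)
        return delivered
```

A surge of submissions is absorbed instantly by `submit`, while `drain` caps the load on the scheduling system. A failed delivery leaves the message at the head of the queue for the next tick.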

Option C (Batch) is unsuitable because users expect near real-time feedback on their registration attempt, even if the final confirmation is sent a few minutes later. By utilizing Fire and Forget, NTO ensures a responsive Community Experience during the critical launch window while maintaining system stability.