Practice Free NCP-CN Exam Online Questions

An administrator has been trying to deploy an initial AHV-based NKP cluster in a dark site (no Internet connectivity) environment using the following command:

    nkp create cluster nutanix \
      --cluster-name=$CLUSTER_NAME \
      --control-plane-prism-element-cluster=$PE_NAME \
      --worker-prism-element-cluster=$PE_NAME \
      --control-plane-subnets=$SUBNET_ASSOCIATED_WITH_PE \
      --worker-subnets=$SUBNET_ASSOCIATED_WITH_PE \
      --control-plane-endpoint-ip=$AVAILABLE_IP_FROM_SAME_SUBNET \
      --csi-storage-container=$NAME_OF_YOUR_STORAGE_CONTAINER \
      --endpoint=$PC_ENDPOINT_URL \
      --control-plane-vm-image=$NAME_OF_OS_IMAGE_CREATED_BY_NKP_CLI \
      --worker-vm-image=$NAME_OF_OS_IMAGE_CREATED_BY_NKP_CLI \
      --registry-url=${REGISTRY_URL} \
      --registry-mirror-username=${REGISTRY_USERNAME} \
      --registry-mirror-password=${REGISTRY_PASSWORD} \
      --kubernetes-service-load-balancer-ip-range $START_IP-$END_IP \
      --self-managed

Which missing attribute must be added for the deployment to succeed?

- A . --airgapped

- B . --insecure

- C . --registry-url

- D . --registry-username
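When a command like the one above is generated by a script instead of typed by hand, building it as an argument list avoids line-continuation and quoting mistakes. The following is a minimal Python sketch, assuming the question's placeholder variables (CLUSTER_NAME, PE_NAME, and so on) are supplied in a mapping such as os.environ. It reproduces only the flags shown in the question, does not decide which flag is missing, and does not run nkp.

```python
import shlex

# Flag -> variable pairs copied from the command in the question.
# The variable names are the question's placeholders, not real defaults.
VALUE_FLAGS = [
    ("--cluster-name", "CLUSTER_NAME"),
    ("--control-plane-prism-element-cluster", "PE_NAME"),
    ("--worker-prism-element-cluster", "PE_NAME"),
    ("--control-plane-subnets", "SUBNET_ASSOCIATED_WITH_PE"),
    ("--worker-subnets", "SUBNET_ASSOCIATED_WITH_PE"),
    ("--control-plane-endpoint-ip", "AVAILABLE_IP_FROM_SAME_SUBNET"),
    ("--csi-storage-container", "NAME_OF_YOUR_STORAGE_CONTAINER"),
    ("--endpoint", "PC_ENDPOINT_URL"),
    ("--control-plane-vm-image", "NAME_OF_OS_IMAGE_CREATED_BY_NKP_CLI"),
    ("--worker-vm-image", "NAME_OF_OS_IMAGE_CREATED_BY_NKP_CLI"),
    ("--registry-url", "REGISTRY_URL"),
    ("--registry-mirror-username", "REGISTRY_USERNAME"),
    ("--registry-mirror-password", "REGISTRY_PASSWORD"),
]


def build_nkp_command(env):
    """Return the argv list for the question's command (it is not executed here)."""
    argv = ["nkp", "create", "cluster", "nutanix"]
    for flag, var in VALUE_FLAGS:
        argv.append(f"{flag}={env[var]}")
    # The question passes the range as a separate argument: "$START_IP-$END_IP".
    argv += [
        "--kubernetes-service-load-balancer-ip-range",
        f"{env['START_IP']}-{env['END_IP']}",
    ]
    argv.append("--self-managed")
    return argv


def as_shell_line(argv):
    """Quote each argument so the line can be pasted into a POSIX shell."""
    return shlex.join(argv)
```

For example, print(as_shell_line(build_nkp_command(os.environ))) prints one quoted line, while passing the list itself to subprocess.run() would execute it without going through a shell.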

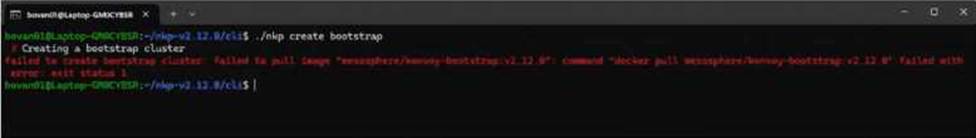

Refer to the exhibit.

A Platform Engineer is preparing to deploy an NKP cluster in an air-gapped environment. The NKP cluster will be deployed on Nutanix infrastructure using the CAPI Nutanix provisioner (CAPX). The engineer plans to create the bootstrap cluster first, then NIB-prep an Ubuntu 22.04 OS image that the Linux engineering team has provided in Prism Central, and finally deploy the NKP cluster. However, while creating the bootstrap cluster (the first step), the engineer received the error shown in the exhibit.

What could be the reason?

- A . The CAPI provisioning method needs to be specified as part of the command nkp create bootstrap nutanix.

- B . The bootstrap cluster image needs to be loaded prior to creating the bootstrap cluster.

- C . The Ubuntu 22.04 OS image needs to be NIB-prepped prior to creating the bootstrap cluster.

- D . The nkp create bootstrap command needs to be executed as root.

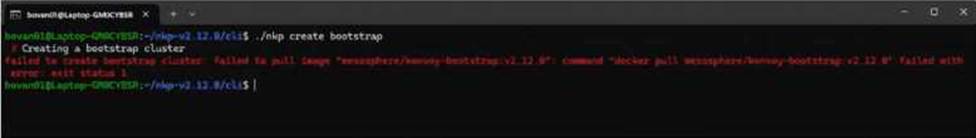

Refer to the exhibit.

A Platform Engineer is preparing to deploy an NKP cluster in an air-gapped environment. The NKP cluster will be deployed on Nutanix infrastructure using the CAPI Nutanix provisioner (CAPX). The engineer has decided to create the bootstrap cluster first, then NIB-prep an Ubuntu 22.04 OS image that the Linux engineering team has provided in Prism Central. After that, the engineer will deploy the NKP cluster. However, during the first step of creating a bootstrap cluster, the engineer received the error shown in the exhibit.

What could be the reason?

- A . The CAPI provisioning method needs to be specified as part of the command nkp create bootstrap nutanix.

- B . The bootstrap cluster image needs to be loaded prior to creating the bootstrap cluster.

- C . The Ubuntu 22.04 OS image needs to be NIB-prepped prior to creating the bootstrap.

- D . The nkp create bootstrap command needs to be executed as root.

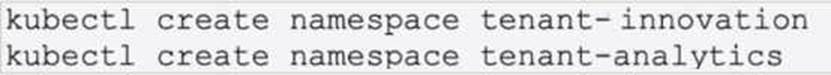

Refer to the exhibit.

A DevOps team faces a growing challenge in managing logs from multiple applications in an NKP cluster. With several teams working on different projects, it is essential to implement a Multi-Tenant Logging system that lets each team access its own logs securely and efficiently. Initially, two namespaces have been configured, one for each project, as shown in the exhibit. A ConfigMap containing the logging configuration has also been configured for each tenant.

Which YAML output corresponds to a retention period of 30 days for tenant-innovation and seven days for tenant-analytics?

- A .

  ```yaml
  apiVersion: v1
  kind: ConfigMap
  metadata:
    name: logging-innovation-config
    namespace: tenant-innovation
  data:
    values.yaml: |
      loki:
        structuredConfig:
          limits_config:
            retention_period: 30d
  ---
  apiVersion: v1
  kind: ConfigMap
  metadata:
    name: logging-analytics-config
    namespace: tenant-analytics
  data:
    values.yaml: |
      loki:
        structuredConfig:
          limits_config:
            retention_period: 7d
  ```

- B .

  ```yaml
  apiVersion: v1
  kind: ConfigMap
  metadata:
    name: logging-innovation-config
    namespace: tenant-innovation
  data:
    values.yaml: |
      loki:
        structuredConfig:
          limits_config:
            retention_period: 30d
  ---
  apiVersion: v1
  kind: ConfigMap
  metadata:
    name: logging-analytics-config
    namespace: tenant-innovation
  data:
    values.yaml: |
      loki:
        structuredConfig:
          limits_config:
            retention_period: 7d
  ```

- C .

  ```yaml
  apiVersion: v1
  kind: ConfigMap
  metadata:
    name: logging-innovation-config
    namespace: tenant-innovation
  data:
    values.yaml: |
      loki:
        structuredConfig:
          limits_config:
            retention_period: 30h
  ---
  apiVersion: v1
  kind: ConfigMap
  metadata:
    name: logging-analytics-config
    namespace: tenant-analytics
  data:
    values.yaml: |
      loki:
        structuredConfig:
          limits_config:
            retention_period: 7h
  ```

- D .

  ```yaml
  apiVersion: v1
  kind: ConfigMap
  metadata:
    name: logging-innovation-config
    namespace: tenant
  data:
    values.yaml: |
      loki:
        structuredConfig:
          limits_config:
            retention_period: 30d
  ---
  apiVersion: v1
  kind: ConfigMap
  metadata:
    name: logging-analytics-config
    namespace: tenant
  data:
    values.yaml: |
      loki:
        structuredConfig:
          limits_config:
            retention_period: 7d
  ```
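Some of the options above differ only in the unit suffix of retention_period. As a worked check, here is a minimal Python sketch that converts single-unit durations into hours, assuming Prometheus-style duration suffixes (h for hours, d for days, w for weeks). It handles only those three units and is not Loki's own duration parser.

```python
import re

# Hours per unit for the simple duration suffixes handled here.
# Assumption: retention_period uses Prometheus-style duration syntax,
# where "h" is hours, "d" is days and "w" is weeks.
HOURS_PER_UNIT = {"h": 1, "d": 24, "w": 24 * 7}


def retention_hours(value):
    """Convert a single-unit duration such as '30d' or '7h' to whole hours."""
    match = re.fullmatch(r"(\d+)([hdw])", value.strip())
    if match is None:
        raise ValueError(f"unsupported duration: {value!r}")
    amount, unit = match.groups()
    return int(amount) * HOURS_PER_UNIT[unit]


for duration in ("30d", "7d", "30h", "7h"):
    print(f"{duration:>4} = {retention_hours(duration)} hours")
```

Running it shows that 30d and 7d correspond to 720 and 168 hours, while 30h and 7h are only 30 and 7 hours.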

A company recently deployed NKP. A Platform Engineer was asked to attach the company's existing Amazon EKS clusters. A workspace and project were created accordingly, and the resource requirements were met.

What does the engineer need to do first to prepare the EKS clusters?

- A . Configure a ConfigMap according to EKS configuration.

- B . Create a service account with cluster-admin permissions.

- C . Configure HAProxy to get connected to EKS clusters.

- D . Deploy cert-manager in the EKS clusters.

Some time ago, an EKS cluster was attached to NKP to be managed through Fleet Management. Now, a Platform Engineer has been asked to disconnect the EKS cluster from NKP for licensing reasons. After the cluster was disconnected, the developers noticed that application changes are still being reflected in the EKS cluster, even though it was successfully detached from NKP.

How should the engineer resolve this issue?

- A . Forcefully detach EKS cluster: nkp detach cluster -c detached-cluster-name --force

- B . Detached cluster must also be deleted from NKP: nkp delete cluster -c detached-cluster-name

- C . The developers must have a bad configuration in their deployment config files. Ask them for a revision or call AWS technical support.

- D . The detached cluster's Flux installation must be manually disconnected from the management Git repository: kubectl -n kommander-flux patch gitrepo management -p '{"spec":{"suspend":true}}' --type merge
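Option D patches the GitRepository with kubectl's merge patch type, which kubectl applies as a JSON merge patch (RFC 7386): only the fields present in the patch change. Below is a minimal Python sketch of that merge algorithm applied to a hypothetical, trimmed-down GitRepository object (the URL and interval are made-up values). It illustrates the patch semantics only and does not contact a cluster.

```python
import json


def json_merge_patch(target, patch):
    """Apply an RFC 7386 JSON merge patch and return a new object."""
    if not isinstance(patch, dict):
        # A non-object patch value replaces the target entirely.
        return patch
    result = dict(target) if isinstance(target, dict) else {}
    for key, value in patch.items():
        if value is None:
            # null in a merge patch deletes the key.
            result.pop(key, None)
        else:
            result[key] = json_merge_patch(result.get(key), value)
    return result


# Hypothetical GitRepository object; the real resource has more fields.
git_repo = {
    "kind": "GitRepository",
    "metadata": {"name": "management", "namespace": "kommander-flux"},
    "spec": {"interval": "1m", "url": "https://git.example.invalid/management.git"},
}

patched = json_merge_patch(git_repo, json.loads('{"spec":{"suspend":true}}'))
print(json.dumps(patched["spec"], sort_keys=True))
```

Only spec.suspend is added. The interval, url and metadata fields are left unchanged, and with suspend set to true, Flux stops reconciling that GitRepository source.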