Practice Free MLA-C01 Exam Online Questions

HOTSPOT

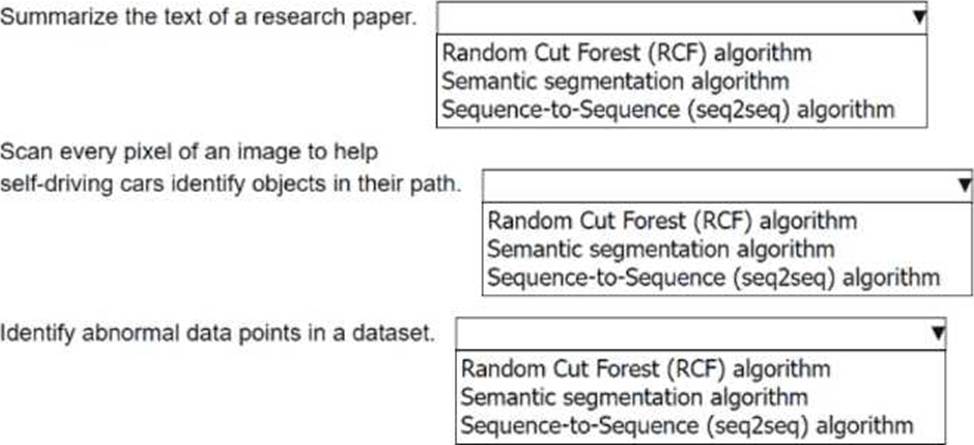

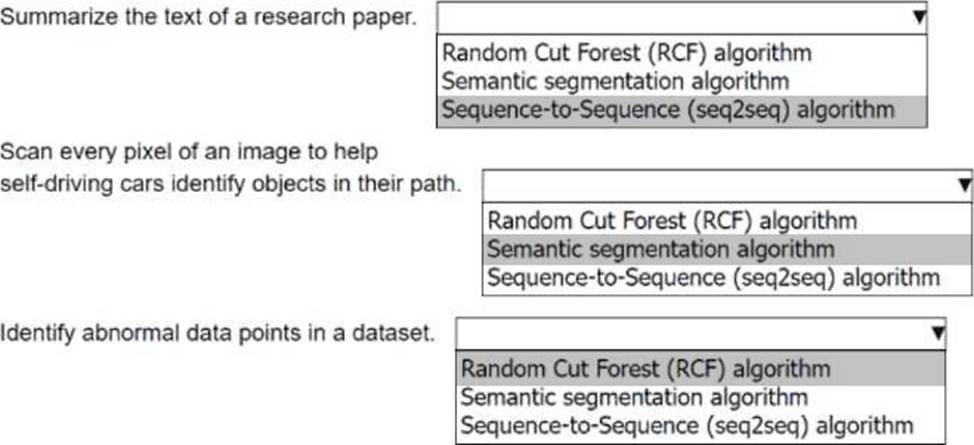

An ML engineer must choose the appropriate Amazon SageMaker algorithm to solve specific AI problems.

Select the correct SageMaker built-in algorithm from the following list for each use case. Each algorithm should be selected one time.

• Random Cut Forest (RCF) algorithm

• Semantic segmentation algorithm

• Sequence-to-Sequence (seq2seq) algorithm

Explanation:

Use case 1:

Summarize the text of a research paper

➡️ Sequence-to-Sequence (seq2seq) algorithm

Why:

Seq2seq models are designed for natural language generation tasks such as text summarization, translation, and paraphrasing. AWS documentation explicitly lists text summarization as a primary use case for the SageMaker seq2seq algorithm.

Use case 2:

Scan every pixel of an image to help self-driving cars identify objects in their path

➡️ Semantic segmentation algorithm

Why:

Semantic segmentation performs pixel-level classification, assigning a class label to every pixel in an image. This is exactly what is required for applications such as autonomous driving, road scene

understanding, and object boundary detection.

Use case 3:

Identify abnormal data points in a dataset

➡️ Random Cut Forest (RCF) algorithm

Why:

Random Cut Forest is an unsupervised anomaly detection algorithm. AWS SageMaker RCF is purpose-built to identify outliers, unusual patterns, and anomalies in numerical datasets, making it ideal for fraud detection, monitoring, and abnormal data point detection.

An ML engineer wants to deploy a workflow that processes streaming IoT sensor data and periodically retrains ML models. The most recent model versions must be deployed to production.

Which service will meet these requirements?

- A . Amazon SageMaker Pipelines

- B . Amazon Managed Workflows for Apache Airflow (MWAA)

- C . AWS Lambda

- D . Apache Spark

A

Explanation:

Amazon SageMaker Pipelines is purpose-built for orchestrating end-to-end ML workflows, including data ingestion, training, evaluation, and deployment. It supports automation, versioning, and deployment of the latest model versions.

MWAA orchestrates general workflows but lacks ML-native features. Lambda cannot handle long-running ML training. Spark processes data but does not manage ML lifecycle.

Therefore, Option A is the correct AWS-native solution.

An ML engineer needs to run intensive model training jobs each month that can take 48C72 hours. The jobs can be interrupted and resumed. The engineer has a fixed budget and needs the most cost-effective compute option.

Which solution will meet these requirements?

- A . Purchase Reserved Instances with partial upfront payment.

- B . Purchase On-Demand Instances.

- C . Purchase SageMaker AI Savings Plans.

- D . Purchase Spot Instances that use automated checkpoints.

D

Explanation:

Amazon EC2 Spot Instances provide unused compute capacity at discounts of up to 90%. AWS documentation strongly recommends Spot Instances for interruptible workloads such as long-running ML training jobs that can resume from checkpoints.

By enabling automatic checkpointing, SageMaker saves model state periodically to Amazon S3. If the Spot Instance is interrupted, training can resume with minimal loss of progress.

Reserved Instances and Savings Plans require long-term commitment and are not as cost-effective for sporadic workloads. On-Demand Instances are the most expensive option.

Therefore, Option D is the most cost-effective solution.

A company needs to give its ML engineers appropriate access to training data. The ML engineers must access training data from only their own business group. The ML engineers must not be allowed to access training data from other business groups.

The company uses a single AWS account and stores all the training data in Amazon S3 buckets. All ML model training occurs in Amazon SageMaker.

Which solution will provide the ML engineers with the appropriate access?

- A . Enable S3 bucket versioning.

- B . Configure S3 Object Lock settings for each user.

- C . Add cross-origin resource sharing (CORS) policies to the S3 buckets.

- D . Create IAM policies. Attach the policies to IAM users or IAM roles.

D

Explanation:

By creating IAM policies with specific permissions, you can restrict access to Amazon S3 buckets or objects based on the user’s business group. These policies can be attached to IAM users or IAM roles associated with the ML engineers, ensuring that each engineer can only access training data belonging to their group. This approach is secure, scalable, and aligns with AWS best practices for access control.

A company has a Retrieval Augmented Generation (RAG) application that uses a vector database to

store embeddings of documents. The company must migrate the application to AWS and must implement a solution that provides semantic search of text files. The company has already migrated the text repository to an Amazon S3 bucket.

Which solution will meet these requirements?

- A . Use an AWS Batch job to process the files and generate embeddings. Use AWS Glue to store the embeddings. Use SQL queries to perform the semantic searches.

- B . Use a custom Amazon SageMaker AI notebook to run a custom script to generate embeddings. Use SageMaker Feature Store to store the embeddings. Use SQL queries to perform the semantic searches.

- C . Use the Amazon Kendra S3 connector to ingest the documents from the S3 bucket into Amazon Kendra. Query Amazon Kendra to perform the semantic searches.

- D . Use an Amazon Textract asynchronous job to ingest the documents from the S3 bucket. Query Amazon Textract to perform the semantic searches.

C

Explanation:

The key requirement is semantic search over text documents that already reside in Amazon S3. AWS provides Amazon Kendra, a fully managed service specifically designed for semantic and natural language search across unstructured text.

Amazon Kendra natively supports S3 connectors, which can ingest documents directly from an S3 bucket, automatically process the text, generate embeddings, and index the content for semantic retrieval. This removes the need for the company to manage embedding generation, vector storage, or similarity search infrastructure. Queries can be expressed in natural language, making Kendra well suited for RAG-style applications.

Option A and B require building and maintaining a custom embedding pipeline and do not provide a true vector similarity search engine using SQL. SageMaker Feature Store is not intended to function as a vector database for semantic search.

Option D is incorrect because Amazon Textract is an OCR service for extracting text from scanned documents and images; it does not support semantic search.

Therefore, ingesting documents using the Amazon Kendra S3 connector and querying Kendra is the correct and AWS-recommended solution.

An ML engineer is setting up an Amazon SageMaker AI pipeline for an ML model. The pipeline must automatically initiate a re-training job if any data drift is detected.

How should the ML engineer set up the pipeline to meet this requirement?

- A . Use an AWS Glue crawler and an AWS Glue extract, transform, and load (ETL) job to detect data drift. Use AWS Glue triggers to automate the retraining job.

- B . Use Amazon Managed Service for Apache Flink to detect data drift. Use an AWS Lambda function to automate the re-training job.

- C . Use SageMaker Model Monitor to detect data drift. Use an AWS Lambda function to automate the re-training job.

- D . Use Amazon Quick Suite (previously known as Amazon QuickSight) anomaly detection to detect data drift. Use an AWS Step Functions workflow to automate the re-training job.

C

Explanation:

AWS provides Amazon SageMaker Model Monitor as a native solution for detecting data drift and model quality issues in production ML pipelines. Model Monitor continuously analyzes incoming inference data and compares it with baseline training data to identify schema drift, feature distribution drift, and data quality anomalies.

When drift thresholds are violated, Model Monitor generates CloudWatch metrics and alerts. These alerts can directly trigger an AWS Lambda function, which can then programmatically initiate a SageMaker retraining job or start a SageMaker Pipeline execution. This design is explicitly documented by AWS as the recommended architecture for automated retraining workflows.

Option A is incorrect because AWS Glue is a data integration service and does not provide ML-specific drift detection capabilities.

Option B is incorrect because Apache Flink is designed for stream processing, not ML data drift detection.

Option D is incorrect because Amazon QuickSight anomaly detection is intended for business intelligence metrics, not ML feature drift.

Therefore, using SageMaker Model Monitor with AWS Lambda automation is the correct, AWS-native solution for drift-driven retraining.

An ML engineer is using AWS CodeDeploy to deploy new container versions for inference on Amazon ECS.

The deployment must shift 10% of traffic initially, and the remaining 90% must shift within 10C15 minutes.

Which deployment configuration meets these requirements?

- A . CodeDeployDefault.LambdaLinear10PercentEvery10Minutes

- B . CodeDeployDefault.ECSAllAtOnce

- C . CodeDeployDefault.ECSCanary10Percent15Minutes

- D . CodeDeployDefault.LambdaCanary10Percent15Minutes

C

Explanation:

AWS CodeDeploy provides predefined deployment configurations for ECS that support canary and linear traffic shifting. The ECSCanary10Percent15Minutes configuration shifts 10% of traffic initially, waits 15 minutes, and then shifts the remaining traffic.

This matches the exact requirement: a 10% initial shift followed by the remaining 90% within the specified time window.

Lambda deployment configurations are not applicable to ECS. ECSAllAtOnce does not perform gradual traffic shifting.

AWS documentation explicitly defines ECSCanary10Percent15Minutes for controlled, low-risk ECS deployments.

Therefore, Option C is the correct and AWS-verified answer.

An ML engineer receives datasets that contain missing values, duplicates, and extreme outliers. The ML engineer must consolidate these datasets into a single data frame and must prepare the data for ML.

Which solution will meet these requirements?

- A . Use Amazon SageMaker Data Wrangler to import the datasets and to consolidate them into a single data frame. Use the cleansing and enrichment functionalities to prepare the data.

- B . Use Amazon SageMaker Ground Truth to import the datasets and to consolidate them into a single data frame. Use the human-in-the-loop capability to prepare the data.

- C . Manually import and merge the datasets. Consolidate the datasets into a single data frame. Use Amazon Q Developer to generate code snippets that will prepare the data.

- D . Manually import and merge the datasets. Consolidate the datasets into a single data frame. Use Amazon SageMaker data labeling to prepare the data.

A

Explanation:

Amazon SageMaker Data Wrangler provides a comprehensive solution for importing, consolidating, and preparing datasets for ML. It offers tools to handle missing values, duplicates, and outliers through its built-in cleansing and enrichment functionalities, allowing the ML engineer to efficiently prepare the data in a single environment with minimal manual effort.

An ML engineer receives datasets that contain missing values, duplicates, and extreme outliers. The ML engineer must consolidate these datasets into a single data frame and must prepare the data for ML.

Which solution will meet these requirements?

- A . Use Amazon SageMaker Data Wrangler to import the datasets and to consolidate them into a single data frame. Use the cleansing and enrichment functionalities to prepare the data.

- B . Use Amazon SageMaker Ground Truth to import the datasets and to consolidate them into a single data frame. Use the human-in-the-loop capability to prepare the data.

- C . Manually import and merge the datasets. Consolidate the datasets into a single data frame. Use Amazon Q Developer to generate code snippets that will prepare the data.

- D . Manually import and merge the datasets. Consolidate the datasets into a single data frame. Use Amazon SageMaker data labeling to prepare the data.

A

Explanation:

Amazon SageMaker Data Wrangler provides a comprehensive solution for importing, consolidating, and preparing datasets for ML. It offers tools to handle missing values, duplicates, and outliers through its built-in cleansing and enrichment functionalities, allowing the ML engineer to efficiently prepare the data in a single environment with minimal manual effort.

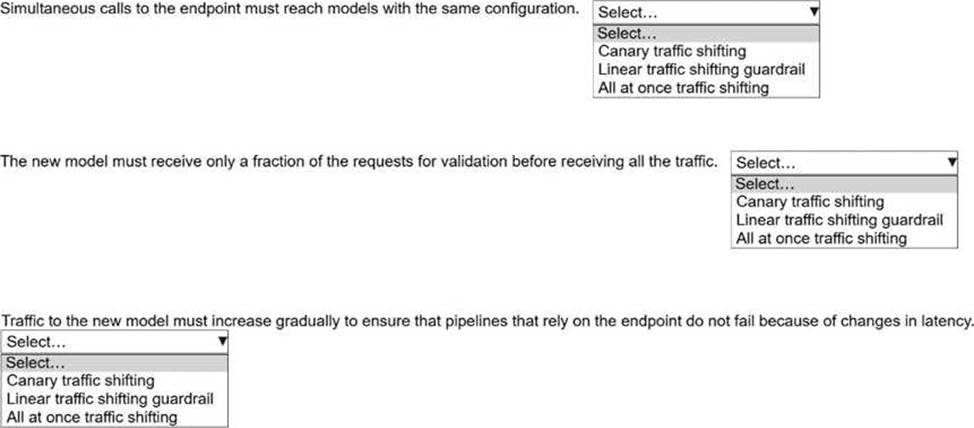

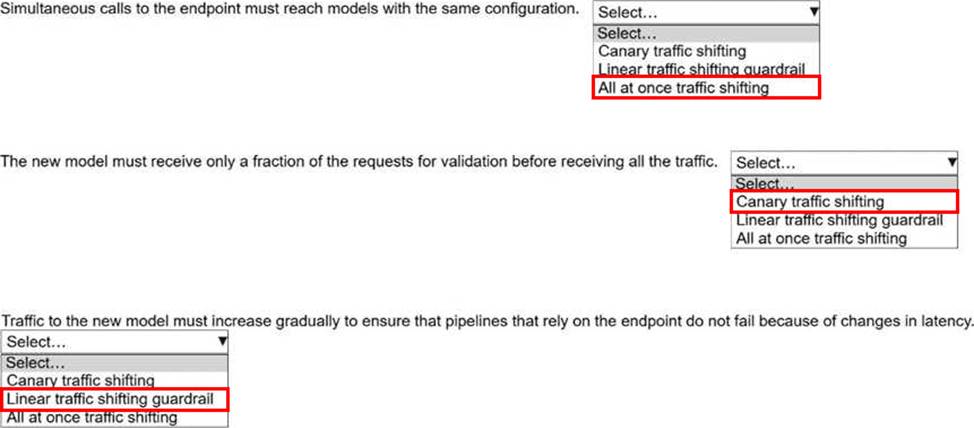

HOTSPOT

A company has multiple models that are hosted on Amazon SageMaker Al. The models need to be re-trained. The requirements for each model are different, so the company needs to choose different deployment strategies to transfer all requests to a new model.

Select the correct strategy from the following list for each requirement. Select each strategy one time. (Select THREE.)

. Canary traffic shifting

. Linear traffic shifting guardrail

. All at once traffic shifting

Explanation:

1️ ⃣ Simultaneous calls to the endpoint must reach models with the same configuration

Correct strategy: All at once traffic shifting

Why:

“All at once” replaces the old model with the new model immediately. After the switch, all concurrent requests hit only the new model configuration, guaranteeing configuration consistency across simultaneous calls.

2️ ⃣ The new model must receive only a fraction of the requests for validation before receiving all the traffic

Correct strategy: Canary traffic shifting

Why:

Canary deployments route a small percentage of traffic (for example, 5% or 10%) to the new model first. This allows validation of correctness and performance before shifting 100% of traffic.

3️ ⃣ Traffic to the new model must increase gradually to ensure that pipelines that rely on the endpoint do not fail because of changes in latency

Correct strategy: Linear traffic shifting guardrail

Why:

Linear traffic shifting gradually increases traffic in equal increments over time and includes guardrails (such as CloudWatch alarms) to automatically roll back if latency or errors exceed thresholds.