Practice Free MLA-C01 Exam Online Questions

A company uses Amazon SageMaker AI to create ML models. The data scientists need fine-grained control of ML workflows, DAG visualization, experiment history, and model governance for auditing and compliance.

Which solution will meet these requirements?

- A . Use AWS CodePipeline with SageMaker Studio and SageMaker ML Lineage Tracking.

- B . Use AWS CodePipeline with SageMaker Experiments.

- C . Use SageMaker Pipelines with SageMaker Studio and SageMaker ML Lineage Tracking.

- D . Use SageMaker Pipelines with SageMaker Experiments.

C

Explanation:

Amazon SageMaker Pipelines provides native orchestration of ML workflows with fine-grained control, DAG-based visualization, and seamless integration with SageMaker Studio. AWS documentation explicitly states that Pipelines is designed for end-to-end ML workflow automation and visualization.

SageMaker ML Lineage Tracking records relationships between datasets, models, training jobs, and endpoints, enabling full auditability and governance, which is essential for compliance.

SageMaker Experiments tracks experiment metrics but does not provide lineage-level governance.

CodePipeline is a general CI/CD service and lacks ML-specific DAG visualization and lineage tracking.

AWS best practices recommend combining SageMaker Pipelines + SageMaker Studio + ML Lineage Tracking for enterprise-grade ML workflow management.

Therefore, Option C is the correct and AWS-verified solution.

A company is using an Amazon Redshift database as its single data source. Some of the data is sensitive.

A data scientist needs to use some of the sensitive data from the database. An ML engineer must give the data scientist access to the data without transforming the source data and without storing anonymized data in the database.

Which solution will meet these requirements with the LEAST implementation effort?

- A . Configure dynamic data masking policies to control how sensitive data is shared with the data scientist at query time.

- B . Create a materialized view with masking logic on top of the database. Grant the necessary read permissions to the data scientist.

- C . Unload the Amazon Redshift data to Amazon S3. Use Amazon Athena to create schema-on-read with masking logic. Share the view with the data scientist.

- D . Unload the Amazon Redshift data to Amazon S3. Create an AWS Glue job to anonymize the data. Share the dataset with the data scientist.

A

Explanation:

Dynamic data masking allows you to control how sensitive data is presented to users at query time, without modifying or storing transformed versions of the source data. Amazon Redshift supports dynamic data masking, which can be implemented with minimal effort. This solution ensures that the data scientist can access the required information while sensitive data remains protected, meeting the requirements efficiently and with the least implementation effort.

A company is using an AWS Lambda function to monitor the metrics from an ML model. An ML engineer needs to implement a solution to send an email message when the metrics breach a threshold.

Which solution will meet this requirement?

- A . Log the metrics from the Lambda function to AWS CloudTrail. Configure a CloudTrail trail to send the email message.

- B . Log the metrics from the Lambda function to Amazon CloudFront. Configure an Amazon CloudWatch alarm to send the email message.

- C . Log the metrics from the Lambda function to Amazon CloudWatch. Configure a CloudWatch alarm to send the email message.

- D . Log the metrics from the Lambda function to Amazon CloudWatch. Configure an Amazon CloudFront rule to send the email message.

D

Explanation:

Logging the metrics to Amazon CloudWatch allows the metrics to be tracked and monitored effectively.

CloudWatch Alarms can be configured to trigger when metrics breach a predefined threshold.

The alarm can be set to notify through Amazon Simple Notification Service (SNS), which can send email messages to the configured recipients.

This is the standard and most efficient way to achieve the desired functionality.

A company has an application that uses different APIs to generate embeddings for input text. The company needs to implement a solution to automatically rotate the API tokens every 3 months.

Which solution will meet this requirement?

- A . Store the tokens in AWS Secrets Manager. Create an AWS Lambda function to perform the rotation.

- B . Store the tokens in AWS Systems Manager Parameter Store. Create an AWS Lambda function to perform the rotation.

- C . Store the tokens in AWS Key Management Service (AWS KMS). Use an AWS managed key to perform the rotation.

- D . Store the tokens in AWS Key Management Service (AWS KMS). Use an AWS owned key to perform the rotation.

A

Explanation:

AWS Secrets Manager is designed for securely storing, managing, and automatically rotating secrets, including API tokens. By configuring a Lambda function for custom rotation logic, the solution can automatically rotate the API tokens every 3 months as required. Secrets Manager simplifies secret management and integrates seamlessly with other AWS services, making it the ideal choice for this use case.

A company that has hundreds of data scientists is using Amazon SageMaker to create ML models.

The models are in model groups in the SageMaker Model Registry.

The data scientists are grouped into three categories: computer vision, natural language processing (NLP), and speech recognition. An ML engineer needs to implement a solution to organize the existing models into these groups to improve model discoverability at scale. The solution must not affect the integrity of the model artifacts and their existing groupings.

Which solution will meet these requirements?

- A . Create a custom tag for each of the three categories. Add the tags to the model packages in the SageMaker Model Registry.

- B . Create a model group for each category. Move the existing models into these category model groups.

- C . Use SageMaker ML Lineage Tracking to automatically identify and tag which model groups should contain the models.

- D . Create a Model Registry collection for each of the three categories. Move the existing model groups into the collections.

A

Explanation:

Using custom tags allows you to organize and categorize models in the SageMaker Model Registry without altering their existing groupings or affecting the integrity of the model artifacts. Tags are a lightweight and scalable way to improve model discoverability at scale, enabling the data scientists to filter and identify models by category (e.g., computer vision, NLP, speech recognition). This approach meets the requirements efficiently without introducing structural changes to the existing model registry setup.

Case study

An ML engineer is developing a fraud detection model on AWS. The training dataset includes transaction logs, customer profiles, and tables from an on-premises MySQL database. The transaction logs and customer profiles are stored in Amazon S3.

The dataset has a class imbalance that affects the learning of the model’s algorithm. Additionally, many of the features have interdependencies. The algorithm is not capturing all the desired underlying patterns in the data.

After the data is aggregated, the ML engineer must implement a solution to automatically detect anomalies in the data and to visualize the result.

Which solution will meet these requirements?

- A . Use Amazon Athena to automatically detect the anomalies and to visualize the result.

- B . Use Amazon Redshift Spectrum to automatically detect the anomalies. Use Amazon QuickSight to visualize the result.

- C . Use Amazon SageMaker Data Wrangler to automatically detect the anomalies and to visualize the result.

- D . Use AWS Batch to automatically detect the anomalies. Use Amazon QuickSight to visualize the result.

C

Explanation:

Amazon SageMaker Data Wrangler is a comprehensive tool that streamlines the process of data preparation and offers built-in capabilities for anomaly detection and visualization.

Key Features of SageMaker Data Wrangler:

Data Importation: Connects seamlessly to various data sources, including Amazon S3 and on-premises databases, facilitating the aggregation of transaction logs, customer profiles, and MySQL tables.

Anomaly Detection: Provides built-in analyses to detect anomalies in time series data, enabling the identification of outliers that may indicate fraudulent activities.

Visualization: Offers a suite of visualization tools, such as histograms and scatter plots, to help understand data distributions and relationships, which are crucial for feature engineering and model development.

Implementation Steps:

Data Aggregation:

Import data from Amazon S3 and on-premises MySQL databases into SageMaker Data Wrangler.

Utilize Data Wrangler’s data flow interface to combine and preprocess datasets, ensuring a unified dataset for analysis.

Anomaly Detection:

Apply the anomaly detection analysis feature to identify outliers in the dataset.

Configure parameters such as the anomaly threshold to fine-tune the detection sensitivity.

Visualization:

Use built-in visualization tools to create charts and graphs that depict data distributions and highlight anomalies.

Interpret these visualizations to gain insights into potential fraud patterns and feature interdependencies.

Advantages of Using SageMaker Data Wrangler:

Integrated Workflow: Combines data preparation, anomaly detection, and visualization within a single interface, streamlining the ML development process.

Operational Efficiency: Reduces the need for multiple tools and complex integrations, thereby minimizing operational overhead.

Scalability: Handles large datasets efficiently, making it suitable for extensive transaction logs and customer profiles.

By leveraging SageMaker Data Wrangler, the ML engineer can effectively detect anomalies and visualize results, facilitating the development of a robust fraud detection model.

Analyze and Visualize – Amazon SageMaker

Transform Data – Amazon SageMaker

A credit card company has a fraud detection model in production on an Amazon SageMaker endpoint. The company develops a new version of the model. The company needs to assess the new model’s performance by using live data and without affecting production end users.

Which solution will meet these requirements?

- A . Set up SageMaker Debugger and create a custom rule.

- B . Set up blue/green deployments with all-at-once traffic shifting.

- C . Set up blue/green deployments with canary traffic shifting.

- D . Set up shadow testing with a shadow variant of the new model.

D

Explanation:

Shadow testing allows you to send a copy of live production traffic to a shadow variant of the new model while keeping the existing production model unaffected. This enables you to evaluate the performance of the new model in real-time with live data without impacting end users. SageMaker endpoints support this setup by allowing traffic mirroring to the shadow variant, making it an ideal solution for assessing the new model’s performance.

A company is using Amazon SageMaker to create ML models. The company’s data scientists need fine-grained control of the ML workflows that they orchestrate. The data scientists also need the ability to visualize SageMaker jobs and workflows as a directed acyclic graph (DAG). The data scientists must keep a running history of model discovery experiments and must establish model governance for auditing and compliance verifications.

Which solution will meet these requirements?

- A . Use AWS CodePipeline and its integration with SageMaker Studio to manage the entire ML workflows. Use SageMaker ML Lineage Tracking for the running history of experiments and for auditing and compliance verifications.

- B . Use AWS CodePipeline and its integration with SageMaker Experiments to manage the entire ML workflows. Use SageMaker Experiments for the running history of experiments and for auditing and compliance verifications.

- C . Use SageMaker Pipelines and its integration with SageMaker Studio to manage the entire ML workflows. Use SageMaker ML Lineage Tracking for the running history of experiments and for auditing and compliance verifications.

- D . Use SageMaker Pipelines and its integration with SageMaker Experiments to manage the entire ML workflows. Use SageMaker Experiments for the running history of experiments and for auditing and compliance verifications.

C

Explanation:

SageMaker Pipelines provides a directed acyclic graph (DAG) view for managing and visualizing ML workflows with fine-grained control. It integrates seamlessly with SageMaker Studio, offering an intuitive interface for workflow orchestration.

SageMaker ML Lineage Tracking keeps a running history of experiments and tracks the lineage of datasets, models, and training jobs. This feature supports model governance, auditing, and compliance verification requirements.

A company is creating an application that will recommend products for customers to purchase. The application will make API calls to Amazon Q Business. The company must ensure that responses from Amazon Q Business do not include the name of the company’s main competitor.

Which solution will meet this requirement?

- A . Configure the competitor’s name as a blocked phrase in Amazon Q Business.

- B . Configure an Amazon Q Business retriever to exclude the competitor’s name.

- C . Configure an Amazon Kendra retriever for Amazon Q Business to build indexes that exclude the competitor’s name.

- D . Configure document attribute boosting in Amazon Q Business to deprioritize the competitor’s name.

A

Explanation:

Amazon Q Business allows configuring blocked phrases to exclude specific terms or phrases from the responses. By adding the competitor’s name as a blocked phrase, the company can ensure that it will not appear in the API responses, meeting the requirement efficiently with minimal configuration.

HOTSPOT

A company stores historical data in .csv files in Amazon S3. Only some of the rows and columns in the .csv files are populated. The columns are not labeled. An ML

engineer needs to prepare and store the data so that the company can use the data to train ML models.

Select and order the correct steps from the following list to perform this task. Each step should be selected one time or not at all. (Select and order three.)

• Create an Amazon SageMaker batch transform job for data cleaning and feature engineering.

• Store the resulting data back in Amazon S3.

• Use Amazon Athena to infer the schemas and available columns.

• Use AWS Glue crawlers to infer the schemas and available columns.

• Use AWS Glue DataBrew for data cleaning and feature engineering.

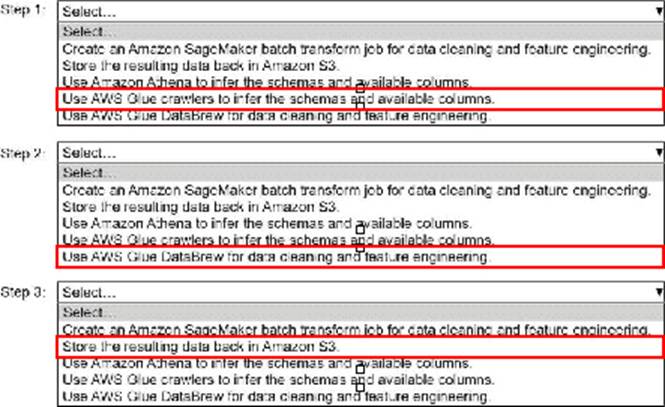

Explanation:

Step 1: Use AWS Glue crawlers to infer the schemas and available columns.

Step 2: Use AWS Glue DataBrew for data cleaning and feature engineering.

Step 3: Store the resulting data back in Amazon S3.

Step 1: Use AWS Glue Crawlers to Infer Schemas and Available Columns

Why? The data is stored in .csv files with unlabeled columns, and Glue Crawlers can scan the raw data in Amazon S3 to automatically infer the schema, including available columns, data types, and any missing or incomplete entries.

How? Configure AWS Glue Crawlers to point to the S3 bucket containing the .csv files, and run the crawler to extract metadata. The crawler creates a schema in the AWS Glue Data Catalog, which can then be used for subsequent transformations.

Step 2: Use AWS Glue DataBrew for Data Cleaning and Feature Engineering

Why? Glue DataBrew is a visual data preparation tool that allows for comprehensive cleaning and transformation of data. It supports imputation of missing values, renaming columns, feature

engineering, and more without requiring extensive coding.

How? Use Glue DataBrew to connect to the inferred schema from Step 1 and perform data cleaning and feature engineering tasks like filling in missing rows/columns, renaming unlabeled columns, and creating derived features.

Step 3: Store the Resulting Data Back in Amazon S3

Why? After cleaning and preparing the data, it needs to be saved back to Amazon S3 so that it can be used for training machine learning models.

How? Configure Glue DataBrew to export the cleaned data to a specific S3 bucket location. This ensures the processed data is readily accessible for ML workflows.

Order Summary:

Use AWS Glue crawlers to infer schemas and available columns.

Use AWS Glue DataBrew for data cleaning and feature engineering.

Store the resulting data back in Amazon S3.

This workflow ensures that the data is prepared efficiently for ML model training while leveraging AWS services for automation and scalability.