Practice Free GES-C01 Exam Online Questions

A Gen AI developer is using ‘SNOWFLAKE.CORTEX.COMPLETE" to generate concise summaries of legal documents. Initially, the LLM sometimes provides overly creative or slightly off-topic responses, indicating potential ‘hallucinations’ or a lack of focus. To improve the factual accuracy and conciseness of the summaries, which combination of prompt engineering techniques and ‘COMPLETE’ function options should be prioritized?

- A . Use a system prompt defining a persona like ‘creative writer’ and set ‘temperature’ to 0.9 to encourage diverse summaries.

- B . Implement ‘first principles thinking’ in the prompt, clearly outlining logical steps for summarization, and set ‘temperature’ to 0 for deterministic output.

- C . Instruct the model to ‘think out loud’ with an inner monologue in the prompt, and set ‘max_tokens’ to a large value to allow full reasoning.

- D . Provide a ‘task description’ focusing on broad themes and set ‘top_p’ to 0.5 to balance creativity and relevance.

- E . Rely solely on a comprehensive list of ‘stop sequences’ to end generation when the summary is complete.

B

Explanation:

To reduce ‘hallucinations’ and improve factual accuracy and conciseness, applying ‘first principles thinking’ by breaking prompts into logical steps helps the model respond from foundational concepts rather than assumptions. Setting ‘temperature’ to 0 yields the most consistent and deterministic results, which is crucial for factual accuracy and conciseness in summaries. Defining a ‘creative writer’ persona or using a high ‘temperature’ would increase diversity and potentially lead to more hallucinations (Option A). Instructing the model to ‘think out loud’ (Option C) enhances transparency of thought but does not directly enforce factual accuracy or conciseness in the way ‘first principles’ and low ‘temperature’ do. ‘max_tokens’ (Option C) affects output length, not necessarily accuracy or conciseness. A broad ‘task description’ (Option D) might not be specific enough, and ‘top_p’ also influences diversity. ‘Stop sequences’ (Option E) help with truncation but do not prevent factual errors or improve conciseness directly from the model’s generation process.

A data application developer is tasked with building a multi-turn conversational AI application using Streamlit in Snowflake (SiS) that leverages the COMPLETE (SNOWFLAKE. CORTEX) LLM function.

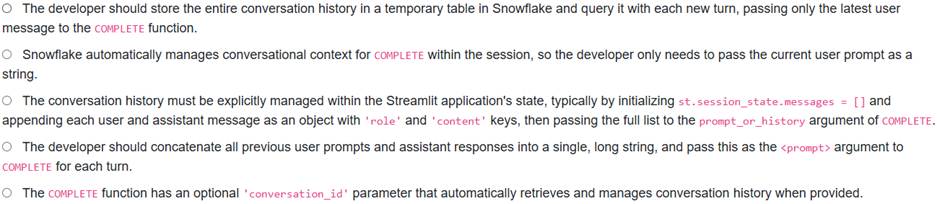

To ensure the conversation flows naturally and the LLM maintains context from previous interactions, which of the following is the most appropriate method for handling and passing the conversation history?

- A . Option A

- B . Option B

- C . Option C

- D . Option D

- E . Option E

C

Explanation:

To provide a stateful, conversational experience with the ‘COMPLETE (SNOWFLAKE.CORTEX)’ function, all previous user prompts and model responses must be passed as part of the argument. This argument accepts an array of objects, where each object represents a turn and contains a ‘role’ (‘system’, ‘user’, or ‘assistant’) and a ‘content’ key, presented in chronological order. In Streamlit, st.session_states is the standard and recommended mechanism for storing and managing data across reruns of the application, making it ideal for maintaining chat history.

Option A is inefficient and incorrect because ‘COMPLETE does not inherently manage history from external tables.

Option B is incorrect as ‘COMPLETE does not retain state between calls; history must be explicitly managed.

Option D is less effective than structured history, as it loses the semantic role distinction and can be less accurate for LLMs.

Option E describes a non-existent parameter for the ‘COMPLETE’ function.

A global analytics firm is developing a Retrieval Augmented Generation (RAG) system in Snowflake to answer customer queries across a large repository of technical documentation, which includes documents in English, German, and Spanish. They are looking to use a Snowflake Cortex embedding model to convert document chunks into vector embeddings for their Cortex Search Service.

Which of the following considerations are critical when selecting an appropriate embedding model to optimize for both query relevance and cost-efficiency for their multilingual RAG application? (Select all that apply)

- A . Option A

- B . Option B

- C . Option C

- D . Option D

- E . Option E

B,C

Explanation:

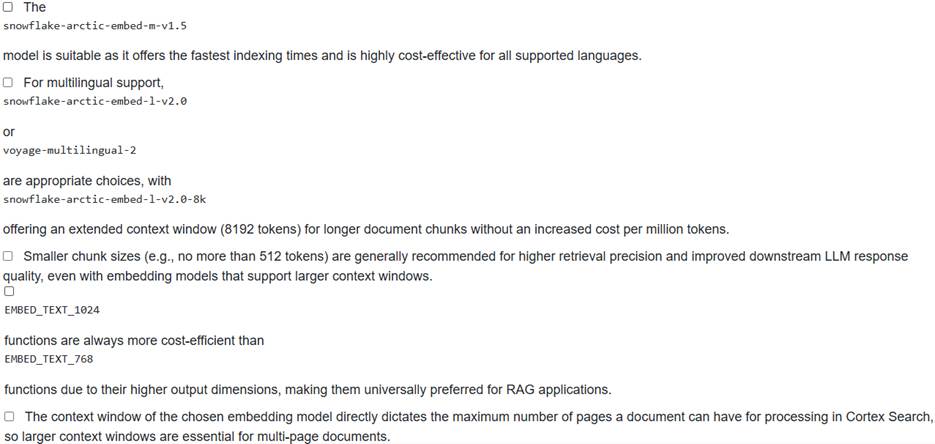

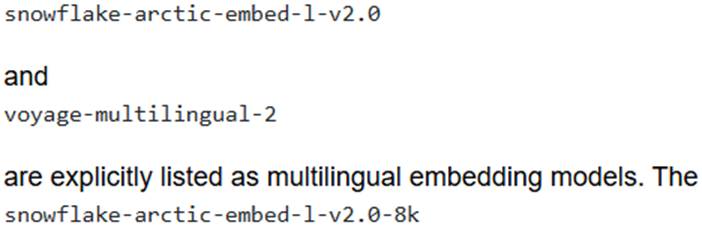

Option B is correct because both

model provides an increased context window of 8000 tokens while maintaining the same cost per million tokens (0.05 credits) as the 512-token version of

![]()

Option C is correct because Snowflake recommends splitting text into chunks of no more than 512 tokens for best search results with Cortex Search, as research shows this typically leads to higher retrieval precision and improved downstream LLM response quality, even when using longer-context embedding models.

Option A is incorrect because

![]()

is an English-only embedding model, which does not meet the requirement for multilingual documentation.

Option D is incorrect; the cost per million tokens for EMBED_TEXT_1024 models (e.g., 0.05-0.07 credits) is not inherently more cost-efficient than EMBED_TEXT_768 models (e.g., 0.03 credits), and cost-efficiency depends on the specific model and use case, not just output dimensions.

Option E is incorrect; the context window of an embedding model refers to the maximum length of a text input (chunk) it can process. The maximum pages a document can have (e.g., 300 pages for Document AI) is a separate document requirement, not directly determined by the embedding model’s context window.

A security engineer is developing an application that uses the Snowflake Cortex REST API to interact with LLMs, specifically to obtain structured outputs for text classification and to ensure secure communication. They are focusing on the /api/v2/cortex/ inference: complete endpoint.

Which of the following statements correctly describe aspects of this interaction?

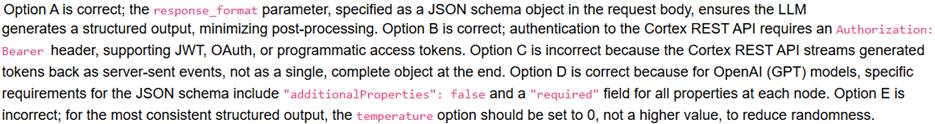

- A . To strictly enforce a JSON schema for the LLM’s response, the response_format parameter must be included in the request body, supplied as a JSON schema object, which helps reduce post-processing efforts.

- B . Authentication for Cortex REST API requests is primarily handled through an Authorization: Bearer header, where the token can be a JSON Web Token (JWT), OAuth token, or programmatic access token.

- C . The Cortex REST API for LLM inference always returns the complete LLM response as a single, fully-formed JSON object once generation is finished, regardless of any streaming options.

- D . For models like OpenAI (GPT) used via the Cortex REST API with structured output, the JSON schema in the response_format field must include "additionalProperties": false and a "required" field listing all properties at every node.

- E . To ensure the most consistent and deterministic structured output from the LLM, it is recommended to set the temperature option to a higher value, such as 0.7 or 1.0, in the request payload.

A,B,D

Explanation:

A data engineer is developing a Snowflake Cortex LLM application that processes sensitive customer feedback. To ensure that generated responses from the ‘COMPLETE’ function are filtered for potentially unsafe or harmful content, they need to enable Cortex Guard.

Which of the following SQL ‘COMPLETE function calls correctly demonstrates the enablement of Cortex Guard with the default unsafe response message?

A)

![]()

B)

![]()

C)

![]()

D)

![]()

E)

![]()

- A . Option A

- B . Option B

- C . Option C

- D . Option D

- E . Option E

C

Explanation:

To enable Cortex Guard within the ‘COMPLETE function, the ‘guardrails’ argument must be set to ‘TRUE within the options object. When is not explicitly specified, the default message ‘Response filtered by Cortex Guard’ is returned for unsafe content.

Option C correctly uses ‘guardrails: TRUE without specifying ‘response_when_unsafe’, thus implying the default behavior Option B includes, which is not the default.

Options A, D, and E use incorrect or non-existent arguments for enabling guardrails.

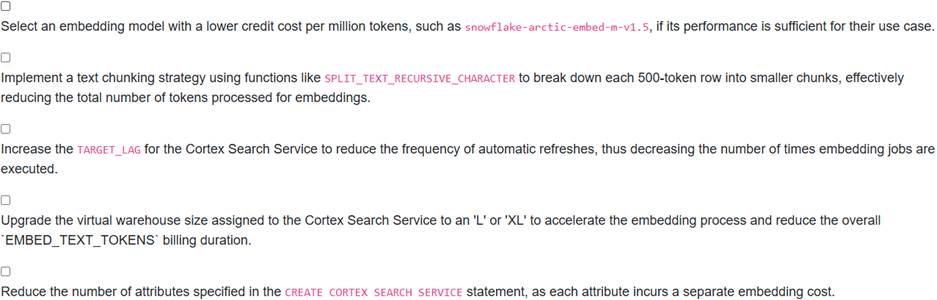

A development team is creating a new search application using Snowflake Cortex Search. They are currently using a ‘snowflake-arctic- embed-I-v2.0’ embedding model. After an initial load of 10 million rows, each with approximately 500 tokens of text, they observe a significant ‘EMBED_TEXT_TOKENS’ cost. They want to minimize these costs for future updates and ongoing operations. Considering their goal to optimize ‘EMBED_TEXT_TOKENS’ costs, which two strategies should the team prioritize for their Cortex Search Service?

- A . Option A

- B . Option B

- C . Option C

- D . Option D

- E . Option E

A,C

Explanation:

Option A is correct: Different embedding models have varying costs per million tokens. Switching from ‘snowflake-arctic-embed-l- v2.0’ (0.05 credits/M tokens) to ‘snowflake-arctic-embed-m-v1 .5′ (0.03 credits/M tokens) would directly reduce costs if the smaller model meets quality requirements.

Option C is correct: The parameter controls how often the search service is refreshed. Increasing the ‘TARGET_LAG’ reduces the frequency of embedding jobs, directly decreasing ‘EMBED_TEXT_TOKENS costs over, time.

Option B is incorrect: ‘EMBED_TEXT_TOKENS’ costs are based on the total number of tokens processed. Splitting a 500-token row into smaller chunks still results in processing the same total number of tokens for that row, so it doesn’t reduce the total ‘EMBED_TEXT _ TOKENS’ cost, although it can improve search quality.

Option D is incorrect: costs are based on the volume of tokens, not processing speed. A larger warehouse size does not reduce the number of tokens and is not recommended for cost reduction for EMBED_TEXT_TOKENS’; Snowflake recommends a warehouse size no larger than MEDIUM for Cortex Search services.

Option E is incorrect: The ‘ATTRIBUTES’ field primarily affects filtering capabilities, and the embedding cost is associated with the primary search column, not each individual attribute incurring a separate embedding cost.

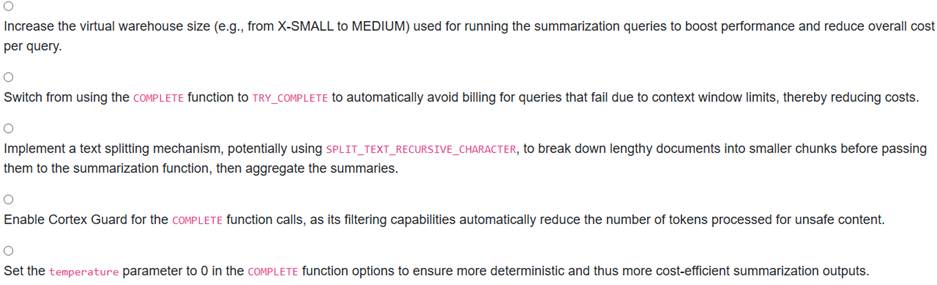

A Snowflake team observes consistently high token costs from ‘SNOWFLAKE.ACCOUNT_USAGE.CORTEX_FUNCTIONS_QUERY_USAGE_HISTORY’ for a summarization task using the ‘mistral- large2’ model. The task involves summarizing legal documents, which often exceed the context window of common LLMs. To optimize these token-based costs, which strategy should the team prioritize?

- A . Option A

- B . Option B

- C . Option C

- D . Option D

- E . Option E

C

Explanation:

Option C is correct. For summarization of lengthy documents, exceeding the context window or using large inputs significantly increases token consumption. Text splitting, for example using, can break documents into smaller, more manageable chunks. This reduces the number of input tokens per LLM call, directly leading to cost optimization, and is recommended for best search results and LLM response quality with Cortex Search.

Option A is incorrect because for Cortex AISQL functions, Snowflake recommends using a smaller warehouse (no larger than MEDIUM) as larger warehouses do not increase performance but can result in unnecessary costs associated with keeping the warehouse active. The compute cost for Cortex LLM functions is based on tokens processed, not warehouse size performance.

Option B is incorrect because ‘TRY COMPLETE only prevents costs for ‘failed’ operations by returning NULL instead of an error. It does not optimize the token consumption of ‘successful’ summarization tasks.

Option D is incorrect; Cortex Guard processes additional tokens for its filtering, thus ‘increasing’ token consumption, not reducing it.

Option E is incorrect because setting ‘temperature’ to 0 makes the output more deterministic, which might improve consistency but does not directly reduce the number of input or output tokens processed for a summarization task.

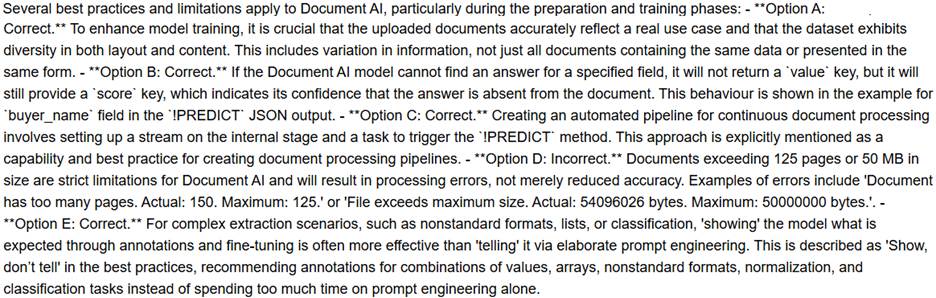

A Gen AI specialist is preparing to upload a large volume of diverse documents to an internal stage for Document AI processing. The objective is to extract detailed information, including lists of items and potentially classifying document types, and then automate this process.

Which of the following statements represent ‘best practices or important considerations/limitations’ when preparing documents and setting up the Document AI workflow in Snowflake? (Select ALL that apply.)

- A . To improve model training, documents uploaded should represent a real use case, and the dataset should consist of diverse documents in terms of both layout and data.

- B . If the Document AI model does not find an answer for a specific field, the ‘!PREDICT method will omit the ‘value’ key but will still return a ‘score’ key to indicate confidence that the answer is not present.

- C . For continuous processing of new documents, it is best practice to create a stream on the internal stage and a task to automate the ‘!PREDICT method execution.

- D . Documents with a page count exceeding 125 pages or a file size greater than 50 MB will be processed, but with a potential reduction in extraction accuracy.

- E . When defining data values for extraction, especially for nonstandard formats or combinations of values, fine-tuning the model with annotations is generally more effective than relying solely on complex prompt engineering.

A,B,C,E

Explanation:

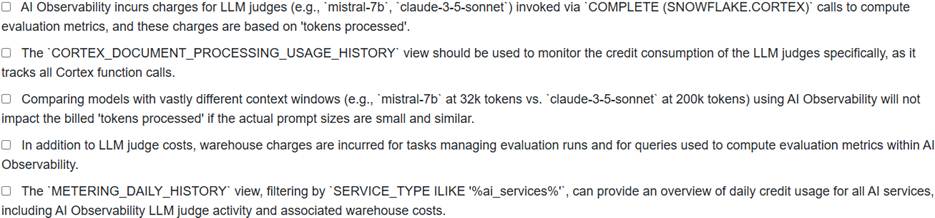

A financial institution is deploying a sentiment analysis application that uses Snowflake Cortex ‘SENTIMENT’ and ‘COMPLETE’ functions, with different LLMs, for processing customer feedback. They are using AI Observability (Public Preview) to compare the cost- efficiency of using ‘mistral-7b’ versus ‘claude-3-5-sonnet’ as LLM judges for evaluation metrics, and also tracking the overall cost of their AI Observability usage.

Which statements accurately reflect the cost implications and monitoring tools for this scenario?

- A . Option A

- B . Option B

- C . Option C

- D . Option D

- E . Option E

A,D,E

Explanation:

Option A is correct because AI Observability utilizes LLM judges (such as ‘mistral-7b’ or ‘claude-3-5-sonnet’) through ‘COMPLETE (SNOWFLAKE.CORTEX)’ function calls to compute evaluation metrics, and these calls incur charges based on the ‘tokens processed’.

Option D is correct as, beyond LLM judge costs, AI Observability also incurs warehouse charges for managing evaluation runs and for queries that compute evaluation metrics.

Option E is correct because the view, with a filter for ‘SERVICE _ TYPE ILIKE, provides a comprehensive daily credit usage report for all AI services, which would include AI Observability’s components.

Option B is incorrect; the view is specifically for Document AI processing functions like ‘!PREDICT and ‘AI_EXTRACT, not for general LLM judge usage in AI Observability. The view is more appropriate for tracking individual Cortex function calls.

Option C is incorrect because while prompt sizes might be similar, the pricing for different LLMs (e.g., ‘mistral-7b’ at 0.12 credits per million tokens vs. ‘claude-3-5-sonnet’ at 2.55 credits per million tokens for AI Complete) will still result in different billed amounts due to varying per-token costs, even if the number of tokens is the same.

A data analyst is using Snowflake Copilot in Snowsight to generate SQL queries for a new dataset containing customer PII.

Which of the following statements accurately describes how Snowflake Copilot operates with respect to data access, governance, and model interaction?

- A . Snowflake Copilot directly accesses and processes the raw data within customer tables to understand its content and generate SQL.

- B . Snowflake Copilot is powered by a fine-tuned model that runs securely inside Snowflake Cortex, leveraging only database/schema/table/column names and data types, ensuring data remains within Snowflake’s governance boundary and respects RBAC.

- C . To protect sensitive information, Snowflake Copilot transmits sampled PII data to an external LLM for schema understanding before generating SQL.

- D . Snowflake Copilot requires explicit column-level grants for direct data access, similar to how a human analyst would query specific data points.

- E . While Snowflake Copilot generates SQL based on metadata, the generated SQL queries are executed in an isolated environment that does not respect existing Snowflake RBAC policies.

B

Explanation:

Snowflake Copilot is an LLM-powered assistant that is powered by a model fine-tuned by Snowflake, running securely inside Snowflake Cortex. It ensures that your enterprise data and metadata always stay securely inside Snowflake and fully respects RBAC. Crucially, Snowflake Copilot does not have access to the data inside your tables; it generates responses based on the names of your databases, schemas, tables, and columns, and their data types.

Options A and C are incorrect because Copilot does not directly access or transmit customer data.

Option D is incorrect as it implies direct data access, which Copilot does not perform.

Option E is incorrect because Copilot fully integrates with Snowflake’s RBAC policies.