Practice Free GES-C01 Exam Online Questions

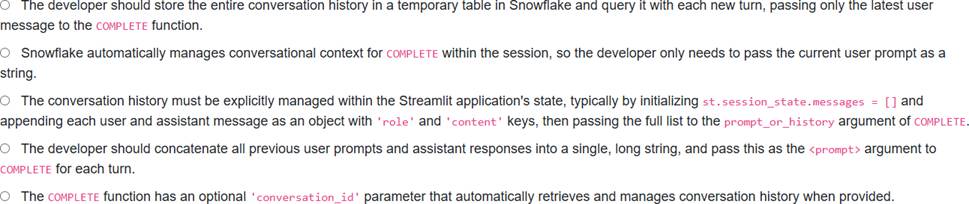

A data application developer is tasked with building a multi-turn conversational AI application using Streamlit in Snowflake (SiS) that leverages the COMPLETE (SNOWFLAKE. CORTEX) LLM function.

To ensure the conversation flows naturally and the LLM maintains context from previous interactions, which of the following is the most appropriate method for handling and passing the conversation history?

- A . Option A

- B . Option B

- C . Option C

- D . Option D

- E . Option E

A Gen AI Specialist is developing a conversational analytics application using Cortex Analyst, aiming to provide a seamless multi-turn conversation experience for business users querying structured data. The team observes that follow-up questions are sometimes misinterpreted, especially when the conversation history is long.

Which of the following statements accurately describe how Cortex Analyst handles multi-turn conversations and key considerations for optimizing this functionality?

- A . Cortex Analyst simply passes the entire conversation history to all subsequent LLM calls, and optimizing this requires manually truncating the array in messages the REST API request.

- B . Cortex Analyst incorporates an additional LLM summarization agent before its original workflow to rewrite current-turn questions based on conversation history, with Llama 3.1 70B being a recommended model for this task due to its performance in evaluating summarization quality.

- C . Multi-turn conversation in Cortex Analyst is primarily handled by the CORTEX_ANALYST_MODEL AZURE_OPENAI parameter, which, when enabled, allows Azure OpenAl models to manage conversational context more effectively.

- D . To address misinterpretation in long conversations, the max_tokens parameter for the Cortex Analyst REST API should be significantly increased to ensure the LLM receives the complete historical context without truncation.

- E . When a user shifts intent frequently in a multi-turn conversation, Cortex Analyst automatically resets the conversation history to prevent misinterpretations and improve accuracy.

B

Explanation:

Cortex Analyst supports multi-turn conversations for data-related questions by incorporating an additional LLM summarization agent. This agent processes the conversation history and rewrites the current-turn question to include relevant context from previous turns, thereby providing a more coherent and accurate query for subsequent processing. This approach avoids passing arbitrarily long conversation histories to every LLM agent, which would lead to longer inference times and non-determinism. ‘Llama 3.1 70B’ has been identified as a sufficient model for this summarization task, achieving high accuracy in rewriting questions.

Option A is incorrect because Cortex Analyst specifically uses a summarization agent to avoid simply passing the entire, potentially long, conversation history to all LLM calls.

Option C is incorrect. The parameter controls the option to use Azure OpenAl models with Cortex Analyst (a legacy path that is discouraged), but it does not describe the mechanism for handling multi-turn conversational context.

Option D is incorrect. While ‘max_tokenS influences the length of LLM outputs, the strategy for handling long conversation history in Cortex Analyst is to use a summarization agent to create a concise, relevant context, not simply to increase the token limit to send all historical data. Increasing ‘max_tokens’ for entire conversation histories would lead to higher costs and potentially longer latencies.

Option E is incorrect. The documentation suggests that if a conversation becomes too long or the user’s intent shifts frequently, users *might need to reset* the conversation, but it does not state that Cortex Analyst *automatically* performs this reset.

A financial institution is fine-tuning a llama3.1-70b model within Snowflake Cortex using sensitive internal financial reports to improve sentiment analysis on earnings call transcripts. They need to understand the implications for data privacy, model ownership, and how this fine-tuned model can be managed and shared.

Which of the following statements are true regarding this process?

- A . The financial reports used for fine-tuning the llama3.1-7θb model are securely isolated and are not used by Snowflake to train or re-train models for other customers.

- B . The resulting fine-tuned model (e.g., my_sentiment_model) is the exclusive property of the financial institution and cannot be accessed or used by any other Snowflake customer.

- C . The fine-tuned model, being of type CORTEX_FINETUNED, can be shared with other Snowflake accounts using secure data sharing capabilities.

- D . Fine-tuned LLMs built with Cortex Fine-tuning are fully managed through the Snowflake Model Registry API, allowing for programmatic deployment, version control, and comprehensive lifecycle management.

- E . The fine-tuning process requires the explicit provisioning and management of a Snowpark-optimized warehouse with GPU resources by the institution.

A,B,C

Explanation:

Option A is correct. Snowflake’s privacy principles state that your Usage and Customer Data (including inputs and outputs for fine- tuning) are NOT used to train, re-train, or fine-tune Models made available to others.

Option B is correct. Fine-tuned models built using your data can only be used by you, ensuring exclusivity.

Option C is correct. Models generated with Cortex Fine-tuning (specifically of the type) can be shared using Data Sharing.

Option D is incorrect. While Cortex Fine-Tuned LLMs appear in the Model Registry’s Snowsight UI, they are *not* managed by the Model Registry API.

Option E is incorrect. Cortex Fine-tuning is described as a ‘fully managed service’ within Snowflake, which abstracts away much of the underlying infrastructure management like GPU resources, although a warehouse is selected for the job. Explicit provisioning and management of compute pools with GPUs is more characteristic of Snowpark Container Services for custom models.

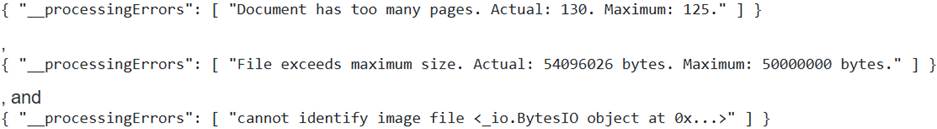

A data processing team is using Snowflake Document AI to extract data from incoming supplier invoices. They observe that many documents are failing to process, and successful extractions are taking longer than expected, leading to increased costs.

Upon investigation, they find error messages such as

. Additionally, their ‘X-LARGE virtual warehouse is constantly active, contributing to higher-than-anticipated bills.

Which two of the following actions are essential steps to troubleshoot and address the root causes of these processing errors and optimize their Document AI pipeline?

- A . Scale down the virtual warehouse to ‘X-SMALC or ‘SMALL’ size, as larger warehouses do not increase Document AI query processing speed and incur unnecessary costs.

- B . Implement a pre-processing step to split documents exceeding 125 pages or 50 MB into smaller, compliant files before loading to the stage.

- C . Redefine extraction questions to be more generic and encompassing, reducing the number of distinct questions needed per document.

- D . Configure the internal stage used for storing invoices with ‘ENCRYPTION = (TYPE = ‘SNOWFLAKE_SSE’Y.

- E . Increase the ‘max_tokenS parameter within the ‘ !PREDICT’ function options to accommodate longer document responses from the model.

B,D

Explanation:

The error messages ‘Document has too many pages. Actual: 130. Maximum: 125.’ and File exceeds maximum size. Actual: 54096026 bytes. Maximum: 50000000 bytes.’ directly indicate that the documents do not meet Document AI’s input requirements, which specify a maximum of 125 pages and 50 MB file size. Therefore, implementing a pre-processing step to split or resize these documents is an essential solution (Option B). The error ‘cannot identify image file <_io.Bytesl0 object at Ox…>‘ is a known issue that occurs when an internal stage used for Document AI is not configured with ‘SNOWFLAKE_SSE encryption. Correctly configuring the stage with this encryption type is crucial for resolving this processing error (Option D).

Option A, while addressing cost optimization, is not a root cause of the ‘processing errors’ themselves, although it is a best practice for cost governance as larger warehouses do not increase Document AI query processing speed.

Option C is incorrect; best practices for question optimization suggest being specific, not generic.

Option E is incorrect as ‘max_tokenS relates to the length of the model’s output, not the input document’s size or page limits.

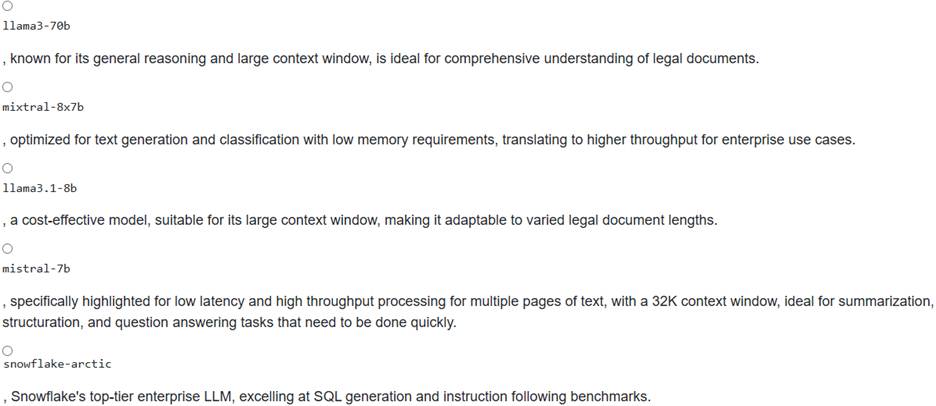

A data science team is developing an internal LLM to classify legal documents. They previously used a general-purpose LLM, but found its performance for their specific legal domain to be inconsistent, leading to high error rates and increased manual review. They decide to fine-tune a model using Snowflake Cortex Fine-tuning to improve accuracy and reduce latency for real-time document classification.

Which base model, among those available for fine-tuning via SNOWFLAKE .CORTEX.FINETUNE, is explicitly noted for its low latency and high throughput processing, making it a strong candidate for this use case, especially for multi-page text classification?

- A . Option A

- B . Option B

- C . Option C

- D . Option D

- E . Option E

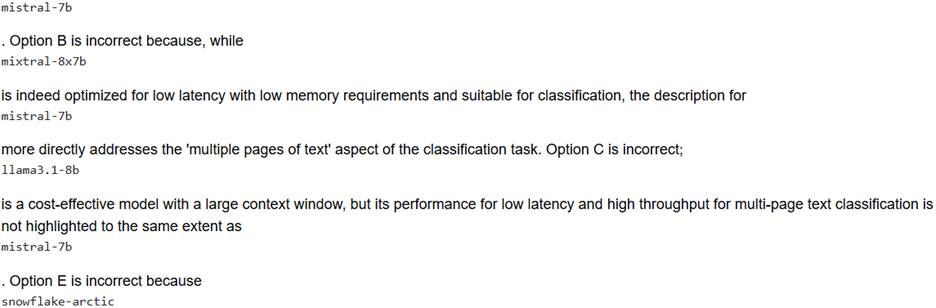

D

Explanation:

Option D is correct. The sources explicitly state that mistral-7b is ‘ideal for your simplest summarization, structuration, and question answering tasks that need to be done quickly. It offers low latency and high throughput processing for multiple pages of text with its 32K context window’. This description directly aligns with the scenario’s requirement for improved accuracy and reduced latency for real-time document classification, particularly for multi-page legal documents.

Option A is incorrect because while

![]()

is available for fine-tuning and suitable for content creation and chat applications, it is not specifically noted for low latency and high throughput processing for multi-page text classification in the same way as

is Snowflake’s top-tier enterprise LLM excelling at SQL generation, coding, and instruction following, but it is not listed as a base model available for fine-tuning with SNOWF LAKE .CORTEX.FINETUNE

.

A financial services company uses Snowflake Cortex’s AI_COMPLETE for sentiment analysis on customer call transcripts, which contain personally identifiable information (PII). They also fine-tune a llama3.1-70b model with proprietary financial data.

Which of the following statements accurately describe Snowflake’s Gen AI principles regarding data privacy, model usage, and governance in this scenario?

- A . Customer Data (inputs and outputs) for AI_COMPLETE, including PII, are guaranteed not to be available to other customers or used to train models made available to others.

- B . The fine-tuned llama3.1-70b model, including the proprietary training data used, is exclusively owned by the financial institution and is not shared with other Snowflake customers.

- C . Snowflake’s metadata fields, such as table and column names, should not contain personal, sensitive, or export-controlled data when using Snowflake AI services, to maintain data governance.

- D . Enabling Cortex Guard for AI_COMPLETE automatically anonymizes all PII within the prompt before it reaches the LLM, regardless of the model chosen, to ensure privacy.

- E . When using Cortex Analyst with Snowflake-hosted LLMs, metadata and prompts are transmitted outside Snowflake’s governance boundary for processing, incurring additional cross-cloud data transfer costs.

A,B,C

Explanation:

Option A is correct because Snowflake’s trust and safety principles explicitly state that Usage and Customer Data (inputs and outputs of Snowflake AI Features) are NOT available to other customers and are NOT used to train, re-train, or fine-tune Models made available to others.

Option B is correct because fine-tuned models built using your data can only be used by you, ensuring exclusivity.

Option C is correct as customers should ensure no personal, sensitive, or regulated data is entered as metadata when using the Snowflake service.

Option D is incorrect because Cortex Guard filters unsafe and harmful responses from the LLM; it does not automatically anonymize PII in the prompt before it reaches the LLM.

Option E is incorrect because when Cortex Analyst is powered by Snowflake-hosted LLMs, data (including metadata or prompts) stays within Snowflake’s governance boundary.

A new ML Engineer, ‘data_scientist_role’, has been assigned to a project involving custom machine learning models in Snowflake. They need to gain the necessary permissions to perform the following actions related to Snowflake Model Registry and Snowpark Container Services: 1. Log a custom model into a specified schem

a. 2. Deploy that model to an existing Snowpark Container Service compute pool. 3. Call the deployed model for inference using SQL.

Which of the following SQL commands grant the ‘minimal’ required privileges to the for these actions, assuming the compute pool and image repository already exist and are appropriately configured?

A)

![]()

B)

![]()

C)

![]()

D)

![]()

E)

![]()

- A . Option A

- B . Option B

- C . Option C

- D . Option D

- E . Option E

A,D

Explanation:

Option A is correct because the ‘CREATE MODEL’ privilege on the target schema is required to log a new model (which creates a model object) in the Snowflake Model Registry.

Option D is correct because deploying a model to a Snowpark Container Service creates a service object within a schema, which requires the ‘CREATE SERVICE privilege on that schema. The role would also implicitly need ‘USAGE on the specified compute pool.

Option B is incorrect. While ‘USAGE ON DATABASE is generally needed for accessing objects within a database, it’s a broader prerequisite and not specifically a minimal privilege for the direct model registry actions of logging, deploying, and calling the model.

Option C is incorrect because ‘CREATE COMPUTE POOL’ is for creating the compute pool itself, not for deploying a service ‘to’ an existing one. The role would need ‘USAGE’ on the existing compute pool, but not the right to create it from scratch for this scenario.

Option E is incorrect because ‘READ ON IMAGE REPOSITORY is required for the ‘service’ to pull the image from the repository, but the question asks for privileges for the to perform the ‘actions’ of logging, deploying, and calling. While the role might need to manage or verify the image, this isn’t a direct privilege for the user’s interaction with the deployed model in the same way ‘CREATE MODEL’ or ‘CREATE SERVICE are.

A data scientist is designing a real-time similarity search feature in Snowflake using product embeddings. They plan to use VECTOR_L2_DISTANCE to find similar products.

Which statement correctly identifies a cost or data type characteristic relevant to this implementation?

- A . The VECTOR_L2_DISTANCE function incurs compute costs based on the square root of the number of output tokens generated.

- B . Storing product embeddings generated by EMBED_TEXT_768 in a VECTOR(INT, 768) column is a valid and efficient data type choice for the embeddings.

- C . Both the EMBED_TEXT_768 function and VECTOR_L2_DISTANCE incur token-based compute costs, but EMBED_TEXT_768 also includes a fixed per-call fee.

- D . The VECTOR_L2_DISTANCE function itself does not incur token-based compute costs, distinguishing it from embedding generation functions.

- E . The maximum dimension supported for a VECTOR data type in Snowflake is 768, aligning with common embedding model outputs.

D

Explanation:

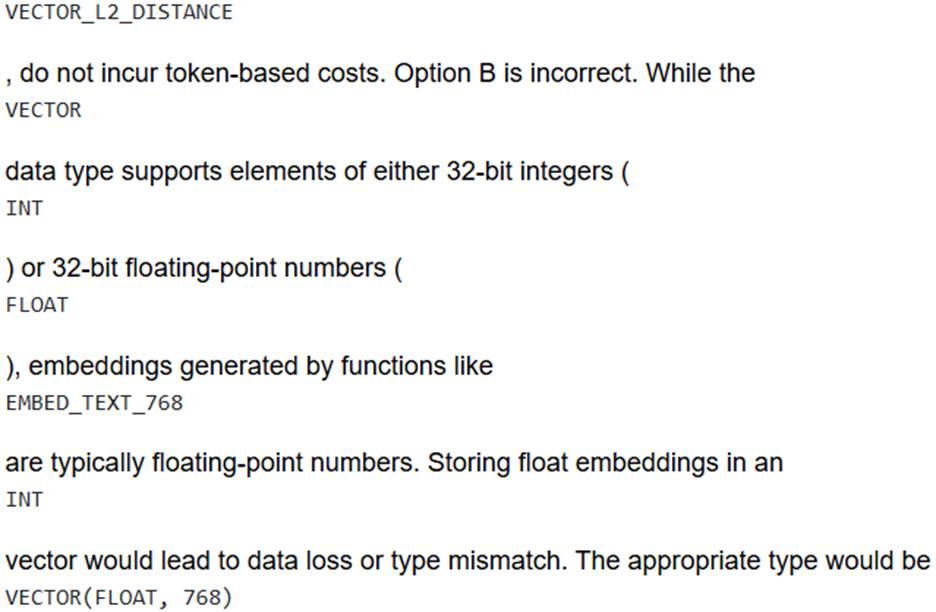

Option A is incorrect because vector similarity functions, including

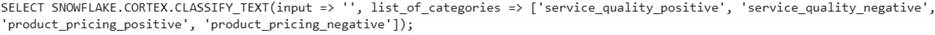

A financial institution uses Snowflake Cortex LLM functions to process customer feedback. They initially used SNOWF LAKE.CORTEX.SENTIMENT for general sentiment analysis. Now, they need to extract specific sentiment categories (e.g., ‘service_quality’, ‘product_pricing’) and the sentiment for each, expecting the output in a structured JSON format for automated downstream processing.

Which AI_COMPLETE configuration best addresses their new requirement while considering cost-efficiency and output reliability?

- A .

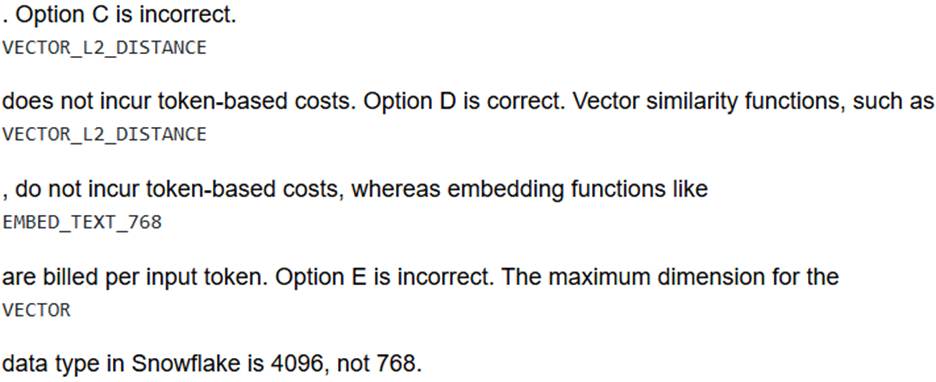

This uses a smaller model and a structured output schema to ensure JSON adherence. - B .

This leverages a more capable model, explicit ‘Respond in JSON’ prompt, and detailed schema with required fields, alongside a recommended temperature of 0 for consistency. - C .

This uses a medium model with high temperature for diverse output, potentially reducing reliability. - D .

This uses a powerful model with Cortex Guard enabled for safety, but without explicitly guiding JSON output for complex tasks. - E .

This leverages the classification function to categorize detailed sentiment, but it does not produce a structured JSON output with multiple sentiment categories for a single input.

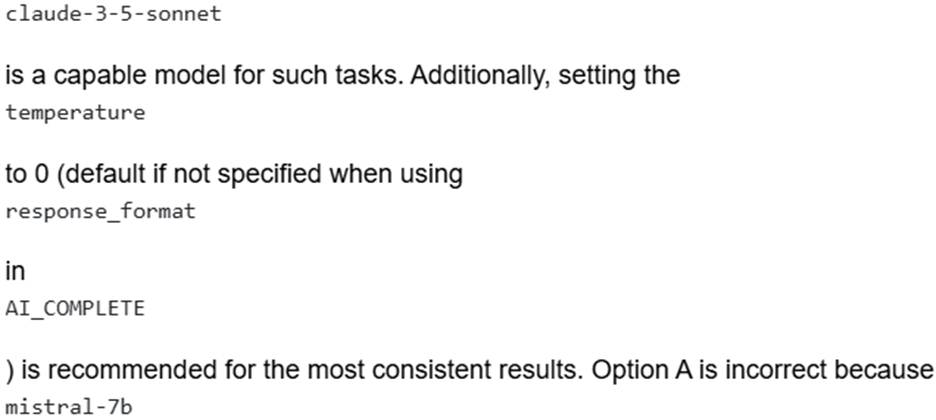

B

Explanation:

Option B is correct. For medium-complexity tasks like extracting specific sentiment categories into a structured format, Snowflake recommends using more powerful models, explicitly prompting the model to ‘Respond in JSON’, providing detailed descriptions for schema fields, and setting fields as ‘required’ to improve accuracy and ensure adherence to the schema.

is a smaller model which might struggle with the accuracy and reliability required for complex structured extraction compared to more powerful models, even with a schema.

Option C is incorrect because a temperature of 1.0 increases randomness, which is detrimental to the reliability and consistency required for structured JSON output and automated processing. The response_format should also be specified in the options argument explicitly for structured output.

Option D is incorrect; while mistral-large2 is a powerful model, relying on guardrails alone does not guarantee structured output or adherence to a specific JSON schema for complex extraction. For complex tasks, explicit prompting and schema details are crucial.

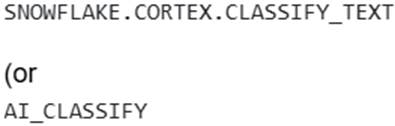

Option E is incorrect because

) returns a single classification label and cannot produce a JSON object with multiple specific sentiment categories and their respective sentiments from a single input text as required by the scenario.

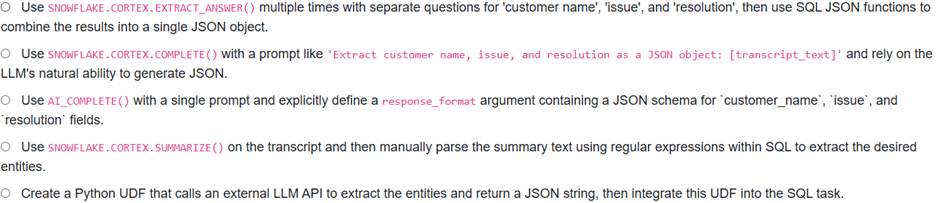

A data engineering team is setting up an automated pipeline in Snowflake to process call center transcripts. These transcripts, once loaded into a raw table, need to be enriched by extracting specific entities like the customer’s name, the primary issue reported, and the proposed resolution. The extracted data must be stored in a structured JSON format in a processed table. The pipeline leverages a SQL task that processes new records from a stream.

Which of the following SQL snippets and approaches, utilizing Snowflake Cortex LLM functions, would most effectively extract this information and guarantee a structured JSON output for each transcript?

- A . Option A

- B . Option B

- C . Option C

- D . Option D

- E . Option E

C

Explanation:

To guarantee a structured JSON output for entity extraction, (the updated version of ‘COMPLETE()’) with the response_format’ argument and a specified JSON schema is the most effective approach. This mechanism enforces that the LLM’s output strictly conforms to the predefined structure, including data types and required fields, significantly reducing the need for post-processing and improving data quality within the pipeline.

Option A requires multiple calls and manual JSON assembly, which is less efficient.

Option B relies on the LLM’s ‘natural ability’ to generate JSON, which might not be consistently structured without explicit ‘response_format’.

Option D uses, which is for generating summaries, not structured entity extraction.

Option E involves external LLM API calls and Python UDFs, which, while possible, is less direct than using native ‘AI_COMPLETE structured outputs within a SQL pipeline in Snowflake Cortex for this specific goal.