Practice Free GES-C01 Exam Online Questions

A Data Engineer has successfully deployed a Document AI model build named ‘expense reports’ to extract ‘total amount’ and approver signature’ from digital expense reports. They observe that sometimes the ‘approver signature’ is not present in a document, or certain table cells are intentionally left blank in other document types processed by Document AI. They also want to automate the ingestion and processing of new expense reports. Regarding the ‘!PREDICT’ method’s JSON output when ‘approver signature’ is missing or a table cell is empty, and the recommended Snowflake features for continuously processing new documents, which statements are true?

- A . If the ‘approver_signature’ is not found, the JSON output for ‘approver_signature’ will contain Tvalue": null, "score": 0.0}’.

- B . When a table cell is empty, the Document AI model will return a ‘score’ key but no ‘value’ key for that cell.

- C . To automate continuous processing, the Data Engineer should create a stream on the internal stage where documents are uploaded, and a task that calls the ‘ !PREDICT method when new data arrives.

- D . If the model does not find an answer, it returns an empty string for ‘value’ and a score indicating its confidence that no answer was found.

- E . Dynamic Tables are the primary recommended feature for continuous document processing with Document AI, replacing the need for streams and tasks.

B,C

Explanation:

Option B is correct because if a table cell is empty or if the Document AI model does not find an answer in the document, it will not return a ‘value’ key. However, it will still return the ‘score’ key, indicating its confidence that the cell is empty or that the answer is not present.

Option C is correct as creating document processing pipelines with Document AI typically involves setting up a stream on a stage to capture new documents and a task that then calls the ‘!PREDICT method to process these new documents automatically.

Option A is incorrect; the ‘value’ key is omitted, not set to ‘null’.

Option D is incorrect because the ‘value’ key is omitted entirely, not set to an empty string.

Option E is incorrect because Snowflake Cortex functions, including Document AI’s ‘!PREDICT method, do not currently support dynamic tables.

A Snowflake account administrator in an Azure East US 2 region needs to enable users to access a new, highly capable LLM, ‘claude-3-5-sonnet’, which is currently only natively available in AWS regions via Snowflake Cortex. The administrator also wants to ensure that only specific, approved LLMs can be used across the organization.

Which configuration steps are necessary for the administrator to achieve these requirements?

- A . Set the account parameter to include ‘claude-3-5-sonnet’, and then set the account parameter to ‘TRUE to allow cross-region inference for all Cortex features.

- B . Grant the ‘SNOWFLAKE.CORTEX_USER database role to the relevant user roles. Set the account parameter to ‘ANY REGION’ or a list including an AWS region where ‘claude-3-5-sonnet’ is natively available. Additionally, configure the ‘CORTEX MODELS ALLOWLIST to explicitly permit ‘claude-3-5-sonnet’ and other desired models.

- C . Since ‘claude-3-5-sonnet’ is an OpenAl model, the administrator must enable the ‘ENABLE_CORTEX ANALYST MODEL AZURE OPENAI’ account parameter, and then the model will automatically be available for cross-region inference without further action.

- D . Create a ‘COMPUTE POOL’ with a ‘GPU NV_S instance family in Azure East US 2, and then deploy a custom PyCaret model of ‘claude-3-5-sonnet’ to this pool through the Snowflake Model Registry.

- E . The ‘CORTEX ENABLED CROSS REGION’ parameter allows access to models in other regions, but access to specific LLMs is controlled solely by individual user privileges granted directly on the model objects, not by an account-level allowlist.

B

Explanation:

Option A is partially correct but incomplete. Setting to ‘TRUE or ‘ANY_REGION’ allows cross-region inference. However, ‘ TRUE’ is not a valid value for ‘CORTEX ENABLED CROSS REGION in the provided sources, it can be a list of regions or ‘ANY_REGION’. The does control which models can be used.

Option B is correct. To call Snowflake Cortex AI functions, the ‘SNOWFLAKE.CORTEX USER database role is required. To access ‘claude-3-5-sonnet’ (which is available via cross-cloud inference) from an Azure region when it’s natively available in an AWS region, the ‘CORTEX ENABLED CROSS REGION’ parameter must be configured to allow it, either by specifying ‘ANY REGION’ or listing the relevant AWS region. Additionally, the parameter is used by administrators to restrict or allow access to specific LLMs within Snowflake. ‘claude-3-5-sonnet’ is a supported model for ‘AI_COMPLETE’ and ‘COMPLETE.

Option C is incorrect. ‘claude-3-5-sonnet’ is an Anthropic model, not an OpenAl GPT model. Furthermore, is a legacy parameter for Cortex Analyst specifically for Azure OpenAl models, and its use is discouraged.

Option D is incorrect. While ‘COMPUTE POOL’ and instance families are used for Snowpark Container Services and Hugging Face models can be deployed this way, this scenario involves directly using a Snowflake-hosted Cortex LLM (‘claude-3-5-sonnet’) rather than deploying a custom external model, and ‘claude-3-5-sonnet’ is not a PyCaret model.

Option E is incorrect. While RBAC applies to model objects, the ‘CORTEX MODELS ALLOWLIST’ is an account-level parameter used by administrators to limit which LLMs can be used, overriding or complementing individual object grants.

A developer is refining a Document AI extraction process using the ‘!PREDICT’ method and is meticulously examining the JSON output for invoices, which include ‘invoice number’, ‘invoice items’, ‘tax amount’, and ‘vendor name’. They also have a detailed internal table of ‘product details’ to be extracted. To ensure optimal data quality and accurate interpretation of the extracted information, which of the following best practices or characteristics of Document AI’s output should the developer consider?

- A . The ‘ocrScore’ provided in the ‘_documentMetadata’ object for each document indicates the model’s confidence in the content of specific extracted values, rather than the overall quality of the optical character recognition process.

- B . When extracting lists of values, such as ‘invoice_items’, the Document AI model returns them as an array in the JSON output, preserving the original order of items as they appear in the document.

- C . For table extraction, such as the extracted values for each column (e.g., ‘tablel litem’, ‘tablel Igross) are ordered consistently with the rows of the original table, facilitating direct joining of columns.

- D . To maximize accuracy when defining data values, questions should be broadly generic (e.g., ‘What is the amount?) to allow the Document AI model to infer the most relevant context, especially for fields like ‘tax_amount’ where multiple numbers might be present.

- E . If the ‘vendor_name’ field cannot be confidently identified in a document, the model will include ‘"vendor_name": [ { "score": O.X, "value": "NOT FOUND" } l’ in the JSON output.

B,C

Explanation:

Option A is incorrect. The ‘ocrScore’ in the ‘_documentMetadata’ field specifies the confidence score for the optical character recognition (OCR) ‘process’ for that document, not the confidence of specific extracted values. The ‘score’ field associated with individual extracted values indicates confidence for that specific value.

Option B is correct. Document AI models can return lists, and the ‘invoice_itemS field is given as an example. The JSON format for ‘invoice_items’ shows an array of objects for multiple items. The order is inherently maintained in such list extractions.

Option C is correct. The sources explicitly state that in table extraction, the values in the JSON output are provided in the same order as the rows in the table, which allows columns to be easily joined. This ensures the structural integrity of the extracted table data.

Option D is incorrect. For question optimization, it is crucial to be specific and precise. The guidelines advise against asking generic questions like ‘What is the date?’ without including more details, especially when multiple similar values might be present, as Document AI is not expected to guess intentions or have extended domain knowledge.

Option E is incorrect. If the Document AI model does not find an answer (such as it does not return a ‘value’ key at all within that field, although it does return the ‘score’ key to indicate its confidence that the answer is not present.

An operations manager is tasked with monitoring the cost and ensuring compliance for a Cortex Analyst deployment that uses the REST API. They are particularly concerned with accurately tracking credit consumption and understanding the implications of enabling external models.

Which of the following statements correctly describe aspects of Cortex Analyst cost and governance?

- A . Credit consumption for Cortex Analyst is primarily based on the number of tokens processed by the underlying LLMs, with more complex natural language questions leading to higher token usage and costs.

- B . The view can be queried to track detailed information about cortex Analyst requests, including generated SQL and any errors.

- C . Enabling Azure OpenAI models via the ‘ENABLE_CORTEX_ANALYST_MODEL_AZURE_OPENAF parameter ensures that all customer data and prompts remain within Snowflake’s governance boundary and fully respect RBAC policies for those models.

- D . Snowflake’s view provides granular usage information for REST API requests to cortex Analyst, including tokens processed per model.

- E . Cortex Analyst credit usage is based on the number of messages processed, at a rate of 67 Credits per 1 ,000 messages, and only successful responses (HTTP 200) are counted.

E

Explanation:

A company wants to ingest and process scanned invoices and digitally-born contracts in Snowflake. They need to extract all text, preserving layout for contracts and just the text content for scanned invoices.

Which AI_PARSE_DOCUMENT modes would be most appropriate for this scenario, and what is the primary purpose of the function itself?

- A . Primary purpose is to generate new text. For contracts, use OCR mode; for invoices, use LAYOUT mode.

- B . Primary purpose is to classify text. For contracts, use LAYOUT mode; for invoices, use OCR mode.

- C . Primary purpose is to extract data and layout. For contracts, use LAYOUT mode; for invoices, use OCR mode.

- D . Primary purpose is to summarize text. For contracts, use OCR mode; for invoices, use LAYOUT mode.

- E . Primary purpose is to translate text. Both document types should use LAYOUT mode.

C

Explanation:

Option C is correct. AI_PARSE_DOCUMENT is a Cortex AI SQL function designed to extract text, data, and layout elements from documents with high fidelity, preserving structure like tables, headers, and reading order. For digitally-born contracts where layout preservation is needed, the mode is appropriate. For scanned invoices where only text content is needed without layout, the OCR mode, which extracts text LAYOUT from scanned documents and does not preserve layout, is suitable.

An organization relies on Snowflake Cortex LLM functions and has established a robust model governance policy using the ‘CORTEX MODELS_ALLOWLIST parameter. A developer is integrating ‘TRY COMPLETE into an application for processing various text inputs.

Which of the following statements are correct regarding ‘TRY COMPLETE and model access controls?

- A . if a If a model specified in ‘TRY_COMPLETE is not allowed by the and no RBAC grant exists for it, the ‘TRY_COMPLETE function will automatically return ‘NULL’ to prevent a hard error.

- B . The ‘TRY_COMPLETE function can return a ‘NULL ‘ value if the underlying LLM experiences a temporary internal processing error during response generation, allowing the query execution to continue without halting.

- C . The parameter primarily governs access for models invoked by their plain names with but ‘TRY COMPLETE can still access models if the user has been granted appropriate RBAC on a fully-qualified model object.

- D . If ‘TRY_COMPLETE fails to perform its operation and returns ‘ NULL ‘, the tokens that would have been processed for that specific call are not billed, ensuring cost efficiency for failed operations.

- E . To provide a stateful conversational experience with ‘TRY_COMPLETE, the function automatically retains context from previous calls within the same session.

B,C,D

Explanation:

Option A is incorrect. While ‘TRY_COMPLETE generally returns ‘NULL’ for operations that cannot be performed, the documentation explicitly states that if a model powering a Cortex function (including ‘TRY COMPLETE’) is not allowed by the allowlist or RBAC, an ‘error message contains information about how to modify the allowlist’. This suggests an actual error is raised rather than ‘NULL’ being returned for access control violations, unlike internal processing errors.

Option B is correct. ‘TRY COMPLETE’ is designed to return ‘NULL’ instead of raising an error when an operation cannot be performed, which includes scenarios where the LLM encounters internal errors during response generation.

Option C is correct. The ‘CORTEX MODELS ALLOWLIST controls which models can be used when referenced by their plain names (e.g., ‘mistral-large2’). However, model-level RBAC, granted through application roles on fully-qualified model objects (e.g., ‘SNOWFLAKE.MODELS."LLAMA3.1-70B"’), provides an independent access path that bypasses the plain-name allowlist check for those specific model objects.

Option D is correct. ‘TRY_COMPLETE does not incur costs for error handling; if the function returns ‘NULL’, no cost is incurred for that specific call.

Option E is incorrect. ‘TRY COMPLETES, like ‘COMPLETE’, does not retain state between calls. To provide a stateful conversational experience, all previous user prompts and model responses must be explicitly passed in the array argument.

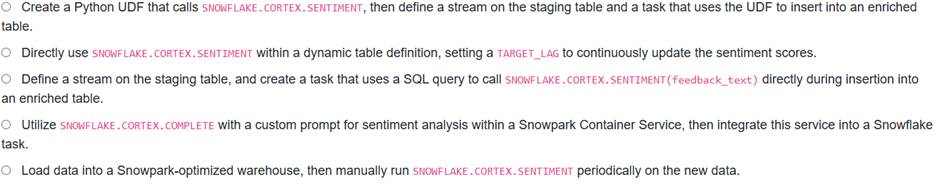

A Gen AI Specialist is tasked with implementing a data pipeline to automatically enrich new customer feedback entries with sentiment scores using Snowflake Cortex functions. The new feedback arrives in a staging table, and the enrichment process must be automated and cost-effective. Given the following pipeline components, which combination of steps is most appropriate for setting up this continuous data augmentation process?

- A . Option A

- B . Option B

- C . Option C

- D . Option D

- E . Option E

C

Explanation:

Option C is the most direct and efficient approach for continuously augmenting data with sentiment scores in a Snowflake pipeline. is a task-specific AI function designed for this purpose, returning an overall sentiment score for English-language text. SNOWF LAKE .CORTEX.SENTIMENT Integrating it directly into a task that monitors a stream allows for automated, incremental processing of new data as it arrives in the stage. The source explicitly mentions using Cortex functions in data pipelines via the SQL interface.

Option A is plausible, but calling SENTIMENT directly in SQL within a task (Option C) is simpler and avoids the overhead of a Python UDF if the function is directly available in SQL, which it is.

Option B, using a dynamic table, is not supported for Snowflake Cortex functions.

Option D, while powerful for custom LLMs, is an over-engineered solution and introduces more complexity (SPCS setup, custom service) than necessary for a direct sentiment function.

Option E describes a manual, non- continuous process, which contradicts the requirement for an automated pipeline.

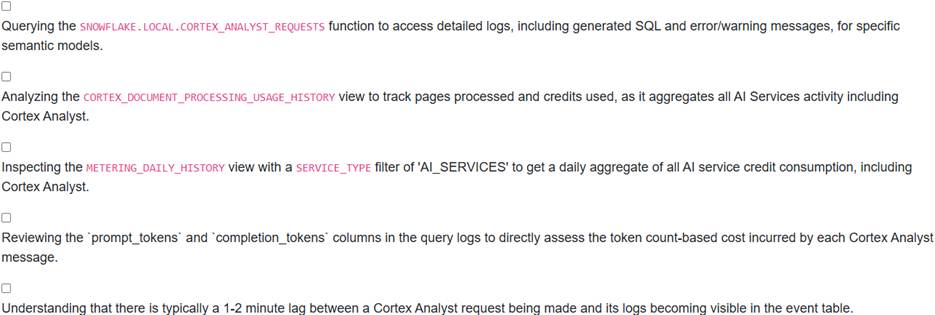

A Snowflake administrator is tasked with monitoring the efficiency and cost-effectiveness of their Cortex Analyst deployments. They need to identify if certain semantic models are generating a high volume of failed or expensive queries.

Which of the following approaches or statements are crucial for effectively monitoring and identifying issues with Cortex Analyst usage and associated costs?

- A . Option A

- B . Option B

- C . Option C

- D . Option D

- E . Option E

A,C,E

Explanation:

Option A is correct because Cortex Analyst logs requests to an event table, and the function can be used to query these logs, which include generated SQL and errors/warnings, helping identify issues.

Option C is correct as the ‘METERIN_ DAILY_HISTORY view tracks daily credit usage for services, including ‘AI_SERVICES’, which encompasses Cortex Analyst.

Option E is correct as there is a reported 1-2 minute lag for Cortex Analyst requests to become visible in the event table logs.

Option B is incorrect because ‘CORTEX_DOCUMENT_PROCESSING_USAGE_HISTORY specifically displays Document AI processing function activity, not all AI Services or Cortex Analyst.

Option D is incorrect because Cortex Analyst costs are based on messages, not tokens, so ‘prompt_tokens’ and ‘completion_tokens’ would not be relevant for direct cost assessment in this context.

An AI developer is building a Snowflake data pipeline to prepare unstructured data for a RAG application. The pipeline involves extracting text, splitting it into chunks, generating embeddings, and then indexing for Cortex Search. Considering the role of helper functions like SNOWFLAKE.CORTEX.SPLIT_TEXT_RECURSIVE_CHARACTER, which of the following statements accurately describes its typical operational placement and interaction within this Gen AI pipeline?

- A . It is typically applied after an embedding function (e.g., SNOWFLAKE.CORTEX.EMBED_TEXT_768) to break down large vector embeddings into smaller, more granular vector components for precise similarity search.

- B . Its output, consisting of smaller text chunks, serves as the direct input for text embedding functions that then convert these chunks into vector representations for semantic indexing.

- C . It replaces the need for SNOWFLAKE.CORTEX.COUNT_TOKENS by implicitly returning the token count of each chunk along with the chunked text, thereby optimizing resource usage.

- D . The function’s recursive nature enables it to automatically detect and correct factual inconsistencies or ‘hallucinations’ present in the original large text documents before they are embedded.

- E . It is a post-processing step for LLM-generated responses, used to break down long answers into digestible paragraphs for user display in chat interfaces.

B

Explanation:

Option B is correct.

![]()

is a helper function used to divide large text documents into smaller chunks. These smaller text chunks are then processed by embedding functions, such as

, to create vector embeddings that are subsequently used for indexing and semantic search in RAG applications.

Option A is incorrect because text splitting (chunking) happens *before* embedding generation, not after, as it prepares the raw text for vectorization.

Option C is incorrect; COUNT_TOKENS is a separate helper function specifically designed to return the token count of input text.

SPLIT_TEXT_RECURSIVE_CHARACTER

does not implicitly provide token counts for its output chunks.

Option D is incorrect; the function’s purpose is text splitting, not hallucination detection or correction, which pertains to LLM output quality.

Option E is incorrect; while LLM responses might be formatted, the primary role of SPLIT_TEXT_RECURSIVE_CHARACTER is in preparing input documents for RAG, not post-processing LLM outputs for display.

An enterprise is deploying a new RAG application using Snowflake Cortex Search on a large dataset of customer support tickets. The operations team is concerned about managing compute costs and ensuring efficient index refreshes for the Cortex Search Service, which needs to be updated hourly.

Which of the following considerations and configurations are relevant for optimizing cost and performance of the Cortex Search Service in this scenario?

- A . The CREATE CORTEX SEARCH SERVICE command requires specifying a dedicated virtual warehouse for materializing search results during initial creation and subsequent refreshes.

- B . For embedding text, selecting a model like

cost per million tokens, assuming English-only content is sufficient. - C . For optimal performance and cost efficiency, Snowflake recommends using a dedicated warehouse of size no larger than MEDIUM for each Cortex Search Service.

- D . CHANGE_TRACKING must be explicitly enabled on the source table for the Cortex Search Service to leverage incremental refreshes and avoid full re-indexing, thus optimizing refresh costs.

- E . The primary cost driver for Cortex Search is the number of search queries executed against the service, with the volume of indexed data (GB/month) having a minimal impact on overall billing.

A,B,C,D

Explanation:

Option A is correct because a Cortex Search Service requires a virtual warehouse to refresh the service, which runs queries against base objects when they are initialized and refreshed, incurring compute costs.

Option B is correct because the cost of embedding models varies .

For example, ‘snowflake-arctic-embed-m-v1.5 costs 0.03 credits per million tokens, while ‘voyage-multilingual-2 costs 0.07 credits per million tokens. Choosing a more cost-effective model like ‘snowflake-arctic-embed-m-v1.5’ for English-only data can reduce token costs.

Option C is correct because Snowflake recommends using a dedicated warehouse of size no larger than MEDIUM for each Cortex Search Service to achieve optimal performance.

Option D is correct because change tracking is required for the Cortex Search Service to be able to detect and process updates to the base table, enabling incremental refreshes that are more efficient than full re-indexing.

Option E is incorrect because Cortex Search Services incur costs based on virtual warehouse compute for refreshes, ‘EMBED_TEXT_TOKENS’ cost per input token, and a charge of 6.3 Credits per GB/mo of indexed data. The volume of indexed data has a significant impact, not minimal.