Practice Free GES-C01 Exam Online Questions

A business is implementing Snowflake Document AI to process a high volume of scanned legal contracts. The contracts are in PDF format, average 75 pages each, and some are up to 45 MB in size. They need to extract specific clauses, contract dates, and signatory names, including any handwritten elements. The business requires the extracted data to be available for continuous processing within an automated pipeline.

Which of the following considerations are critical for successful implementation of this Document AI solution?

- A . Document AI automatically detects page orientation and supports PDF as a file format. The maximum page limit of 125 pages per document and size limit of 50 MB are well within the specifications for these contracts.

- B . To train the Document AI model to extract handwritten text and signatures accurately, particularly if readability is low, the business should utilize the ‘ocrScore’ from the prediction results to flag documents for image quality review or enhancement.

- C . The primary method for extracting information is the !PREDICT method. This method is designed exclusively for zero-shot extraction, so fine-tuning is not an option for improving results on specific document types like these contracts.

- D . For continuous processing of new documents, the business needs to create a stream on a stage and a task to automate the execution of the extracting query. Document AI supports processing up to 500 documents in a single query.

- E . When extracting lists of values, such as multiple contract clauses, the Document AI model may initially struggle with complex extractions. It’s best practice to train the model with annotations and corrections over a representative dataset to ensure accuracy and correct ordering.

A,B,E

Explanation:

Option A is correct. Document AI supports the PDF, PNG, DOCX, EML, JPEG, JPG, HTM, HTML, TEXT, TXT, TIF, and TIFF formats. Documents must be no more than 125 pages long and 50 MB or less in size. The contracts, averaging 75 pages and at most 45 MB, are within these limits. Document AI also automatically detects page orientation.
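Before loading a batch of contracts, a pipeline could pre-check each file against the limits listed above. The following is a minimal local sketch, not a Snowflake API. It assumes the page count and byte size are already known and that "50 MB" means 50 × 1024 × 1024 bytes; the file name is hypothetical.

```python
from pathlib import Path

# Limits as stated in the explanation above.
MAX_PAGES = 125
MAX_BYTES = 50 * 1024 * 1024  # assumption: 1 MB = 1024 * 1024 bytes
SUPPORTED_EXTENSIONS = {
    ".pdf", ".png", ".docx", ".eml", ".jpeg", ".jpg", ".htm", ".html",
    ".text", ".txt", ".tif", ".tiff",
}


def check_document(filename: str, pages: int, size_bytes: int) -> list[str]:
    """Return a list of problems; an empty list means the file is within limits."""
    problems = []
    ext = Path(filename).suffix.lower()
    if ext not in SUPPORTED_EXTENSIONS:
        problems.append(f"unsupported format: {ext or '(none)'}")
    if pages > MAX_PAGES:
        problems.append(f"too many pages: {pages} > {MAX_PAGES}")
    if size_bytes > MAX_BYTES:
        problems.append(f"too large: {size_bytes} bytes > {MAX_BYTES}")
    return problems


# The scenario's largest contract: 75 pages, 45 MB (hypothetical file name).
print(check_document("contract_0001.pdf", pages=75, size_bytes=45 * 1024 * 1024))
# → []
```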

Option B is correct. The accuracy of extracting handwritten information depends on text recognition. If readability is low, it helps to review the document to improve image quality, or to use the OCR score (provided in the ‘documentMetadata.ocrScore’ field of the JSON output) to flag documents for additional review.
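Flagging low-readability documents from the prediction output can be sketched as follows. This is an illustration under stated assumptions: the metadata key follows the field name given above (pass a different `metadata_key` if your output names it differently), and the 0.8 threshold is a hypothetical cut-off to tune on your own documents.

```python
import json

OCR_THRESHOLD = 0.8  # hypothetical cut-off, not a Snowflake default


def needs_review(prediction_json: str, metadata_key: str = "documentMetadata",
                 threshold: float = OCR_THRESHOLD) -> bool:
    """Flag a prediction whose OCR score is missing or below the threshold."""
    result = json.loads(prediction_json)
    score = result.get(metadata_key, {}).get("ocrScore")
    return score is None or score < threshold


# Hypothetical prediction output for one scanned contract.
sample = '{"documentMetadata": {"ocrScore": 0.62}}'
print(needs_review(sample))  # → True
```

Treating a missing score as "needs review" is a deliberately conservative choice: a document that cannot be scored is routed to a human rather than passed through silently.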

Option C is incorrect. Document AI provides both zero-shot extraction and fine-tuning capabilities: you can fine-tune the Arctic-TILT model to improve results on documents specific to your use case. The !PREDICT method is used for extraction once the model is ready, whether that model is zero-shot or fine-tuned.

Option D is incorrect. While creating a stream on a stage and a task is the correct approach for continuous processing, Document AI has a limitation for the number of documents processed in one query, supporting a maximum of 1000 documents, not 500.
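When a backlog exceeds the per-query limit, the file list can be split into chunks and each chunk processed by its own extraction query. A minimal sketch of the chunking step, assuming the 1000-document limit stated above; the paths are hypothetical.

```python
from typing import Iterator, Sequence

MAX_DOCS_PER_QUERY = 1000  # per-query limit stated in the explanation


def batches(paths: Sequence[str], size: int = MAX_DOCS_PER_QUERY) -> Iterator[list[str]]:
    """Yield consecutive slices of at most `size` document paths."""
    if size < 1:
        raise ValueError("size must be positive")
    for start in range(0, len(paths), size):
        yield list(paths[start:start + size])


docs = [f"contracts/c{i:05d}.pdf" for i in range(2500)]  # hypothetical backlog
print([len(b) for b in batches(docs)])  # → [1000, 1000, 500]
```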

Option E is correct. The Document AI model can return lists, and for complex extractions like lists of line items (or in this case, clauses), the foundational model may not immediately understand the intent. Training with annotations and corrections is crucial to achieve the desired output.

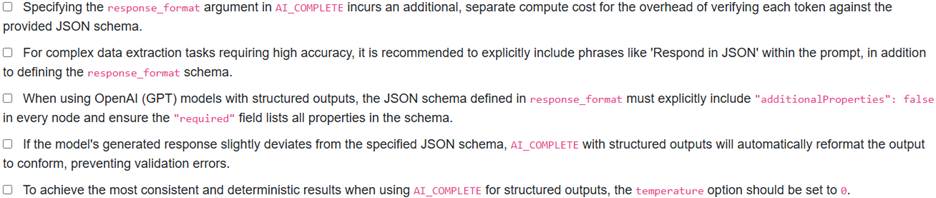

A data engineering team is building an automated pipeline in Snowflake to process customer reviews. They need to use AI_COMPLETE to extract specific details like product, sentiment, and issue type, and store them in a strictly defined JSON format for seamless downstream integration. They aim to maximize the accuracy of the structured output and manage potential model limitations.

Which statements accurately reflect the best practices and characteristics when using AI_COMPLETE with structured outputs for this scenario?

- A . Option A

- B . Option B

- C . Option C

- D . Option D

- E . Option E

B,C,E

Explanation:

Option A is incorrect because Structured Outputs do not incur additional compute cost for verifying each AI_COMPLETE token against the supplied JSON schema, although the number of tokens processed (and therefore billed) can increase with schema complexity.

Option B is correct because for complex reasoning tasks, it is recommended to use the most powerful models and explicitly add ‘Respond in JSON’ to the prompt to optimize accuracy.

Option C is correct because OpenAI (GPT) models impose specific schema requirements: ‘additionalProperties’ must be set to ‘false’ in every node, and the ‘required’ field must include the names of every property in the schema.
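Checking a schema against these two rules before sending it to AI_COMPLETE can catch mistakes early. The sketch below is a local pre-check written for illustration, not Snowflake's validator; it applies the rules to every object node it finds, and the review fields mirror the scenario (product, sentiment, issue type).

```python
from typing import Any


def openai_schema_problems(node: Any, path: str = "$") -> list[str]:
    """Report object nodes that lack additionalProperties: false or whose
    `required` list does not name every property."""
    problems: list[str] = []
    if isinstance(node, dict):
        if node.get("type") == "object":
            props = node.get("properties", {})
            if node.get("additionalProperties") is not False:
                problems.append(f"{path}: additionalProperties must be false")
            missing = set(props) - set(node.get("required", []))
            if missing:
                problems.append(f"{path}: required is missing {sorted(missing)}")
        # Recurse into nested schemas (properties, items, and so on).
        for key, child in node.items():
            problems.extend(openai_schema_problems(child, f"{path}.{key}"))
    elif isinstance(node, list):
        for i, child in enumerate(node):
            problems.extend(openai_schema_problems(child, f"{path}[{i}]"))
    return problems


review_schema = {
    "type": "object",
    "properties": {
        "product": {"type": "string"},
        "sentiment": {"type": "string", "enum": ["positive", "neutral", "negative"]},
        "issue_type": {"type": "string"},
    },
    "required": ["product", "sentiment"],
}
print(openai_schema_problems(review_schema))
# → ['$: additionalProperties must be false', "$: required is missing ['issue_type']"]
```

Adding `"additionalProperties": False` and listing all three fields in `required` makes the function return an empty list.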

Option D is incorrect because AI_COMPLETE verifies each generated token against the JSON schema to ensure conformity; if the model cannot generate a response that matches the schema, the call results in a validation error.

Option E is correct because setting the ‘temperature’ option to 0 is recommended for the most consistent results, regardless of the task or model, especially for structured outputs.

An administrator has configured the ‘CORTEX_MODELS_ALLOWLIST’ parameter to permit only the ‘mistral-large2’ model at the account level. A user with the ‘PUBLIC’ role, which has been granted ‘SNOWFLAKE.CORTEX_USER’ and ‘SNOWFLAKE."CORTEX-MODEL-ROLE-LLAMA3.1-70B"’, attempts to execute several ‘AI_COMPLETE’ queries.

Which of the following queries will successfully execute?


- A . Option A

- B . Option B

- C . Option C

- D . Option D

- E . Option E

A,B

Explanation:

Option A is correct. The query directly references ‘MISTRAL-LARGE2’, which is explicitly listed in the account-level ‘CORTEX_MODELS_ALLOWLIST’, so it will succeed.

Option B is correct. When a model name is provided as a string argument, Cortex first treats it as an identifier for a schema-level model object. If such an object is found, RBAC is applied. The user's role has been granted ‘SNOWFLAKE."CORTEX-MODEL-ROLE-LLAMA3.1-70B"’, which provides access to the ‘LLAMA3.1-70B’ model object in ‘SNOWFLAKE.MODELS’, regardless of the ‘CORTEX_MODELS_ALLOWLIST’ setting for plain model names.

Option C is incorrect because ‘llama3.1-70b’ as a plain model name is not in the ‘CORTEX_MODELS_ALLOWLIST’. Although the user has access to the model object, a plain string like ‘llama3.1-70b’ does not match a model object by that name, so it is looked up in the allowlist, which contains only ‘MISTRAL-LARGE2’.
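The lookup order described for options B and C can be modeled in a few lines. This is a simplified, hypothetical model of the behavior explained above, not Snowflake's implementation: it compares names case-insensitively (real quoted identifiers are case-sensitive), and the model arguments in the loop are illustrative rather than the actual option queries.

```python
ALLOWLIST = {"mistral-large2"}                  # account-level CORTEX_MODELS_ALLOWLIST
GRANTED = {'SNOWFLAKE.MODELS."LLAMA3.1-70B"'}   # model objects the role can access


def model_call_allowed(model_arg: str, allowlist: set[str], granted_model_objects: set[str]) -> bool:
    """Simplified model of the resolution order:
    1. Treat the argument as a model-object identifier; if the role has been
       granted access to that object, RBAC allows the call.
    2. Otherwise treat it as a plain model name and check the allowlist."""
    if model_arg.upper() in granted_model_objects:
        return True
    return model_arg.lower() in allowlist


for arg in ["mistral-large2", 'snowflake.models."llama3.1-70b"', "llama3.1-70b", "snowflake-arctic"]:
    print(f"{arg}: {model_call_allowed(arg, ALLOWLIST, GRANTED)}")
# mistral-large2: True
# snowflake.models."llama3.1-70b": True
# llama3.1-70b: False
# snowflake-arctic: False
```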

Option D is incorrect. ‘snowflake-arctic’ is neither in the ‘CORTEX_MODELS_ALLOWLIST’ nor does the user have an application role granting access to a ‘snowflake-arctic’ model object.

Option E is incorrect because ‘ALTER ACCOUNT’ operations can only be performed by the ‘ACCOUNTADMIN’ role, not by the ‘PUBLIC’ role.

An operations team is investigating an issue with a generative AI application powered by Snowflake Cortex Analyst, where users reported unexpected behavior in generated SQL. To diagnose the problem, they examine the detailed event logs captured by Snowflake AI Observability.

Which categories of information can they expect to find in these event tables to assist their investigation?

- A . The full text of the natural language questions submitted by the users.

- B . The exact SQL queries that Cortex Analyst generated in response to user questions.

- C . Any error messages or warnings that occurred during the processing of the request.

- D . The complete request and response bodies associated with the application’s execution steps.

- E . Real-time CPU and memory usage statistics for the Snowflake virtual warehouse executing the LLM inference.

A,B,C,D

Explanation:

Cortex Analyst logs requests to an event table to aid in refining semantic models or views. These logs are comprehensive and include specific details crucial for debugging and monitoring. The captured information includes ‘The user who asked the question’, ‘The question asked’, ‘Generated SQL’, ‘Errors and/or warnings’, ‘Request and response bodies’, and ‘Other metadata’. Therefore, options A, B, C, and D are all accurate descriptions of the data available in these event logs.

Option E, real-time CPU and memory usage, refers to infrastructure monitoring metrics rather than the content specifically logged within the application’s execution event table by Cortex Analyst itself.



- A . Option A

- B . Option B

- C . Option C

- D . Option D

- E . Option E

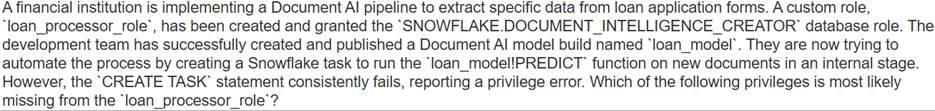

D

Explanation:

While the database role provides broad access to Document AI features and the ability to work on model builds, creating pipeline components like streams and tasks requires specific object privileges on the relevant database objects. For creating a task, the ‘CREATE TASK’ privilege on the schema where the task is being created is explicitly required.

Option A (‘CREATE DATABASE’) is a much higher-level privilege and is not directly relevant to creating a task within an existing schema.

Option B (‘USAGE’ on the warehouse) is necessary for the task to use that warehouse when it runs, but not for the ‘CREATE TASK’ statement itself to succeed.

Option C (EXECUTE IMMEDIATE) is not a standard privilege for creating tasks.

Option E (‘ALTER STAGE’) is for modifying stage properties, not for creating tasks.

A development team is implementing a suite of generative AI applications on Snowflake, using both SQL functions and the Cortex REST API. They prioritize content safety and plan to integrate Cortex Guard wherever possible. Considering the various interfaces for interacting with Snowflake Cortex LLMs, which of the following interfaces and functions support the direct use of Cortex Guard via the ‘guardrails’ argument or an equivalent configuration?

- A . The ‘SNOWFLAKE.CORTEX.CLASSIFY_TEXT’ SQL function for text classification tasks.

- B . The ‘SNOWFLAKE.CORTEX.COMPLETE’ SQL function for generative AI tasks.

- C . The Snowflake Cortex LLM REST API when invoking the ‘/api/v2/cortex/inference:complete’ endpoint.

- D . The ‘Cortex Playground’ (Public Preview) when testing prompts and model settings.

- E . The ‘SNOWFLAKE.CORTEX.TRY_COMPLETE’ SQL function, which is the error-tolerant version of ‘COMPLETE’.

B,C,D,E

Explanation:

Cortex Guard is a feature designed to filter potentially unsafe and harmful responses from a language model, and it is an option of the ‘AI_COMPLETE’ (or ‘SNOWFLAKE.CORTEX.COMPLETE’) function.

Option B is correct because ‘COMPLETE’ (SNOWFLAKE.CORTEX) supports the ‘guardrails’ argument.

Option C is correct because the Cortex REST API endpoint ‘/api/v2/cortex/inference:complete’ accepts ‘guardrails’ as an optional JSON argument.

Option D is correct because the Cortex Playground lets users ‘Enable Cortex Guard’ to apply safeguards.

Option E is correct because ‘TRY_COMPLETE’ (SNOWFLAKE.CORTEX) performs the same operation as ‘COMPLETE’ and also supports the ‘guardrails’ argument.

Option A is incorrect because ‘CLASSIFY_TEXT’ is a task-specific function without a ‘guardrails’ option; Cortex Guard is associated with generative completion functions such as ‘COMPLETE’.

A team of data application developers is leveraging Snowflake Copilot to streamline the creation of analytical SQL queries within their Streamlit in Snowflake application. They observe that Copilot sometimes struggles with complex joins or provides suboptimal queries when dealing with a newly integrated, deeply nested dataset.

Based on Snowflake’s best practices and known limitations, which actions or considerations would help improve Copilot’s performance in this scenario?

- A . Implement curated views with descriptive and easy-to-understand names for views and columns, appropriate data types, and pre-define common/complex joins to simplify the underlying schema for Copilot.

- B . Enable the CORTEX_MODELS_ALLOWLIST parameter to restrict Copilot to only use the largest available LLMs, thereby guaranteeing higher accuracy for complex queries.

- C . Break down complex requests into simpler, multi-turn questions, as Copilot is designed to build complex queries through conversational refinement and follow-up questions.

- D . Grant Copilot direct access to the raw data using ACCOUNTADMIN privileges, allowing it to infer schema relationships more effectively from data content.

- E . Ensure that a database and schema are explicitly selected for the current session, and that column names are meaningful, to provide Copilot with better context for query generation.

A,C,E

Explanation:

To improve Snowflake Copilot’s performance, creating curated views with descriptive names, appropriate data types, and capturing common/complex joins is a key best practice. Copilot can build complex queries through a conversation by asking follow-up questions. It also uses the names of databases, schemas, tables, and columns, and their data types to determine available data, so ensuring these are meaningful and correctly set for the session is crucial for relevant responses.

Option B is incorrect because CORTEX_MODELS_ALLOWLIST controls access to specific LLMs but doesn’t guarantee higher accuracy for Copilot’s SQL generation.

Option D is incorrect because Snowflake Copilot does not have access to the data inside tables; it operates on metadata. Granting additional privileges would not change this, and granting ACCOUNTADMIN would violate the least-privilege best practice.

An ML engineer is planning a fine-tuning project for a llama3.1-8b model to summarize long customer support tickets. They are considering the impact of dataset size and max_epochs on cost and performance, as well as the behavior of the fine-tuned model for inference.

Which statements about cost and performance in Snowflake Cortex Fine-tuning are true? (Select all that apply)

- A . When fine-tuning a llama3.1-8b model, the maximum input context (for the prompt) is 20,000 tokens, and the maximum output context (for the completion) is 4,000 tokens.

- B . The compute cost for fine-tuning is primarily determined by multiplying the number of input tokens in the training data by the number of epochs trained.

- C . For optimal cost efficiency, especially with smaller datasets, the max_epochs parameter should be consistently set to its maximum allowed value of 10 to ensure the best model performance.

- D . The cost for inferencing with a fine-tuned model using the COMPLETE function is solely based on the number of output tokens generated by the model, as input token costs are absorbed during fine-tuning.

- E . For large fine-tuning jobs with substantial datasets, particularly when exceeding millions of rows, utilizing Snowpark-optimized warehouses is recommended for improved performance during the training phase.

A,B,E

Explanation:

Option A is correct. For the llama3.1-8b model, the context window specifically allotted for the prompt during fine-tuning is 20,000 tokens, and for the completion is 4,000 tokens.
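These limits suggest checking training rows before starting a job. The sketch below assumes token counts for each prompt and completion have already been computed with a tokenizer of your choice (an assumption; counts from a different tokenizer are only approximate), and simply partitions the rows by the stated limits.

```python
PROMPT_TOKEN_LIMIT = 20_000      # llama3.1-8b prompt limit stated above
COMPLETION_TOKEN_LIMIT = 4_000   # llama3.1-8b completion limit stated above


def split_by_limits(rows: list[tuple[int, int]]) -> tuple[list[tuple[int, int]], list[tuple[int, int]]]:
    """Partition (prompt_tokens, completion_tokens) pairs into rows that fit and rows that do not."""
    fits, too_long = [], []
    for prompt_tokens, completion_tokens in rows:
        ok = prompt_tokens <= PROMPT_TOKEN_LIMIT and completion_tokens <= COMPLETION_TOKEN_LIMIT
        (fits if ok else too_long).append((prompt_tokens, completion_tokens))
    return fits, too_long


# Hypothetical token counts for three support tickets and their summaries.
fits, too_long = split_by_limits([(12_000, 600), (25_000, 800), (18_000, 4_500)])
print(fits)      # → [(12000, 600)]
print(too_long)  # → [(25000, 800), (18000, 4500)]
```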

Option B is correct. The compute cost incurred for Cortex Fine-tuning is based on the number of tokens used in training, which is calculated as ‘number of input tokens × number of epochs trained’.
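The formula can be turned into a quick estimate. In this sketch the credit rate is a caller-supplied placeholder, not a published price (check Snowflake's consumption documentation for the real rate), and the epoch range check reflects the 1 to 10 range given for ‘max_epochs’ below.

```python
MIN_EPOCHS, MAX_EPOCHS = 1, 10  # allowed max_epochs range stated in the explanation


def training_tokens(input_tokens: int, epochs: int) -> int:
    """Tokens billed for fine-tuning: input tokens in the training data times epochs trained."""
    if not MIN_EPOCHS <= epochs <= MAX_EPOCHS:
        raise ValueError(f"epochs must be between {MIN_EPOCHS} and {MAX_EPOCHS}")
    return input_tokens * epochs


def estimated_credits(tokens: int, credits_per_million_tokens: float) -> float:
    """Convert a token count to credits at a caller-supplied (hypothetical) rate."""
    return tokens / 1_000_000 * credits_per_million_tokens


tokens = training_tokens(input_tokens=2_500_000, epochs=3)
print(tokens)                          # → 7500000
print(estimated_credits(tokens, 1.0))  # → 7.5 (1.0 is a placeholder rate)
```

Because cost scales linearly with epochs, tripling the epoch count triples the training tokens, which is why option C's advice to always use the maximum is not cost-efficient.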

Option C is incorrect. While max_epochs can be set to a value from 1 to 10 (inclusive), the default is automatically determined by the system. Setting it to the maximum for ‘optimal cost efficiency’ is not universally recommended, as a higher number of epochs directly increases the compute cost, and the goal is often to select the smallest model that satisfies the need.

Option D is incorrect. When using the COMPLETE function for inference with a fine-tuned model, *both* input and output tokens incur compute cost.

Option E is correct. Snowpark-optimized warehouses are recommended for Snowpark workloads with large memory requirements, such as ML training use cases, particularly if the training data has more than 5 million rows. Fine-tuning is an ML training process, so this guidance applies.

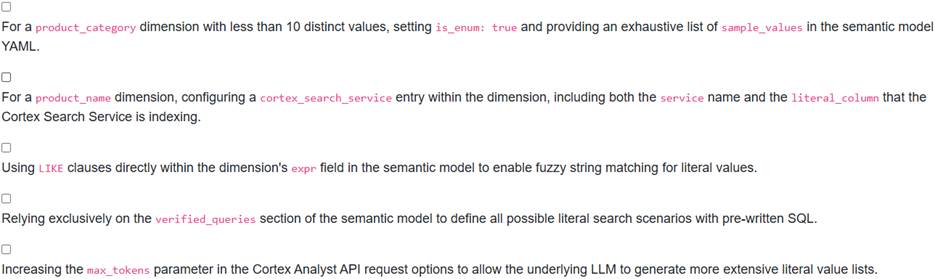

A data engineering team is tasked with improving the accuracy of a Cortex Analyst solution for a large e-commerce product catalog. Users frequently ask natural language questions involving specific product names, brands, and categories. The team observes that Cortex Analyst sometimes struggles to identify and correctly filter by these literal values in the generated SQL.

Which of the following configurations or approaches, within the semantic model, can effectively enhance Cortex Analyst’s ability to precisely identify and use literal values for filtering, based on Snowflake’s best practices?

- A . Option A

- B . Option B

- C . Option C

- D . Option D

- E . Option E

A,B

Explanation:

Options A and B are correct. For dimensions with low cardinality (around 1-10 distinct values), setting ‘is_enum: true’ and providing an exhaustive ‘sample_values’ list ensures Cortex Analyst chooses only from that predefined list, improving literal usage. For higher-cardinality dimensions, integrating a Cortex Search Service through the dimension's Cortex Search Service entry, specifying the ‘service’ name along with its other settings, allows semantic search over the underlying data to find appropriate literal values.

Option C is incorrect because Cortex Analyst relies on semantic similarity search or Cortex Search for literal values, not on direct ‘LIKE’ clauses in the ‘expr’ field.
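The choice between the two approaches depends on cardinality, which a small helper can make explicit when drafting a semantic model. This is a hypothetical sketch: the threshold of 10 follows the "around 1-10 distinct values" guidance above as a rough rule, the returned dictionaries only mirror the ‘is_enum’ and ‘sample_values’ fields named above, and `use_cortex_search_service` is an invented marker, not a semantic model key.

```python
ENUM_MAX_DISTINCT = 10  # rough guide from "around 1-10 distinct values"


def literal_strategy(dimension: str, distinct_values: list[str]) -> dict:
    """Suggest an enum-style dimension for low cardinality, otherwise flag it
    for a Cortex Search Service (the flag key is hypothetical)."""
    unique = sorted(set(distinct_values))
    if len(unique) <= ENUM_MAX_DISTINCT:
        return {"name": dimension, "sample_values": unique, "is_enum": True}
    return {"name": dimension, "use_cortex_search_service": True}


print(literal_strategy("category", ["Shoes", "Bags", "Shoes", "Hats"]))
# → {'name': 'category', 'sample_values': ['Bags', 'Hats', 'Shoes'], 'is_enum': True}
```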

Option D is incorrect because while ‘verified_queries’ improve accuracy for specific, known questions, they are not a scalable solution for all possible literal search scenarios and are not the primary mechanism for improving general literal value identification.

Option E is incorrect because the ‘max_tokens’ parameter controls the length of the LLM’s output response, not its ability to identify or filter by literal values.