Free AIF-C01 Practice Exam Questions Online

A company is using an Amazon Bedrock base model to summarize documents for an internal use case. The company trained a custom model to improve the summarization quality.

Which action must the company take to use the custom model through Amazon Bedrock?

- A . Purchase Provisioned Throughput for the custom model.

- B . Deploy the custom model in an Amazon SageMaker endpoint for real-time inference.

- C . Register the model with the Amazon SageMaker Model Registry.

- D . Grant access to the custom model in Amazon Bedrock.

A

Explanation:

A custom model that a company creates in Amazon Bedrock (for example, by fine-tuning or continued pre-training a base model) runs on dedicated capacity. To run inference on the custom model, the company must purchase Provisioned Throughput for that model. It then invokes the model by using the ARN of the provisioned model.

Option A (Correct): "Purchase Provisioned Throughput for the custom model": This is the correct answer because Provisioned Throughput allocates the model units that Amazon Bedrock needs to serve the custom model for inference.

Option B: "Deploy the custom model in an Amazon SageMaker endpoint for real-time inference" is incorrect because a model customized in Amazon Bedrock is hosted and invoked through Amazon Bedrock, not through a SageMaker endpoint.

Option C: "Register the model with the Amazon SageMaker Model Registry" is incorrect because the model registry helps with model versioning and management, but it does not make a Bedrock custom model available for inference.

Option D: "Grant access to the custom model in Amazon Bedrock" is incorrect because model access requests apply to the base foundation models that providers offer. A custom model that the company created already belongs to its account, but the company still cannot invoke it until Provisioned Throughput is in place.

Reference: Amazon Bedrock User Guide: Provisioned Throughput, which describes purchasing Provisioned Throughput to use a custom model for inference.
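The sketch below shows, under stated assumptions, the two steps for serving a Bedrock custom model with the AWS SDK for Python (Boto3). The first step purchases Provisioned Throughput for the custom model. The second sends inference requests to the resulting provisioned model ARN.

The custom model ID, the provisioned model name `summarizer-pt`, and the request body are hypothetical placeholders. The request body format depends on the base model family. Creating Provisioned Throughput incurs charges.

Only `provisioned_throughput_request` runs without AWS credentials. The SDK is imported inside the function that calls AWS.

```python
import json
import time


def provisioned_throughput_request(custom_model_id: str, name: str, model_units: int = 1) -> dict:
    """Keyword arguments for the Bedrock CreateProvisionedModelThroughput call."""
    if model_units < 1:
        raise ValueError("model_units must be at least 1")
    # commitmentDuration is omitted, which requests no-commitment (hourly) capacity.
    return {"modelId": custom_model_id, "provisionedModelName": name, "modelUnits": model_units}


def summarize_with_custom_model(custom_model_id: str, request_body: dict) -> dict:
    import boto3  # lazy import: the request builder above has no AWS SDK dependency

    bedrock = boto3.client("bedrock")
    runtime = boto3.client("bedrock-runtime")
    created = bedrock.create_provisioned_model_throughput(
        **provisioned_throughput_request(custom_model_id, "summarizer-pt")  # hypothetical name
    )
    pt_arn = created["provisionedModelArn"]
    # Poll until the provisioned capacity leaves the "Creating" state.
    while True:
        status = bedrock.get_provisioned_model_throughput(provisionedModelId=pt_arn)["status"]
        if status != "Creating":
            break
        time.sleep(60)
    if status != "InService":
        raise RuntimeError(f"Provisioned Throughput status is {status}")
    # Inference targets the Provisioned Throughput ARN, not the base model ID.
    response = runtime.invoke_model(modelId=pt_arn, body=json.dumps(request_body))
    return json.loads(response["body"].read())
```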

A company wants to classify images of different objects based on custom features extracted from a dataset.

Which solution will meet this requirement with the LEAST development effort?

- A . Use traditional ML algorithms with custom features extracted from the dataset.

- B . Use a pre-trained deep learning model and fine-tune the model on the dataset.

- C . Use a generative adversarial network (GAN) model to classify the images.

- D . Use a support vector machine (SVM) with manually engineered features for classification.

B

Explanation:

Fine-tuning a pre-trained deep learning model (transfer learning) requires the least development effort because:

- The model has already learned general visual features.

- Only limited additional training on the custom dataset is required.

- The network extracts features automatically, so no manual feature engineering is needed.

AWS guidance strongly recommends transfer learning for image classification tasks when development speed and efficiency are priorities.

Why the other options are incorrect:

A and D require extensive feature engineering and tuning.

C (GANs) are designed for data generation, not classification.

AWS AI document references:

Transfer Learning for Computer Vision on AWS

Image Classification Best Practices with SageMaker

Deep Learning Model Reuse and Fine-Tuning

A company wants to use Amazon Bedrock. The company needs to review which security aspects it is responsible for when using Amazon Bedrock.

Which security aspect is the company responsible for?

- A . Patching and updating the versions of Amazon Bedrock

- B . Protecting the infrastructure that hosts Amazon Bedrock

- C . Securing the company’s data in transit and at rest

- D . Provisioning Amazon Bedrock within the company network

C

Explanation:

With Amazon Bedrock, AWS handles infrastructure security and patching (shared responsibility model).

Customers are responsible for securing their data (encryption, IAM, policies) both in transit and at rest.

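Patching and updating Amazon Bedrock (A), protecting the infrastructure that hosts it (B), and provisioning the service (D) are AWS responsibilities. Amazon Bedrock is a fully managed service, so customers do not provision it within their own network.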

Reference: AWS Shared Responsibility Model

A company is using Amazon Bedrock to develop an AI assistant. The AI assistant will respond to customer questions about the company’s products. The company conducts initial tests of the AI assistant. The company finds that the AI assistant’s responses do not represent the company well and might damage customer perception.

The company needs a prompt engineering technique to improve the AI assistant’s responses so that the responses better represent the company.

Which solution will meet this requirement?

- A . Use zero-shot prompting.

- B . Use chain-of-thought (CoT) prompting.

- C . Use Retrieval Augmented Generation (RAG).

- D . Provide a persona and tone in the prompt.

D

Explanation:

AWS documentation for Amazon Bedrock explains that prompt engineering is a primary mechanism for controlling the behavior, tone, and style of foundation model outputs. Providing a persona and tone within the prompt allows organizations to align model responses with brand voice, customer expectations, and business values.

In this use case, the AI assistant’s responses risk damaging customer perception. This indicates a mismatch in tone, style, or personality rather than a lack of knowledge. AWS prompt engineering guidance notes that prompts can include role definitions, communication style, formality level, and behavioral constraints to guide the model’s outputs. By defining a persona, such as “a professional, friendly company representative,” the company makes the model more likely to generate responses that consistently represent the company.

Other options are less appropriate. Zero-shot prompting provides no additional guidance beyond the task itself and does not influence tone. Chain-of-thought prompting is designed to improve reasoning transparency, not brand alignment. Retrieval Augmented Generation (RAG) enhances factual accuracy by injecting external knowledge sources, but it does not inherently control tone or personality.

AWS highlights persona-based prompting as a best practice when building customer-facing generative AI applications, particularly chatbots and assistants. This approach improves consistency, reduces reputational risk, and ensures outputs align with organizational communication standards. Therefore, providing a persona and tone in the prompt is the most effective solution.
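The sketch below shows, under stated assumptions, how a persona and tone can be placed in the system prompt of an Amazon Bedrock Converse API call through Boto3. The persona text, the placeholder company name "AnyCompany", the model ID, and the low temperature are illustrative assumptions, not values from the question.

The request builder is a pure function. Boto3 is imported only inside `ask`, which calls AWS.

```python
PERSONA = (
    "You are a customer support representative for AnyCompany (a placeholder name). "
    "Speak in a professional, friendly, and concise tone. "
    "Only answer questions about AnyCompany products; if you are unsure, say so "
    "and offer to connect the customer with a human agent."
)


def build_converse_request(model_id: str, question: str, persona: str = PERSONA) -> dict:
    """Keyword arguments for bedrock-runtime converse() with a persona in the system prompt."""
    return {
        "modelId": model_id,
        "system": [{"text": persona}],  # persona and tone live in the system prompt
        "messages": [{"role": "user", "content": [{"text": question}]}],
        # A lower temperature is an assumption aimed at keeping the tone consistent.
        "inferenceConfig": {"temperature": 0.3, "maxTokens": 300},
    }


def ask(model_id: str, question: str) -> str:
    import boto3  # lazy import: the request builder has no AWS SDK dependency

    runtime = boto3.client("bedrock-runtime")
    response = runtime.converse(**build_converse_request(model_id, question))
    return response["output"]["message"]["content"][0]["text"]
```

The persona is kept in `system` rather than prepended to every user message. The user turn then contains only the customer's question, and the same persona applies across a whole conversation.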

A company wants to improve a large language model (LLM) for content moderation within 3 months.

The company wants the model to moderate content according to the company’s values and ethics.

The LLM must also be able to handle emerging trends and new types of problematic content.

Which solution will meet these requirements?

- A . Conduct continuous pre-training on a large amount of text-based internet content.

- B . Create a high-quality dataset of historical moderation decisions.

- C . Fine-tune the LLM on a diverse set of general ethical guidelines from various sources.

- D . Conduct reinforcement learning from human feedback (RLHF) by using real-time input from skilled moderators.

D

Explanation:

Reinforcement learning from human feedback (RLHF) is the most effective approach to align large language models with company-specific values, ethics, and evolving moderation requirements. AWS documentation explains that RLHF uses direct human input to guide model behavior, enabling models to learn preferences that cannot be fully captured through static datasets or generic fine-tuning.

In this scenario, the company requires improvement within a short time frame of three months, alignment with organizational ethics, and adaptability to emerging trends and new forms of harmful content. RLHF meets these needs by incorporating real-time feedback from skilled human moderators, allowing the model to rapidly adjust its responses based on expert judgment.

AWS highlights that RLHF is particularly valuable for content moderation, safety alignment, and policy enforcement, where decisions are nuanced and standards evolve. Because desirable behaviors are rewarded and undesirable outputs are penalized, the model improves continuously in a controlled and targeted manner.

The other options are less suitable. Continuous pre-training on large internet datasets is time-consuming, resource-intensive, and may introduce content misaligned with company values. Historical moderation datasets may not reflect new or emerging content patterns. Fine-tuning on general ethical guidelines lacks the specificity required for company-defined moderation policies and does not adapt quickly to new risks.

AWS positions RLHF as a key technique in responsible generative AI development, enabling organizations to maintain human oversight while improving model safety and alignment. Therefore, using RLHF with real-time input from skilled moderators is the most effective and compliant solution for this use case.
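RLHF commonly begins by training a reward model on pairwise human judgments: a moderator marks which of two outputs is better. The reward model learns to score the preferred output higher by minimizing the pairwise loss -log(sigmoid(r_chosen - r_rejected)).

The sketch below shows only that reward-model step, using a linear model over hypothetical features. The later policy-optimization step (for example, PPO) that fine-tunes the LLM against the reward model is omitted. The feature names and feedback pairs are invented for illustration.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def preference_loss(r_chosen: float, r_rejected: float) -> float:
    """Pairwise (Bradley-Terry) loss: -log(sigmoid(r_chosen - r_rejected))."""
    m = r_chosen - r_rejected
    return math.log1p(math.exp(-m)) if m >= 0 else -m + math.log1p(math.exp(m))


def reward(w: Vector, x: Vector) -> float:
    """Linear reward model: dot product of weights and output features."""
    return sum(wi * xi for wi, xi in zip(w, x))


def update(w: List[float], chosen: Vector, rejected: Vector, lr: float = 0.5) -> List[float]:
    """One gradient step on a single moderator judgment (chosen preferred over rejected)."""
    m = reward(w, chosen) - reward(w, rejected)
    step = lr * (1.0 - sigmoid(m))  # equals -lr * d(loss)/d(m)
    return [wi + step * (c - r) for wi, c, r in zip(w, chosen, rejected)]


# Hypothetical output features: [content_is_harmful, output_removes_content, output_cites_policy]
feedback = [
    ([1.0, 1.0, 1.0], [1.0, 0.0, 0.0]),  # harmful post: moderator prefers removal with a policy citation
    ([0.0, 0.0, 1.0], [0.0, 1.0, 0.0]),  # benign post: moderator prefers keeping it, with a policy note
]
w = [0.0, 0.0, 0.0]
for _ in range(100):  # new judgments can be folded in the same way as new content types appear
    for chosen, rejected in feedback:
        w = update(w, chosen, rejected)
```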

Which term is an example of output vulnerability?

- A . Model misuse

- B . Data poisoning

- C . Data leakage

- D . Parameter stealing

A

Explanation:

Model misuse is a key example of an output vulnerability: a model’s output is intentionally or unintentionally used in ways that cause harm or deviate from the model’s intended purpose. According to AWS Responsible AI guidance, output vulnerabilities are flaws or weaknesses in how a model’s predictions or generations are interpreted or used by external systems or users. Examples include using a generative model to produce harmful content, manipulating outputs to spread misinformation, or exposing private information.

AWS recommends safeguards such as Amazon Bedrock Guardrails, human-in-the-loop (HITL) validation, and ethical guidelines to mitigate these output risks. In contrast, data poisoning and data leakage are input-level vulnerabilities that affect the data a model is trained on. Parameter stealing is a model-level attack in which internal parameters are extracted. Model misuse specifically describes how outputs can be abused, which makes it a textbook example of an output vulnerability.

Referenced AWS AI/ML Documents and Study Guides:

AWS Responsible AI Whitepaper: Output Vulnerabilities

Amazon Bedrock Documentation: Guardrails for Responsible Generation
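The sketch below shows, under stated assumptions, how model output could be screened with the Amazon Bedrock ApplyGuardrail API through Boto3 before it reaches users. It assumes a guardrail already exists; the guardrail ID is a hypothetical placeholder. Setting `source="OUTPUT"` asks the guardrail to evaluate model output rather than user input.

The response handling is a pure function, so it can run without AWS access.

```python
from typing import Any, Dict


def text_after_guardrail(original: str, response: Dict[str, Any]) -> str:
    """Return the text to show the user, given an ApplyGuardrail response."""
    if response.get("action") == "GUARDRAIL_INTERVENED":
        outputs = response.get("outputs") or []
        # When the guardrail intervenes, outputs holds the replacement (blocked or masked) text.
        return outputs[0]["text"] if outputs else ""
    return original  # no intervention: pass the model output through unchanged


def screen_model_output(text: str, guardrail_id: str, guardrail_version: str = "DRAFT") -> str:
    import boto3  # lazy import so text_after_guardrail has no AWS SDK dependency

    runtime = boto3.client("bedrock-runtime")
    response = runtime.apply_guardrail(
        guardrailIdentifier=guardrail_id,  # hypothetical guardrail ID supplied by the caller
        guardrailVersion=guardrail_version,
        source="OUTPUT",  # evaluate model output, not user input
        content=[{"text": {"text": text}}],
    )
    return text_after_guardrail(text, response)
```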

A company is building a customer service chatbot. The company wants the chatbot to improve its responses by learning from past interactions and online resources.

Which AI learning strategy provides this self-improvement capability?

- A . Supervised learning with a manually curated dataset of good responses and bad responses

- B . Reinforcement learning with rewards for positive customer feedback

- C . Unsupervised learning to find clusters of similar customer inquiries

- D . Supervised learning with a continuously updated FAQ database

B

Explanation:

Reinforcement learning allows a model to learn and improve over time based on feedback from its environment. In this case, the chatbot can improve its responses by being rewarded for positive customer feedback, which aligns well with the goal of self-improvement based on past interactions and new information.

Option B (Correct): "Reinforcement learning with rewards for positive customer feedback": This is the correct answer as reinforcement learning enables the chatbot to learn from feedback and adapt its behavior accordingly, providing self-improvement capabilities.

Option A: "Supervised learning with a manually curated dataset" is incorrect because it does not support continuous learning from new interactions.

Option C: "Unsupervised learning to find clusters of similar customer inquiries" is incorrect because unsupervised learning does not provide a mechanism for improving responses based on feedback.

Option D: "Supervised learning with a continuously updated FAQ database" is incorrect because it still relies on manually curated data rather than self-improvement from feedback.

Reference: Reinforcement Learning on AWS: AWS provides reinforcement learning frameworks that can be used to train models to improve their performance based on feedback.
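A multi-armed bandit is one of the simplest reinforcement learning settings, and it shows the reward loop described above. The chatbot tries candidate response strategies, receives a reward of 1.0 for positive customer feedback, and gradually favors whichever strategy earns the most positive feedback.

This is a minimal epsilon-greedy sketch. The three strategies and their feedback rates are hypothetical, and a production chatbot would typically use a richer state and policy than this.

```python
import random
from typing import List, Sequence


class EpsilonGreedyResponder:
    """Learns which response strategy earns the most positive feedback."""

    def __init__(self, n_strategies: int, epsilon: float = 0.1, seed: int = 0):
        self.epsilon = epsilon  # fraction of turns spent exploring
        self.counts: List[int] = [0] * n_strategies
        self.values: List[float] = [0.0] * n_strategies  # running mean reward per strategy
        self.rng = random.Random(seed)

    def choose(self) -> int:
        for i, count in enumerate(self.counts):  # try every strategy once first
            if count == 0:
                return i
        if self.rng.random() < self.epsilon:  # explore a random strategy
            return self.rng.randrange(len(self.counts))
        return max(range(len(self.values)), key=self.values.__getitem__)  # exploit the best so far

    def update(self, strategy: int, reward: float) -> None:
        """Fold one reward (1.0 = positive feedback, 0.0 = otherwise) into the running mean."""
        self.counts[strategy] += 1
        self.values[strategy] += (reward - self.values[strategy]) / self.counts[strategy]


def simulate(positive_rates: Sequence[float], rounds: int = 2000, seed: int = 0) -> EpsilonGreedyResponder:
    """Simulate conversations where each strategy gets positive feedback at a hypothetical fixed rate."""
    agent = EpsilonGreedyResponder(len(positive_rates), seed=seed)
    customers = random.Random(seed + 1)
    for _ in range(rounds):
        strategy = agent.choose()
        reward = 1.0 if customers.random() < positive_rates[strategy] else 0.0
        agent.update(strategy, reward)
    return agent


agent = simulate([0.2, 0.5, 0.8])
```

The epsilon term keeps a small share of turns exploring. This lets the agent notice when a strategy that was previously worse starts earning better feedback.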

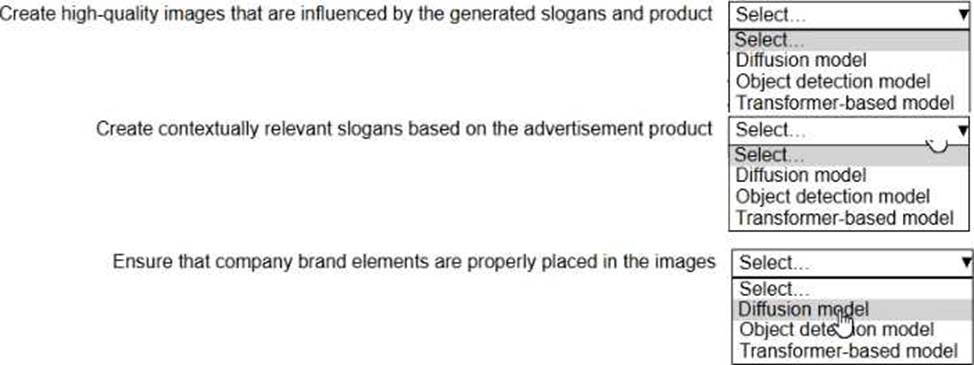

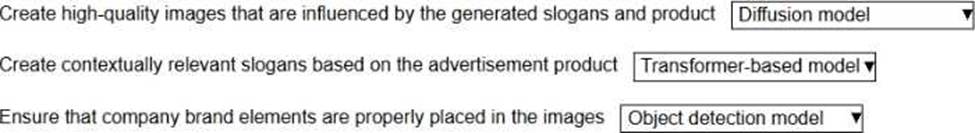

HOTSPOT

A company wants to develop a solution that uses generative AI to create content for product advertisements, including sample images and slogans.

Select the correct model type from the following list for each action. Each model type should be selected one time. (Select THREE.)

• Diffusion model

• Object detection model

• Transformer-based model

Explanation:

Diffusion models are state-of-the-art generative models for creating high-quality, realistic images from text prompts or other forms of conditioning. They are the foundational technology behind image generators such as Amazon Titan Image Generator, which is available in Amazon Bedrock.

Reference: AWS Generative AI Overview; Diffusion Models Explained (AWS Blog)

Transformer-based models (such as GPT or Amazon Titan Text) are designed for generating and understanding natural language. These models can generate coherent, contextually relevant slogans based on product information.

Reference: AWS Generative AI on Bedrock; Transformers Explained (AWS)

Object detection models are designed to identify and locate objects within images, which makes them suitable for verifying that specific brand elements (like logos or products) are correctly positioned in the generated content.

Reference: Amazon Rekognition Object Detection; Object Detection Overview (AWS)

A hospital developed an AI system to provide personalized treatment recommendations for patients. The AI system must provide the rationale behind the recommendations and make the insights accessible to doctors and patients.

Which responsible AI principle does this requirement represent?

- A . Explainability

- B . Privacy and security

- C . Fairness

- D . Data governance

A

Explanation:

The correct answer is A (Explainability). According to AWS Responsible AI documentation, explainability is an AI system’s ability to clearly communicate why it produced a given result. In healthcare, clinical decision support systems must provide traceable, understandable reasoning, especially when they generate treatment recommendations. AWS highlights explainability as critical for high-impact domains such as medicine, because doctors and patients must be able to trust and understand the basis for AI-driven decisions. Tools such as Amazon SageMaker Clarify support feature attribution. This helps clinicians see which patient factors (for example, age, symptoms, or lab values) influenced a recommendation.

Privacy and security (B) protect data but do not provide a rationale. Fairness (C) ensures equitable treatment across demographic groups but does not explain decisions. Data governance (D) focuses on handling and controlling data, not on the transparency of model decisions. Explainability is the AWS principle that ensures clinical users can interpret, validate, and rely on AI-generated recommendations for patient care.

Referenced AWS Documentation:

AWS Responsible AI Whitepaper: Explainability

Amazon SageMaker Clarify: Feature Attribution and Model Insights
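Feature attribution can be illustrated with the simplest case: a linear scoring model. Here each feature's contribution relative to a baseline patient is weight × (value − baseline value). For a linear model, these contributions equal the SHAP values computed against that baseline, and they add up to the difference between the patient's score and the baseline score.

This is a sketch of that linear special case only. It is not the SageMaker Clarify API, which estimates SHAP values for arbitrary models. The feature names, weights, and patient values are hypothetical.

```python
from typing import Dict

Features = Dict[str, float]


def linear_score(weights: Features, bias: float, x: Features) -> float:
    """Score of a linear model: bias + sum of weight * feature value."""
    return bias + sum(weights[f] * x[f] for f in weights)


def linear_attributions(weights: Features, x: Features, baseline: Features) -> Features:
    """Contribution of each feature relative to a baseline patient.

    For a linear model these equal the SHAP values against that baseline, and they
    sum to linear_score(x) - linear_score(baseline).
    """
    return {f: weights[f] * (x[f] - baseline[f]) for f in weights}


# Hypothetical model and patient values, for illustration only.
weights = {"age": 0.02, "symptom_severity": 0.5, "lab_value": -0.1}
bias = 0.1
baseline = {"age": 50.0, "symptom_severity": 2.0, "lab_value": 5.0}
patient = {"age": 70.0, "symptom_severity": 4.0, "lab_value": 3.0}

attributions = linear_attributions(weights, patient, baseline)
for feature, value in sorted(attributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{feature}: {value:+.2f}")  # largest influence on the recommendation first
```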

A company wants to use AI for budgeting. The company made one budget manually and one budget by using an AI model. The company compared the budgets to evaluate the performance of the AI model. The AI model budget produced incorrect numbers.

Which option represents the AI model’s problem?

- A . Hallucinations

- B . Safety

- C . Interpretability

- D . Cost

A

Explanation:

Hallucinations occur when an AI model generates incorrect, fabricated, or misleading outputs that appear plausible but are factually wrong.

AWS generative AI guidance identifies hallucinations as:

- A common limitation of generative models.

- A risk when models generate numerical or factual data.

- A key reason for validation and human review in critical use cases.

Why the other options are incorrect:

Safety (B) relates to harmful or restricted content.

Interpretability (C) refers to understanding how a model makes decisions.

Cost (D) concerns operational expenses.

AWS AI document references:

Generative AI Risks and Limitations

Responsible Use of Foundation Models

Model Validation Best Practices
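The validation step described above can be partly automated, assuming a trusted reference budget exists, as it does in this scenario. The sketch compares the AI-generated budget with the manual budget line by line and flags any item that is missing, invented, or off by more than a relative tolerance, so that a human reviews it.

The line items, amounts, and the 1% tolerance are hypothetical. This is a generic check, not an AWS feature.

```python
import math
from typing import Dict, List


def flag_discrepancies(manual: Dict[str, float], ai: Dict[str, float], rel_tol: float = 0.01) -> List[str]:
    """Line items that are missing, extra, or differ by more than rel_tol between budgets."""
    flagged = []
    for item in sorted(set(manual) | set(ai)):
        if item not in manual or item not in ai:
            flagged.append(item)  # the model invented or dropped a line item
        elif not math.isclose(ai[item], manual[item], rel_tol=rel_tol):
            flagged.append(item)  # amount differs beyond the tolerance
    return flagged


# Hypothetical budgets, for illustration only.
manual_budget = {"salaries": 120000.0, "marketing": 30000.0, "travel": 8000.0}
ai_budget = {"salaries": 120000.0, "marketing": 45000.0, "travel": 8050.0, "consulting": 5000.0}

needs_review = flag_discrepancies(manual_budget, ai_budget)
```

With these values, the invented "consulting" line and the inflated "marketing" figure are flagged. The small "travel" difference falls within the 1% tolerance and is not flagged.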