Free AIF-C01 Practice Exam Questions

A company is building an application that needs to generate synthetic data that is based on existing data.

Which type of model can the company use to meet this requirement?

- A . Generative adversarial network (GAN)

- B . XGBoost

- C . Residual neural network

- D . WaveNet

A

Explanation:

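Generative adversarial networks (GANs) are a type of deep learning model used to generate synthetic data based on an existing dataset. A GAN consists of two neural networks that are trained against each other. The generator produces candidate samples, and the discriminator learns to tell generated samples from real ones. As training proceeds, the generator learns to produce data that the discriminator can no longer distinguish from the real data.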
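To make the generator/discriminator interplay concrete, here is a deliberately tiny GAN in pure Python. It is a minimal sketch under simplifying assumptions, not how production GANs are built. The "real" data is one-dimensional and drawn from a hypothetical normal distribution with mean 4. The generator is linear, `G(z) = a*z + b`, and the discriminator is a logistic regression, `D(x) = sigmoid(w*x + c)`. All function names and hyperparameters are illustrative. Because the discriminator is linear, the generator can at best learn the mean of the real data (its spread may even shrink). Real GANs use deep networks for both components so that they can match far richer distributions.

```python
import math
import random


def sigmoid(t: float) -> float:
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(real_mean: float = 4.0, real_std: float = 1.0,
                  steps: int = 3000, batch: int = 64,
                  lr_d: float = 0.2, lr_g: float = 0.002,
                  seed: int = 0) -> tuple[float, float]:
    """Adversarially train a 1-D linear generator against a logistic discriminator.

    Returns the generator parameters (a, b), where G(z) = a * z + b.
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator starts far from the real data
    w, c = 0.0, 0.0  # discriminator starts undecided (D = 0.5 everywhere)
    for _ in range(steps):
        real = [rng.gauss(real_mean, real_std) for _ in range(batch)]
        z = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * zi + b for zi in z]

        # Discriminator step: gradient ascent on mean log D(real) + mean log(1 - D(fake)).
        d_real = [sigmoid(w * x + c) for x in real]
        d_fake = [sigmoid(w * x + c) for x in fake]
        grad_w = (sum((1 - d) * x for d, x in zip(d_real, real))
                  - sum(d * x for d, x in zip(d_fake, fake))) / batch
        grad_c = (sum(1 - d for d in d_real) - sum(d_fake)) / batch
        w += lr_d * grad_w
        c += lr_d * grad_c

        # Generator step: gradient ascent on mean log D(G(z)) (the "non-saturating" loss),
        # which moves fake samples toward the region the discriminator labels as real.
        d_fake = [sigmoid(w * (a * zi + b) + c) for zi in z]
        grad_a = sum((1 - d) * w * zi for d, zi in zip(d_fake, z)) / batch
        grad_b = sum((1 - d) * w for d in d_fake) / batch
        a += lr_g * grad_a
        b += lr_g * grad_b
    return a, b


if __name__ == "__main__":
    a, b = train_toy_gan()
    sampler = random.Random(1)
    synthetic = [a * sampler.gauss(0.0, 1.0) + b for _ in range(5)]
    print(f"generator: a={a:.2f}, b={b:.2f}")
    print("synthetic samples:", [round(s, 2) for s in synthetic])
```

After training, `b` should sit near the real mean of 4. New synthetic samples come from feeding fresh noise through the generator.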

Option A (Correct): "Generative adversarial network (GAN)": This is the correct answer because GANs are specifically designed for generating synthetic data that closely resembles the real data they are trained on.

Option B: "XGBoost" is a gradient boosting algorithm for classification and regression tasks, not for generating synthetic data.

Option C: "Residual neural network" (ResNet) uses skip connections so that very deep networks can be trained effectively, typically for tasks such as image classification. It is not designed to generate synthetic data.

Option D: "WaveNet" is a generative model designed specifically for raw audio waveforms, for example in speech synthesis. It is not a general-purpose way to generate synthetic data from an existing dataset.

AWS AI Practitioner Reference: GANs on AWS for Synthetic Data Generation. AWS supports the use of GANs for creating synthetic datasets, which can be crucial for applications such as training machine learning models in environments where real data is scarce or sensitive.

A company creates video content. The company wants to use generative AI to generate new creative content and to reduce video creation time.

Which solution will meet these requirements in the MOST operationally efficient way?

- A . Use the Amazon Titan Image Generator model on Amazon Bedrock to generate intermediate images. Use video editing software to create videos.

- B . Use the Amazon Nova Canvas model on Amazon Bedrock to generate intermediate images. Use video editing software to create videos.

- C . Use the Amazon Nova Reel model on Amazon Bedrock to generate videos.

- D . Use the Amazon Nova Pro model on Amazon Bedrock to generate videos.

C

Explanation:

The correct answer is C because Amazon Nova Reel is the AWS foundation model designed for generative video use cases, providing end-to-end video generation using generative AI, which significantly reduces video creation time and eliminates the need for manual assembly.

According to the Amazon Bedrock documentation:

"Amazon Nova Reel enables users to generate short-form video content directly from prompts, including the ability to define style, motion, scenes, and transitions ― streamlining the generative content creation process."

This is the most operationally efficient choice as it does not require stitching together images or using external editing tools.
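As a hedged illustration of the end-to-end approach in option C, the sketch below builds a text-to-video request for Nova Reel. The request is submitted through the Amazon Bedrock Runtime `start_async_invoke` operation. Video generation is asynchronous: the finished video is written to an S3 location rather than returned in the response. The model ID (`amazon.nova-reel-v1:0`) and the request fields are assumptions based on the published Nova Reel request format, so verify them against the current Amazon Bedrock documentation. The helper name, prompt, and bucket are hypothetical.

```python
import json

# Assumed model ID and request layout for Nova Reel text-to-video; verify before use.
NOVA_REEL_MODEL_ID = "amazon.nova-reel-v1:0"


def build_nova_reel_request(prompt: str, s3_output_uri: str,
                            duration_seconds: int = 6, seed: int = 0) -> dict:
    """Return keyword arguments for the bedrock-runtime start_async_invoke call."""
    model_input = {
        "taskType": "TEXT_VIDEO",
        "textToVideoParams": {"text": prompt},
        "videoGenerationConfig": {
            "durationSeconds": duration_seconds,
            "fps": 24,
            "dimension": "1280x720",
            "seed": seed,
        },
    }
    return {
        "modelId": NOVA_REEL_MODEL_ID,
        "modelInput": model_input,
        # The generated video is written under this S3 prefix when the job finishes.
        "outputDataConfig": {"s3OutputDataConfig": {"s3Uri": s3_output_uri}},
    }


if __name__ == "__main__":
    request = build_nova_reel_request(
        "A paper boat drifting down a rain-soaked street, cinematic lighting",
        "s3://amzn-s3-demo-bucket/videos/",  # hypothetical bucket
    )
    print(json.dumps(request, indent=2))
    # With AWS credentials and model access configured, the job would be started with:
    #   import boto3
    #   client = boto3.client("bedrock-runtime")
    #   job = client.start_async_invoke(**request)
```

The request builder is kept separate from the AWS call so that the payload can be inspected and tested without credentials.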

Explanation of other options:

A and B: Titan Image Generator and Nova Canvas are image generation models. Both options produce only intermediate images, so the videos must still be assembled manually in video editing software. This is not operationally efficient.

D. Amazon Nova Pro is a multimodal understanding model. It accepts text, image, and video input and generates text, so it cannot generate videos. Nova Reel is the Amazon Nova model for video generation.

Referenced AWS AI/ML Documents and Study Guides:

Amazon Bedrock Model Directory: Nova Models Overview

AWS GenAI Foundation Model Comparison Guide

AWS Generative AI for Creators Whitepaper (2024)

A company uses Amazon Comprehend to analyze customer feedback. The company has several unique trained models and uses Comprehend to assign each model an endpoint. The company wants to automate a report on each endpoint that is not used for more than 15 days.

Which AWS service or feature can the company use to meet this requirement?

- A . AWS Trusted Advisor

- B . Amazon CloudWatch

- C . AWS CloudTrail

- D . AWS Config

A

Explanation:

The correct answer is A because AWS Trusted Advisor includes a cost optimization check named Amazon Comprehend Underutilized Endpoints. This check flags Comprehend endpoints that have not received real-time inference requests for more than 15 consecutive days, which matches the requirement exactly. The check runs automatically, so the company does not need to build or maintain custom monitoring logic to produce the report.

Explanation of other options:

B. Amazon CloudWatch collects Comprehend metrics and can raise alarms. However, producing this report would require building and maintaining custom metric queries or alarms for every endpoint.

C. AWS CloudTrail records API activity for auditing. It does not report on endpoint utilization.

D. AWS Config records and evaluates resource configurations. It does not track whether an endpoint receives inference requests.
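The idle-endpoint rule in this scenario (no requests for more than 15 consecutive days) can be sketched in a few lines. The sketch assumes that daily request counts per endpoint have already been collected, for example from each endpoint's CloudWatch metrics. The function names, endpoint names, and data are hypothetical. The code illustrates the rule only and does not reflect any AWS API.

```python
def idle_days(daily_requests: list[int]) -> int:
    """Count consecutive zero-request days at the end of the series (oldest day first)."""
    count = 0
    for n in reversed(daily_requests):
        if n > 0:
            break
        count += 1
    return count


def underutilized_endpoints(usage: dict[str, list[int]], threshold_days: int = 15) -> list[str]:
    """Return endpoints whose trailing run of zero-request days exceeds threshold_days."""
    return sorted(name for name, series in usage.items()
                  if idle_days(series) > threshold_days)


# Hypothetical 30-day usage history per endpoint.
usage = {
    "sentiment-v1": [3] * 10 + [0] * 20,  # idle for the last 20 days
    "sentiment-v2": [2] * 15 + [0] * 15,  # idle for exactly 15 days, not more
    "intent-v1": [5] * 30,                # used every day
}
print(underutilized_endpoints(usage))  # ['sentiment-v1']
```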

HOTSPOT

Select and order the steps from the following list to correctly describe the ML lifecycle for a new custom model. Select each step one time. (Select and order FOUR.)

• Define the business objective.

• Deploy the model.

• Develop and train the model.

• Process the data.

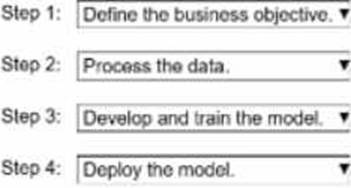

Step 1: Define the business objective.

Step 2: Process the data.

Step 3: Develop and train the model.

Step 4: Deploy the model.

The correct order represents the machine learning lifecycle as defined by AWS in the Amazon SageMaker documentation and the AWS Certified Machine Learning Specialty Study Guide. The lifecycle describes the sequence of tasks required to build, train, and deploy a custom ML model effectively.

From AWS documentation:

"The machine learning process begins with defining the business problem, followed by collecting and processing data, developing and training models, and finally deploying them into production for inference."

Step 1 - Define the business objective:

This step involves clearly identifying the business problem to be solved and determining the measurable outcomes expected from the ML model. This ensures alignment between business goals and ML outputs.

Step 2 - Process the data:

Data is collected, cleaned, transformed, and prepared for training. This includes handling missing values, normalizing data, and performing feature engineering, a crucial phase that influences model performance.
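Two of the data-processing tasks named above, handling missing values and normalizing data, can be sketched in a few lines of Python. The sketch assumes a single numeric feature, mean imputation, and min-max scaling to [0, 1]. These are common choices, not the only ones, and the function name is hypothetical.

```python
from statistics import mean
from typing import Optional


def impute_and_scale(values: list[Optional[float]]) -> list[float]:
    """Fill missing values with the mean of the observed values, then min-max scale to [0, 1]."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    filled = [fill if v is None else v for v in values]
    lo, hi = min(filled), max(filled)
    if hi == lo:  # constant feature: avoid division by zero
        return [0.0 for _ in filled]
    return [(v - lo) / (hi - lo) for v in filled]


print(impute_and_scale([1.0, None, 3.0]))  # [0.0, 0.5, 1.0]
```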

Step 3 - Develop and train the model:

The model is built and trained on the processed data using algorithms appropriate to the problem (e.g., regression, classification, clustering). Hyperparameters are tuned to optimize model accuracy.

Step 4 - Deploy the model:

Once validated, the model is deployed to a production environment (e.g., Amazon SageMaker endpoint) to make predictions on new data. Continuous monitoring and retraining ensure the model remains effective.

Referenced AWS AI/ML Documents and Study Guides:

Amazon SageMaker Developer Guide: Machine Learning Lifecycle

AWS Certified Machine Learning Specialty Study Guide: Model Development Lifecycle

AWS ML Best Practices Whitepaper: End-to-End ML Workflow

A company wants to implement a generative AI assistant to provide consistent responses to various phrasings of user questions.

Which advantages can generative AI provide in this use case?

- A . Low latency and high throughput

- B . Adaptability and responsiveness

- C . Deterministic outputs and fixed responses

- D . Hardware acceleration and GPU optimization

B

Explanation:

The correct answer is B (adaptability and responsiveness), which are core strengths of generative AI models such as the foundation models available in Amazon Bedrock. According to AWS documentation, generative AI systems excel at understanding natural language variations, meaning they can interpret different phrasings, synonyms, sentence structures, and conversational styles while still generating contextually consistent answers. This capability comes from pretraining on diverse natural language corpora, allowing models to generalize across multiple linguistic patterns. AWS highlights that generative AI models are designed to handle “flexible, dynamic, and conversational inputs” and provide responses grounded in understanding user intent rather than matching exact keywords.

Options A and D describe infrastructure performance characteristics, not the reasoning ability required for this use case.

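Option C (deterministic outputs and fixed responses) is incorrect because LLMs generate text by probabilistic sampling. Their outputs approach determinism only when sampling parameters such as temperature are set to minimize randomness. Fixed responses are a property of rule-based systems, not an advantage of generative AI. Therefore, generative AI's adaptability to varied user phrasing makes it ideal for assistants requiring consistent, intent-based responses.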

Referenced AWS Documentation:

Amazon Bedrock Developer Guide: Foundation Model Capabilities

AWS Generative AI Best Practices: Natural Language Understanding

A company is using AI to build a toy recommendation website that suggests toys based on a customer’s interests and age. The company notices that the AI tends to suggest stereotypically gendered toys.

Which AWS service or feature should the company use to investigate the bias?

- A . Amazon Rekognition

- B . Amazon Q Developer

- C . Amazon Comprehend

- D . Amazon SageMaker Clarify

D

Explanation:


Amazon SageMaker Clarify is designed to detect and explain bias in ML models and datasets.

AWS Responsible AI guidance recommends Clarify to:

Identify bias in predictions

Analyze feature attribution

Support fairness and ethical AI practices
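Clarify reports bias as numeric metrics. One simple metric compares how often a positive outcome occurs for two groups (facets). When computed on observed labels, Clarify calls this difference in proportions of labels (DPL). When computed on model predictions, Clarify calls it difference in positive proportions in predicted labels (DPPL). The sketch below computes the difference by hand on a hypothetical recommendation log to show what such a metric measures. It does not call Clarify, and the field names and data are invented for illustration.

```python
def positive_rate(records: list[dict], facet: str, group: str, outcome: str) -> float:
    """Share of records in `group` whose `outcome` is True."""
    rows = [r for r in records if r[facet] == group]
    return sum(1 for r in rows if r[outcome]) / len(rows)


def difference_in_positive_proportions(records: list[dict], facet: str, group_a: str,
                                       group_d: str, outcome: str) -> float:
    """q_a - q_d: 0 means parity; values far from 0 mean one group is favored."""
    return (positive_rate(records, facet, group_a, outcome)
            - positive_rate(records, facet, group_d, outcome))


# Hypothetical log: was a building set recommended to this customer?
log = ([{"gender": "boy", "building_set_recommended": i < 8} for i in range(10)]
       + [{"gender": "girl", "building_set_recommended": i < 2} for i in range(10)])
print(round(difference_in_positive_proportions(
    log, "gender", "boy", "girl", "building_set_recommended"), 2))  # 0.6
```

A value of 0.6 would mean that building sets are recommended to boys 60 percentage points more often than to girls, the kind of skew that the company observed.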

Why the other options are incorrect:

Rekognition (A) analyzes images, not recommendation bias.

Amazon Q Developer (B) assists developers with code.

Comprehend (C) performs NLP tasks, not bias analysis.

AWS AI document references:

Amazon SageMaker Clarify Documentation

Detecting Bias in AI Systems

Responsible AI on AWS

An ecommerce company is deploying a chatbot. The chatbot will give users the ability to ask questions about the company’s products and receive details on users’ orders. The company must implement safeguards for the chatbot to filter harmful content from the input prompts and chatbot responses.

Which AWS feature or resource meets these requirements?

- A . Amazon Bedrock Guardrails

- B . Amazon Bedrock Agents

- C . Amazon Bedrock inference APIs

- D . Amazon Bedrock custom models

A

Explanation:

The ecommerce company is deploying a chatbot that needs safeguards to filter harmful content from input prompts and responses. Amazon Bedrock Guardrails provide configurable safeguards for responsible AI use. These include content filters that detect and block harmful categories, such as hate speech, insults, sexual content, and violence, in both prompts and responses. This makes Guardrails the appropriate feature for this requirement.


From the AWS Bedrock User Guide:

"Amazon Bedrock Guardrails enable developers to implement safeguards for generative AI applications, such as chatbots, by filtering harmful content in input prompts and model responses. Guardrails include content filters, word filters, and denied topics to ensure safe and responsible outputs."

(Source: AWS Bedrock User Guide, Guardrails for Responsible AI)
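To show where a guardrail sits in the chatbot flow, here is a minimal sketch that screens text with the Bedrock Runtime `ApplyGuardrail` operation (boto3's `apply_guardrail`). The same function handles the user prompt (`source="INPUT"`) and the model response (`source="OUTPUT"`). It assumes that a guardrail has already been created, and the guardrail ID and version are placeholders. The helper name `screen_text` is hypothetical, and the client is passed in so that the function can be exercised without AWS credentials.

```python
def screen_text(client, guardrail_id: str, guardrail_version: str,
                text: str, source: str = "INPUT") -> tuple[bool, str]:
    """Run text through a Bedrock guardrail.

    source is "INPUT" for user prompts and "OUTPUT" for model responses.
    Returns (blocked, text_to_use).
    """
    response = client.apply_guardrail(
        guardrailIdentifier=guardrail_id,
        guardrailVersion=guardrail_version,
        source=source,
        content=[{"text": {"text": text}}],
    )
    if response["action"] == "GUARDRAIL_INTERVENED":
        # When the guardrail intervenes, its configured blocked message (or masked text)
        # is returned in "outputs".
        outputs = response.get("outputs", [])
        return True, outputs[0]["text"] if outputs else ""
    return False, text


# Usage sketch (requires AWS credentials and an existing guardrail):
#   import boto3
#   client = boto3.client("bedrock-runtime")
#   blocked, prompt = screen_text(client, "<guardrail-id>", "1", user_prompt, "INPUT")
#   ...call the model only if not blocked, then screen its reply with source="OUTPUT"...
```

A guardrail can also be attached directly to a model call, for example through the `guardrailConfig` parameter of the Converse API, instead of being applied as a separate step.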

Detailed explanation:

Option A: Amazon Bedrock Guardrails. This is the correct answer. Amazon Bedrock Guardrails are specifically designed to filter harmful content from chatbot inputs and responses, ensuring safe interactions for users.

Option B: Amazon Bedrock Agents. Amazon Bedrock Agents are used to automate tasks and integrate with external tools, not to filter harmful content. This option does not meet the requirement.

Option C: Amazon Bedrock inference APIs. Amazon Bedrock inference APIs allow users to invoke foundation models to generate responses, but they do not filter harmful content on their own.

Option D: Amazon Bedrock custom models. Custom models on Amazon Bedrock allow users to fine-tune models, but they do not inherently include safeguards for filtering harmful content unless explicitly implemented.

Reference: AWS Bedrock User Guide: Guardrails for Responsible AI (https://docs.aws.amazon.com/bedrock/latest/userguide/guardrails.html)

AWS AI Practitioner Learning Path: Module on Responsible AI and Model Safety

Amazon Bedrock Developer Guide: Building Safe AI Applications (https://aws.amazon.com/bedrock/)

A company is training a foundation model (FM). The company wants to increase the accuracy of the model up to a specific acceptance level.

Which solution will meet these requirements?

- A . Decrease the batch size.

- B . Increase the epochs.

- C . Decrease the epochs.

- D . Increase the temperature parameter.

B

Explanation:

Increasing the number of epochs during model training allows the model to learn from the data over more iterations, potentially improving its accuracy up to a certain point. This is a common practice when attempting to reach a specific level of accuracy.
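The idea of adding epochs until an acceptance level is reached can be sketched with a toy perceptron in pure Python. The data, learning rate, 95% target, and function name are hypothetical. To keep the sketch short, accuracy is measured on the training data only. In practice, you would measure it on a held-out validation set, because extra epochs eventually cause overfitting.

```python
def train_until_accuracy(data, target_accuracy=0.95, max_epochs=100, lr=0.1):
    """Run perceptron epochs until training accuracy reaches target_accuracy.

    data: list of ((x1, x2), label) pairs with label in {0, 1}.
    Returns (epochs_used, accuracy).
    """
    w1 = w2 = bias = 0.0

    def predict(x1, x2):
        return 1 if w1 * x1 + w2 * x2 + bias > 0 else 0

    def accuracy():
        return sum(predict(x1, x2) == y for (x1, x2), y in data) / len(data)

    for epoch in range(1, max_epochs + 1):
        for (x1, x2), y in data:  # one epoch = one full pass over the training data
            error = y - predict(x1, x2)
            w1 += lr * error * x1
            w2 += lr * error * x2
            bias += lr * error
        acc = accuracy()
        if acc >= target_accuracy:  # acceptance level reached: stop adding epochs
            return epoch, acc
    return max_epochs, accuracy()


# Toy, linearly separable data (hypothetical).
data = [((0.0, 0.0), 0), ((0.2, 0.1), 0), ((0.1, 0.3), 0),
        ((1.0, 1.0), 1), ((0.9, 1.2), 1), ((1.3, 0.8), 1)]
print(train_until_accuracy(data))  # (2, 1.0)
```

On this data, one epoch leaves the model at 50% accuracy, and a second epoch reaches the target. Stopping too early (fewer epochs) would leave the model below the acceptance level.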

Option B (Correct): "Increase the epochs": This is the correct answer because increasing epochs allows the model to learn more from the data, which can lead to higher accuracy.

Option A: "Decrease the batch size" is incorrect because batch size mainly affects training speed, memory use, and the noise in each gradient update. It does not directly drive the model toward a specific accuracy level.

Option C: "Decrease the epochs" is incorrect as it would reduce the training time, possibly preventing the model from reaching the desired accuracy.

Option D: "Increase the temperature parameter" is incorrect because temperature affects the randomness of predictions, not model accuracy.
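Temperature is applied at inference time, when the model's raw scores (logits) are converted into sampling probabilities. It does not change the trained weights. A minimal sketch of temperature-scaled softmax, using hypothetical logits, shows the effect. Lower temperatures concentrate probability on the top choice, and higher temperatures spread it out. The ranking of choices, and therefore the model's most likely answer, stays the same.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert logits to probabilities; lower temperature sharpens, higher flattens."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]  # hypothetical next-token scores from a trained model
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```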

AWS AI Practitioner Reference: Model Training Best Practices on AWS. AWS suggests adjusting training parameters, such as the number of epochs, to improve model performance.

A company wants to use AI to protect its application from threats. The AI solution needs to check if an IP address is from a suspicious source.

Which solution meets these requirements?

- A . Build a speech recognition system

- B . Create a natural language processing (NLP) named entity recognition system

- C . Develop an anomaly detection system

- D . Create a fraud forecasting system

C

Explanation:

Anomaly detection identifies unusual behavior (such as suspicious IP traffic) compared to normal baselines.
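Anomaly detection covers many techniques. As a minimal sketch, assume that per-IP request counts for a time window are available. The IP addresses below come from documentation ranges, and the counts are made up. A robust statistical baseline can flag outliers. The sketch uses the modified z-score based on the median absolute deviation (MAD), with the commonly used 3.5 cutoff. It is one simple technique, not how any particular AWS service works, and production systems typically learn baselines from many more signals.

```python
from statistics import median


def suspicious_ips(requests_per_ip: dict[str, int], threshold: float = 3.5) -> list[str]:
    """Flag IPs whose request count is far from typical, using the modified z-score.

    modified z = 0.6745 * (x - median) / MAD, where MAD is the median absolute deviation.
    """
    counts = list(requests_per_ip.values())
    med = median(counts)
    mad = median([abs(c - med) for c in counts])
    if mad == 0:
        return []  # no spread in the baseline: this simple rule cannot score deviations
    return sorted(ip for ip, c in requests_per_ip.items()
                  if abs(0.6745 * (c - med) / mad) > threshold)


traffic = {
    "198.51.100.7": 100, "198.51.100.8": 110, "198.51.100.9": 95,
    "203.0.113.4": 105, "203.0.113.5": 98, "203.0.113.66": 5000,
}
print(suspicious_ips(traffic))  # ['203.0.113.66']
```

The median and MAD are used instead of the mean and standard deviation because a single extreme IP would otherwise inflate the baseline and hide itself.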

Speech recognition (A) is irrelevant.

Named entity recognition (B) extracts entities from text. It does not detect malicious IP addresses.

Fraud forecasting (D) predicts fraudulent transactions. It does not directly assess whether IP activity is suspicious.

Reference: AWS Documentation: Anomaly Detection

A company uses an open-source pre-trained model to analyze user sentiment for a newly released product.

Which action must the company perform, according to MLOps best practices?

- A . Use deep learning to perform hyperparameter tuning.

- B . Collect user reviews and label each review as positive or negative.

- C . Continuously monitor outputs in production.

- D . Perform feature engineering on the input dataset.

C

Explanation:


According to MLOps best practices, once an ML model is deployed to production (especially an open-source pre-trained model), the organization must continuously monitor model outputs to ensure that:

Model predictions remain accurate over time

Performance does not degrade because of data drift or concept drift

Outputs remain aligned with business and ethical expectations

AWS MLOps guidance emphasizes monitoring in production as a mandatory step for maintaining model reliability and governance.
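Amazon SageMaker Model Monitor provides managed monitoring on AWS. The sketch below does not use its API. Instead, it illustrates one common drift statistic, the population stability index (PSI). The PSI compares the share of each predicted sentiment in production against a baseline. The baseline shares, the production predictions, and the 0.2 alert threshold (a widely used rule of thumb) are assumptions for illustration.

```python
import math


def population_stability_index(baseline: list[float], production: list[float],
                               eps: float = 1e-6) -> float:
    """PSI = sum((p - q) * ln(p / q)) over matching bins of two distributions."""
    psi = 0.0
    for q, p in zip(baseline, production):
        q, p = max(q, eps), max(p, eps)  # avoid log(0) for empty bins
        psi += (p - q) * math.log(p / q)
    return psi


def sentiment_shares(predictions: list[str], labels=("positive", "negative")) -> list[float]:
    """Fraction of predictions that fall into each sentiment label."""
    return [predictions.count(label) / len(predictions) for label in labels]


baseline = [0.5, 0.5]  # hypothetical shares observed when the model was validated
production = sentiment_shares(["positive"] * 80 + ["negative"] * 20)
psi = population_stability_index(baseline, production)
if psi > 0.2:  # assumed alert threshold
    print(f"Prediction drift detected (PSI={psi:.2f}); review the model")
```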

Why the other options are incorrect:

A (Hyperparameter tuning) is optional and model-dependent.

B (Labeling data) is required only when training or fine-tuning, not when using a pre-trained model directly.

D (Feature engineering) is less relevant for modern pre-trained NLP models.

AWS AI document references:

MLOps Best Practices on AWS

Amazon SageMaker Model Monitor

Operationalizing Machine Learning on AWS