Practice Free Databricks Certified Data Analyst Associate Exam Online Questions

What is a benefit of using Databricks SQL for business intelligence (BI) analytics projects instead of using third-party BI tools?

- A . Computations, data, and analytical tools on the same platform

- B . Advanced dashboarding capabilities

- C . Simultaneous multi-user support

- D . Automated alerting systems

A

Explanation:

Databricks SQL offers a unified platform where computations, data storage, and analytical tools coexist seamlessly. This integration allows business intelligence (BI) analytics projects to be executed more efficiently, as users can perform data processing and analysis without the need to transfer data between disparate systems. By consolidating these components, Databricks SQL streamlines workflows, reduces latency, and enhances data governance. While third-party BI tools may offer advanced dashboarding capabilities, simultaneous multi-user support, and automated alerting systems, they often require integration with separate data processing platforms, which can introduce complexity and potential inefficiencies.

Reference: Databricks AI & BI: Transform Data into Actionable Insights

Data professionals with varying responsibilities use the Databricks Lakehouse Platform.

Which role in the Databricks Lakehouse Platform uses Databricks SQL as its primary service?

- A . Data scientist

- B . Data engineer

- C . Platform architect

- D . Business analyst

D

Explanation:

In the Databricks Lakehouse Platform, business analysts primarily utilize Databricks SQL as their main service. Databricks SQL provides an environment tailored for executing SQL queries, creating visualizations, and developing dashboards, which aligns with the typical responsibilities of business analysts who focus on interpreting data to inform business decisions. While data scientists and data engineers also interact with the Databricks platform, their primary tools and services differ; data scientists often engage with machine learning frameworks and notebooks, whereas data engineers focus on data pipelines and ETL processes. Platform architects are involved in designing and overseeing the infrastructure and architecture of the platform. Therefore, among the roles listed, business analysts are the primary users of Databricks SQL.

Reference: The scope of the lakehouse platform

A data engineering team has created a Structured Streaming pipeline that processes data in micro-batches and populates gold-level tables. The micro-batches are triggered every minute. A data analyst has created a dashboard based on this gold-level data. The project stakeholders want to see the results in the dashboard updated within one minute or less of new data becoming available in the gold-level tables.

Which of the following cautions should the data analyst share prior to setting up the dashboard to complete this task?

- A . The required compute resources could be costly

- B . The gold-level tables are not appropriately clean for business reporting

- C . The streaming data is not an appropriate data source for a dashboard

- D . The streaming cluster is not fault tolerant

- E . The dashboard cannot be refreshed that quickly

A

Explanation:

To show results within one minute of new data arriving, the dashboard's queries must run roughly every minute. The SQL warehouse (or other compute) serving the dashboard therefore has to stay running almost continuously instead of auto-stopping when idle, on top of the streaming pipeline that already processes a micro-batch every minute. This could result in a significant cost for the organization, especially if the data volume and velocity are large. Therefore, the data analyst should share this caution with the project stakeholders before setting up the dashboard and weigh the desired refresh rate against the available budget.

The other options are not valid cautions because:

B. The gold-level tables are assumed to be appropriately clean for business reporting, as they are the final output of the data engineering pipeline. If the data quality is not satisfactory, the issue should be addressed at the source or silver level, not at the gold level.

C. The streaming data is an appropriate data source for a dashboard, as it can provide near real-time insights and analytics for the business users. Structured Streaming supports various sources and sinks for streaming data, including Delta Lake, which can enable both batch and streaming queries on the same data.

D. The streaming cluster is fault tolerant, as Structured Streaming provides end-to-end exactly-once fault-tolerance guarantees through checkpointing and write-ahead logs. If a query fails, it can be restarted from the last checkpoint and resume processing.

E. The dashboard can be refreshed within one minute of new data becoming available in the gold-level tables. The gold-level tables are already updated by one-minute micro-batches (Structured Streaming can trigger micro-batches even more frequently), and Databricks SQL can schedule query and dashboard refreshes at intervals as short as one minute. However, such a frequent refresh may not be necessary or optimal for the business use case, as it could cause frequent changes in the dashboard and consume more resources.
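To make the checkpointing argument in option D concrete, here is a toy Python model of checkpoint-based recovery. It is a sketch under simplifying assumptions, not Spark's implementation: `Checkpoint`, `Sink`, and `run_micro_batches` are hypothetical names, the "source" is an in-memory list, and a failure is only simulated before a batch is written. It shows the core idea: a restarted query resumes from the last committed offset, so each record reaches the sink exactly once.

```python
# Toy model of checkpoint-based recovery; NOT Spark's implementation.
# Checkpoint, Sink, and run_micro_batches are hypothetical names.
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Checkpoint:
    # Offset just past the last micro-batch that was written and committed.
    committed_offset: int = 0


@dataclass
class Sink:
    rows: List[int] = field(default_factory=list)


def run_micro_batches(source: List[int], checkpoint: Checkpoint, sink: Sink,
                      batch_size: int, fail_at: Optional[int] = None) -> None:
    """Process source in micro-batches, resuming at checkpoint.committed_offset.

    If fail_at is given, raise before writing the batch that contains that
    offset, simulating a crash mid-stream.
    """
    offset = checkpoint.committed_offset
    while offset < len(source):
        end = min(offset + batch_size, len(source))
        if fail_at is not None and offset <= fail_at < end:
            raise RuntimeError(f"simulated failure in batch [{offset}, {end})")
        # In this toy, nothing can fail between the write and the commit,
        # so each batch is either fully written and committed or not at all.
        sink.rows.extend(source[offset:end])
        checkpoint.committed_offset = end
        offset = end


source = list(range(10))
checkpoint, sink = Checkpoint(), Sink()
try:
    run_micro_batches(source, checkpoint, sink, batch_size=3, fail_at=7)
except RuntimeError:
    pass  # batches [0, 3) and [3, 6) were committed before the failure
run_micro_batches(source, checkpoint, sink, batch_size=3)  # resumes at offset 6
print(sink.rows == source)  # every record written exactly once
```

Real Structured Streaming also relies on replayable sources and idempotent or transactional sinks (such as Delta Lake) to keep the write and the commit consistent; the toy sidesteps that by never failing between them.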

Reference: Streaming on Databricks, Monitoring Structured Streaming queries on Databricks, A look at the new Structured Streaming UI in Apache Spark 3.0, Run your first Structured Streaming workload

Which of the following benefits of using Databricks SQL is provided by Data Explorer?

- A . It can be used to run UPDATE queries to update any tables in a database.

- B . It can be used to view metadata and data, as well as view/change permissions.

- C . It can be used to produce dashboards that allow data exploration.

- D . It can be used to make visualizations that can be shared with stakeholders.

- E . It can be used to connect to third-party BI tools.

B

Explanation:

Data Explorer is a user interface that allows you to discover and manage data, schemas, tables, models, and permissions in Databricks SQL. You can use Data Explorer to view schema details, preview sample data, and see table and model details and properties. Administrators can view and change owners, and admins and data object owners can grant and revoke permissions [1].

Reference: Discover and manage data using Data Explorer

A data analyst creates a Databricks SQL Query where the result set has the following schema:

region STRING

number_of_customer INT

When the analyst clicks on the "Add visualization" button on the SQL Editor page, which of the following types of visualizations will be selected by default?

- A . Violin Chart

- B . Line Chart

- C . Bar Chart

- D . Histogram

- E . There is no default. The user must choose a visualization type.

C

Explanation:

According to the Databricks SQL documentation, when a data analyst clicks the “Add visualization” button on the SQL Editor page, the visualization editor opens with Bar Chart selected by default. For this result set, the STRING column (region) fits naturally on the X-axis and the INT column (number_of_customer) on the Y-axis. A bar chart is well suited to comparing a numeric value across categories, such as the number of customers per region.

Reference: Visualization in Databricks SQL, Visualization types

Which of the following is a benefit of Databricks SQL using ANSI SQL as its standard SQL dialect?

- A . It has increased customization capabilities

- B . It is easy to migrate existing SQL queries to Databricks SQL

- C . It allows for the use of Photon’s computation optimizations

- D . It is more performant than other SQL dialects

- E . It is more compatible with Spark’s interpreters

B

Explanation:

Databricks SQL uses ANSI SQL as its standard SQL dialect, which means it follows the SQL specifications defined by the American National Standards Institute (ANSI). This makes it easier to migrate existing SQL queries from other data warehouses or platforms that also use ANSI SQL or a similar dialect, such as PostgreSQL, Oracle, or Teradata. By using ANSI SQL, Databricks SQL avoids surprises in behavior or unfamiliar syntax that may arise from using a non-standard SQL dialect, such as Spark SQL or Hive SQL [1][2]. Databricks SQL also adds compatibility features that support common SQL constructs widely used in other data warehouses, such as QUALIFY, FILTER, and user-defined functions [2].

Reference: ANSI compliance in Databricks Runtime, Evolution of the SQL language at Databricks: ANSI standard by default and easier migrations from data warehouses

In which of the following situations should a data analyst use higher-order functions?

- A . When custom logic needs to be applied to simple, unnested data

- B . When custom logic needs to be converted to Python-native code

- C . When custom logic needs to be applied at scale to array data objects

- D . When built-in functions are taking too long to perform tasks

- E . When built-in functions need to run through the Catalyst Optimizer

C

Explanation:

Higher-order functions are a simple extension to SQL for manipulating nested data such as arrays. A higher-order function takes an array together with a lambda function: the lambda defines how each element is processed, and the higher-order function defines how those per-element results form the final result. This lets you manipulate arrays in SQL without unpacking and repacking them, writing user-defined functions (UDFs), or relying on a limited set of built-in functions. Higher-order functions also provide a performance benefit over UDFs.
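The semantics described above can be sketched in plain Python. This is an illustration only, assuming the behavior of the Databricks SQL functions `transform`, `filter`, `aggregate`, and `exists` (shown in the comments); the Python helpers, including the hypothetical name `filter_array`, are not Spark code and do not run at scale. Each helper receives an array plus a lambda, and the lambda alone decides what happens to each element.

```python
# Plain-Python analogues of SQL higher-order functions (illustration only).
from functools import reduce
from typing import Any, Callable, List, Optional


def transform(arr: List[Any], fn: Callable[[Any], Any]) -> List[Any]:
    """Like SQL transform(arr, x -> fn(x)): apply fn to every element."""
    return [fn(x) for x in arr]


def filter_array(arr: List[Any], pred: Callable[[Any], bool]) -> List[Any]:
    """Like SQL filter(arr, x -> pred(x)): keep elements where pred is true."""
    return [x for x in arr if pred(x)]


def aggregate(arr: List[Any], start: Any, merge: Callable[[Any, Any], Any],
              finish: Optional[Callable[[Any], Any]] = None) -> Any:
    """Like SQL aggregate(arr, start, (acc, x) -> merge [, acc -> finish])."""
    acc = reduce(merge, arr, start)
    return finish(acc) if finish is not None else acc


def exists(arr: List[Any], pred: Callable[[Any], bool]) -> bool:
    """Like SQL exists(arr, x -> pred(x)): true if any element matches."""
    return any(pred(x) for x in arr)


# One hypothetical row of nested data: an order with an array of item prices.
order = {"order_id": 1, "item_prices": [10, 25, 40]}
prices = order["item_prices"]

doubled = transform(prices, lambda x: x * 2)           # transform(item_prices, x -> x * 2)
expensive = filter_array(prices, lambda x: x > 20)     # filter(item_prices, x -> x > 20)
total = aggregate(prices, 0, lambda acc, x: acc + x)   # aggregate(item_prices, 0, (acc, x) -> acc + x)
average = aggregate(prices, (0, 0),
                    lambda acc, x: (acc[0] + x, acc[1] + 1),
                    lambda acc: acc[0] / acc[1])       # running (sum, count), then sum / count
has_big_item = exists(prices, lambda x: x > 30)        # exists(item_prices, x -> x > 30)
print(doubled, expensive, total, average, has_big_item)
```

The point of the pattern is that the array never has to be exploded into rows and regrouped; the per-element logic travels with the function call.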

Reference: Higher-order functions | Databricks on AWS, Working with Nested Data Using Higher Order Functions in SQL on Databricks | Databricks Blog, Higher-order functions – Azure Databricks | Microsoft Learn, Optimization recommendations on Databricks | Databricks on AWS

A data organization has a team of engineers developing data pipelines following the medallion architecture using Delta Live Tables. While the data analysis team working on a project is using gold-layer tables from these pipelines, they need to perform some additional processing of these tables prior to performing their analysis.

Which of the following terms is used to describe this type of work?

- A . Data blending

- B . Last-mile

- C . Data testing

- D . Last-mile ETL

- E . Data enhancement

D

Explanation:

Last-mile ETL is the term used to describe the additional processing of data that is done by data analysts or data scientists after the data has been ingested, transformed, and stored in the lakehouse by data engineers. Last-mile ETL typically involves tasks such as data cleansing, data enrichment, data aggregation, data filtering, or data sampling that are specific to the analysis or machine learning use case. Last-mile ETL can be done using Databricks SQL, Databricks notebooks, or Databricks Machine Learning.
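As a small illustration of last-mile ETL, the sketch below filters and aggregates rows that are assumed to come from an already-cleaned gold-layer table. The table contents, column names, and the function `last_mile_monthly_revenue` are hypothetical. In Databricks SQL the same step would usually be a `WHERE` plus `GROUP BY` query; Python is used here only to show the shape of the work.

```python
# Hypothetical last-mile ETL: shaping gold-layer rows for one specific analysis.
from collections import defaultdict
from typing import Dict, List

# Hypothetical rows from a gold-layer sales table (already cleaned upstream).
gold_sales: List[dict] = [
    {"region": "EMEA", "month": "2024-01", "revenue": 1200.0},
    {"region": "EMEA", "month": "2024-01", "revenue": 300.0},
    {"region": "EMEA", "month": "2024-02", "revenue": 900.0},
    {"region": "APAC", "month": "2024-01", "revenue": 700.0},
]


def last_mile_monthly_revenue(rows: List[dict], region: str) -> Dict[str, float]:
    """Filter rows to one region, then total revenue per month."""
    totals: Dict[str, float] = defaultdict(float)
    for row in rows:
        if row["region"] == region:                 # filtering step
            totals[row["month"]] += row["revenue"]  # aggregation step
    return dict(sorted(totals.items()))             # months in ascending order


emea_monthly = last_mile_monthly_revenue(gold_sales, "EMEA")
print(emea_monthly)
```

The engineering pipeline stays generic; only the analysis-specific filter and aggregation live with the analyst.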

Reference: Databricks – Last-mile ETL, Databricks – Data Analysis with Databricks SQL

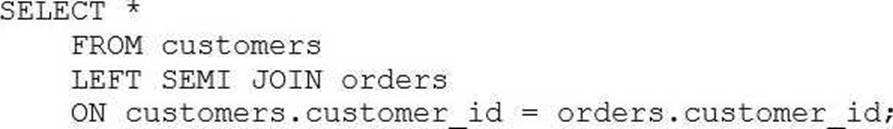

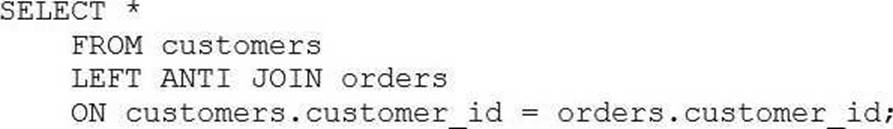

Consider the following two statements:

Statement 1:

Statement 2:

Which of the following describes how the result sets will differ for each statement when they are run in Databricks SQL?

- A . The first statement will return all data from the customers table and matching data from the orders table. The second statement will return all data from the orders table and matching data from the customers table. Any missing data will be filled in with NULL.

- B . When the first statement is run, only rows from the customers table that have at least one match with the orders table on customer_id will be returned. When the second statement is run, only those rows in the customers table that do not have at least one match with the orders table on customer_id will be returned.

- C . There is no difference between the result sets for both statements.

- D . Both statements will fail because Databricks SQL does not support those join types.

- E . When the first statement is run, all rows from the customers table will be returned and only the customer_id from the orders table will be returned. When the second statement is run, only those rows in the customers table that do not have at least one match with the orders table on customer_id will be returned.

B

Explanation:

Based on the images, the two statements are SQL queries for different types of joins between the customers and orders tables. A join is a way of combining the rows from two table references based on some criteria. The join type determines how the rows are matched and what kind of result set is returned. The first statement is a query for a LEFT SEMI JOIN, which returns only the rows from the left table reference (customers) that have a match with the right table reference (orders) on the join condition (customer_id). The second statement is a query for a LEFT ANTI JOIN, which returns only the rows from the left table reference (customers) that have no match with the right table reference (orders) on the join condition (customer_id). Therefore, the result sets for the two statements will differ in the following way:

The first statement will return a subset of the customers table that contains only the customers who have placed at least one order. The number of rows returned will be less than or equal to the number of rows in the customers table, depending on how many customers have orders. The number of columns returned will be the same as the number of columns in the customers table, as the LEFT SEMI JOIN does not include any columns from the orders table.

The second statement will return a subset of the customers table that contains only the customers who have not placed any order. The number of rows returned will be less than or equal to the number of rows in the customers table, depending on how many customers have no orders. The number of columns returned will be the same as the number of columns in the customers table, as the LEFT ANTI JOIN does not include any columns from the orders table.
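The row-level behavior of the two join types can be sketched in Python, assuming (as the explanation states) that both statements join on equality of customer_id. The sample tables and the helpers `left_semi_join` and `left_anti_join` are hypothetical, and the sketch ignores SQL NULL semantics. Note that a customer with several orders still appears only once in the semi join, and no columns from orders are returned by either join.

```python
# Hypothetical sketch of LEFT SEMI JOIN vs LEFT ANTI JOIN row semantics.
# Ignores SQL NULL handling; assumes an equality join on a single key.
from typing import List

customers: List[dict] = [
    {"customer_id": 1, "name": "Ana"},
    {"customer_id": 2, "name": "Ben"},
    {"customer_id": 3, "name": "Chen"},
]
orders: List[dict] = [
    {"order_id": 10, "customer_id": 1},
    {"order_id": 11, "customer_id": 1},  # second order for customer 1
    {"order_id": 12, "customer_id": 3},
]


def left_semi_join(left: List[dict], right: List[dict], key: str) -> List[dict]:
    """Left rows with at least one match on key; only left columns, no duplicates."""
    right_keys = {row[key] for row in right}
    return [row for row in left if row[key] in right_keys]


def left_anti_join(left: List[dict], right: List[dict], key: str) -> List[dict]:
    """Left rows with no match on key; only left columns."""
    right_keys = {row[key] for row in right}
    return [row for row in left if row[key] not in right_keys]


with_orders = left_semi_join(customers, orders, "customer_id")
without_orders = left_anti_join(customers, orders, "customer_id")
print(with_orders)     # customers 1 and 3
print(without_orders)  # customer 2
```

Together the two results partition the customers table: every customer lands in exactly one of them.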

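The other options are not correct because:

A. This describes a LEFT OUTER JOIN followed by a RIGHT OUTER JOIN, which return columns from both tables and fill unmatched values with NULL. A LEFT SEMI JOIN and a LEFT ANTI JOIN return only columns from the customers table.

C. The result sets differ: the first keeps only customers with a match in the orders table, and the second keeps only customers without one.

D. Databricks SQL supports both LEFT SEMI JOIN and LEFT ANTI JOIN.

E. The first statement does not return all rows from the customers table, only those with a match, and a LEFT SEMI JOIN returns no columns from the orders table, including customer_id.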

Which statement describes descriptive statistics?

- A . A branch of statistics that uses a variety of data analysis techniques to infer properties of an underlying distribution of probability.

- B . A branch of statistics that uses summary statistics to categorically describe and summarize data.

- C . A branch of statistics that uses summary statistics to quantitatively describe and summarize data.

- D . A branch of statistics that uses quantitative variables that must take on a finite or countably infinite set of values.

C

Explanation:

Descriptive statistics refer to statistical methods used to describe and summarize the basic features of data in a study. They provide simple summaries about the sample and the measures, often including metrics such as mean, median, mode, range, and standard deviation. Databricks learning materials highlight that descriptive statistics use summary statistics to quantitatively describe and summarize data, providing insight into data distributions without making inferences or predictions.
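The summary metrics listed above can be computed with Python's standard `statistics` module. The sample data is hypothetical, and `describe` is an illustrative helper, not a Databricks function. The sketch reports the population standard deviation (`pstdev`); `statistics.stdev` would give the sample estimate instead, which is the usual choice when the data is a sample from a larger population.

```python
# Descriptive statistics on a hypothetical list of numbers.
import statistics
from typing import Dict, List, Union

Number = Union[int, float]


def describe(values: List[Number]) -> Dict[str, Number]:
    """Quantitatively summarize values without making any inference."""
    return {
        "count": len(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "mode": statistics.mode(values),
        "range": max(values) - min(values),
        # Population standard deviation; use statistics.stdev for a sample.
        "std_dev": statistics.pstdev(values),
    }


data = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical sample
summary = describe(data)
print(summary)
```

Every value in the summary describes only this data set; drawing conclusions about a wider population would move into inferential statistics.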